Hands-on with ChatGPT o1-preview, OpenAI's Latest Innovation

This new model is capable of advanced "reasoning," but it's still in preview mode.

Even with recent turnover in the executive ranks, OpenAI has shipped two big features in the span of a couple of weeks: ChatGPT Advanced Voice Mode and o1-preview, a model that, according to the company, can “perform complex reasoning.”

Photo: ChatGPT/Dan Ackerman

Though new, o1-preview isn’t the long-awaited GPT-5. It’s an offshoot that uses “chain of thought” to better handle complex tasks that require many steps. This improves the model's problem-solving skills, though it also comes with some limitations.

How o1-preview works: Chain-of-thought reasoning

In January of 2022, a group of nine researchers at Google released a paper titled “Chain-of-Thought Prompting Elicits Reasoning in Large Language Models.” The paper found that prompting an LLM to work through a problem led to more accurate results in complex problems that require multiple steps or a deeper understanding of what the problem asks.
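As a rough illustration of the prompting idea the paper describes, here is a minimal Python sketch that builds a few-shot prompt in which each example shows its reasoning before the answer. The function name `build_cot_prompt` and the example questions are made up for illustration. This is not OpenAI's implementation, and it only assembles the prompt text without calling any model.

```python
def build_cot_prompt(question, exemplars):
    """Build a few-shot prompt whose examples spell out their reasoning.

    Hypothetical helper for illustration only: it assembles text and
    does not call any model or API.
    """
    parts = []
    for ex_question, ex_reasoning, ex_answer in exemplars:
        # Each exemplar shows the worked steps first, then the final answer.
        parts.append(
            f"Q: {ex_question}\nA: {ex_reasoning} The answer is {ex_answer}."
        )
    # The new question ends with an open "A:" for the model to complete.
    parts.append(f"Q: {question}\nA:")
    return "\n\n".join(parts)


# Illustrative exemplar written for this sketch, not taken from the paper.
EXEMPLARS = [
    (
        "A box holds 6 eggs. How many eggs are in 4 boxes?",
        "Each box holds 6 eggs and there are 4 boxes, so 6 * 4 = 24.",
        "24",
    ),
]

prompt = build_cot_prompt(
    "I have 3 bags with 5 apples each and eat 2 apples. How many are left?",
    EXEMPLARS,
)
print(prompt)
```

The worked example nudges the model to write out its own intermediate steps for the new question rather than jumping straight to a number.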

Exactly how OpenAI implements chain-of-thought is a mystery because, despite its name, OpenAI is tight-lipped about how its models work. But OpenAI is clear that o1-preview uses chain-of-thought, and even provides a summary of the steps in its chain-of-thought alongside its output.

To test this new model out, I tried a few things that standard LLMs struggle with.

Three problems o1-preview can solve (that AI models usually can’t)

A patio for my shed

I recently built a patio area around the shed in my backyard. I used 12-inch square concrete tiles that line the east and north sides of the shed. That meant I had to calculate how many tiles I would need. It’s not an entirely straightforward problem. Each section of the patio is a different size, and there’s an area where they meet that must be properly accounted for.

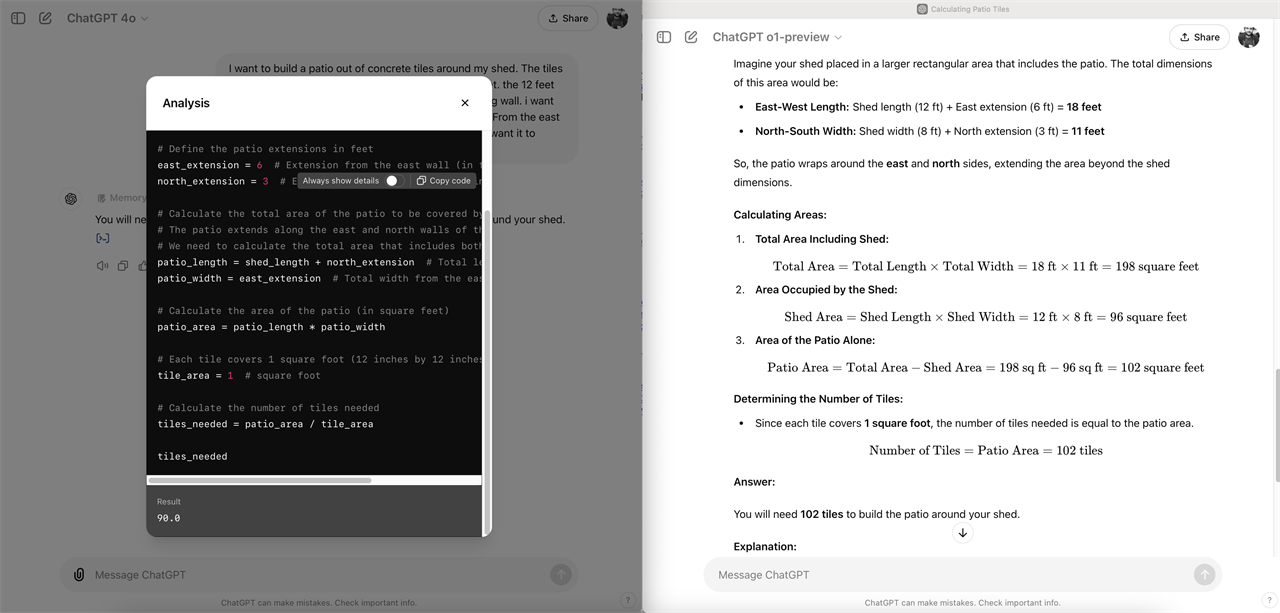

Photo: ChatGPT, Matthew Smith. Solution from GPT-4o on the left, from o1-preview on the right

GPT-4o tried to solve this problem by writing Python code, which is neat, but it concluded I should buy 90 tiles, which is wrong. I tried this several times; in some replies GPT-4o did not write code, but still reached the wrong answer.

The o1-preview model, though, spat out the correct answer, which is 102 tiles. It correctly understood the layout of the space around the shed, calculating the combined area of the shed and patio and then subtracting the area of the shed. Because each tile covers exactly one square foot, the remaining area in square feet is the number of tiles.
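The subtract-the-shed approach can be sketched in a few lines of Python. The dimensions below are hypothetical placeholders, not the actual measurements of my shed or patio (so the result is not 102), and the sketch assumes whole-foot dimensions and 12-inch tiles that each cover one square foot.

```python
def tiles_needed(shed_width_ft, shed_depth_ft, east_patio_ft, north_patio_ft):
    """Count 1 sq ft tiles for an L-shaped patio on the east and north sides.

    All parameter names and values are assumptions made for this sketch.
    """
    # Outer rectangle: the shed plus the patio strips on two sides.
    total_area = (shed_width_ft + east_patio_ft) * (shed_depth_ft + north_patio_ft)
    shed_area = shed_width_ft * shed_depth_ft
    # What remains is the patio, including the corner where the strips meet.
    return total_area - shed_area


# Hypothetical example: a 10 ft x 8 ft shed, a 3 ft strip to the east
# and a 4 ft strip to the north.
print(tiles_needed(10, 8, 3, 4))  # 76

# Cross-check by adding the pieces: north strip + east strip + corner.
print(10 * 4 + 8 * 3 + 3 * 4)  # 76
```

Adding the pieces separately gives the same total only when the corner is included, and that corner is the area that is easy to miss or count twice.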

Personally, I solved the problem by drawing a map, which works but is arguably much less efficient than how o1-preview solved it.

Building a website

Next up, I tasked o1-preview with building a basic version of my personal website from my plain text prompt. I provided specifics about the design (responsive) and asked for a 3x3 grid of placeholder images with links I could change later to insert articles from my own portfolio. I also asked to use the Aptos font.

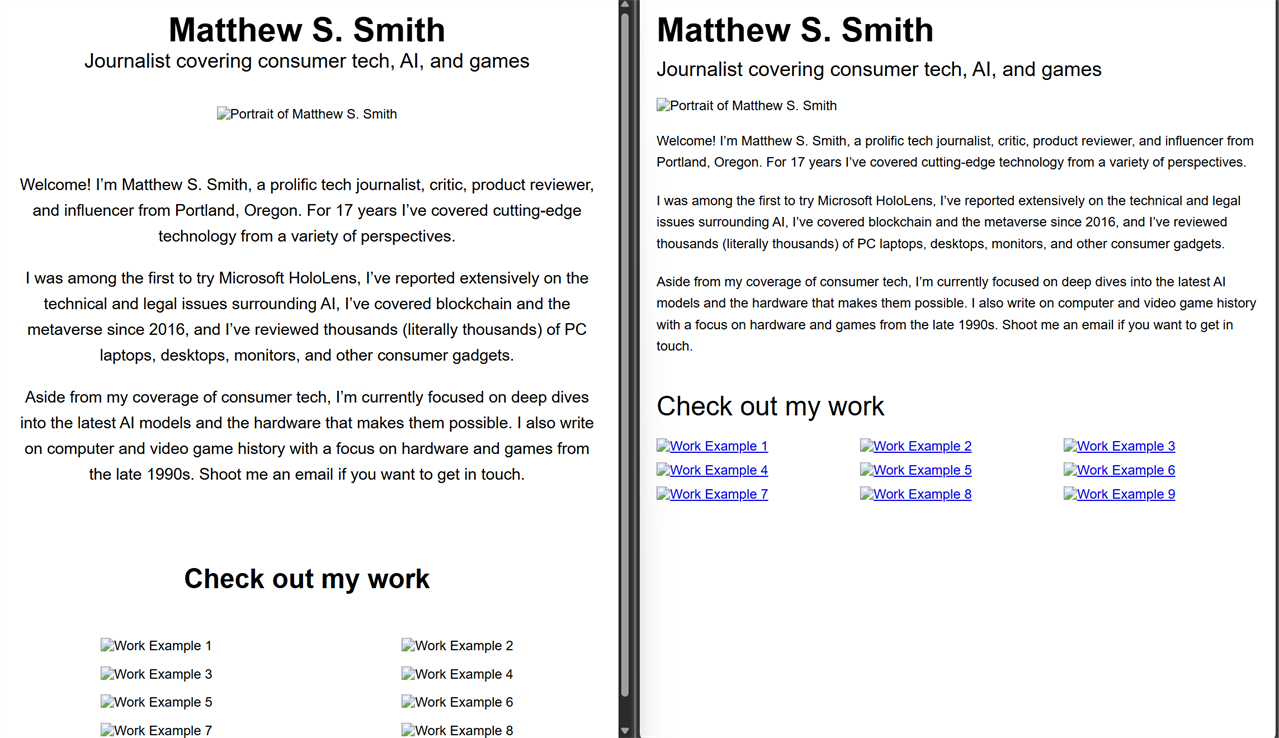

Photo: ChatGPT/Matthew Smith. Website from GPT-4o on the left, from o1-preview on the right

GPT-4o included everything I asked for, but missed on the details. It made the odd choice to center-align the entire website, and the 3x3 grid didn’t work as a 3x3 grid, instead changing dynamically to the size of the browser in which it was viewed.

The code from o1-preview wasn’t perfect either, but it was closer. It didn’t center-align the website and did a better job of producing the 3x3 grid, which functions correctly. It also spotted a problem with the font: Aptos is not broadly supported by browsers, so it recommended adding code to provide the font files.

Summarizing an academic paper

I recently wrote about LLMs with long context windows for IEEE Spectrum magazine and highlighted a paper, LongRAG, which used long context windows alongside another technique called retrieval augmented generation.

It’s a complex paper, and summarizing its conclusions isn’t easy, so I set both GPT-4o and o1-preview to the task. I made two specific requests: that the summary be about 250 words and that it include the three most relevant, important examples from the paper.

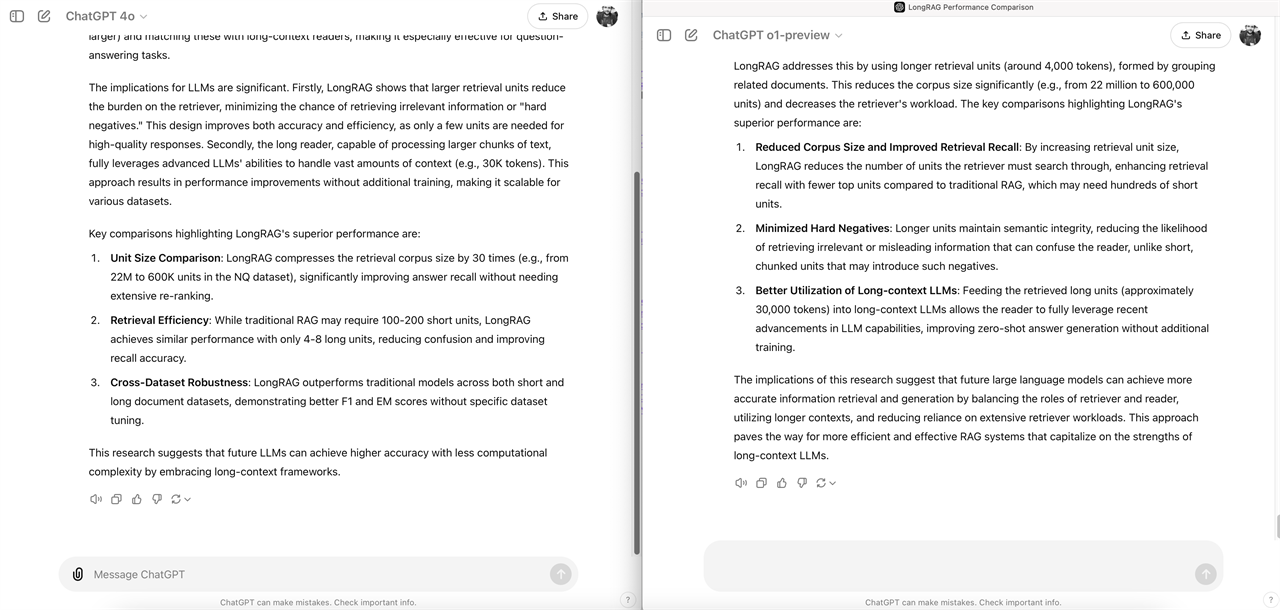

Photo: ChatGPT/Matthew Smith Summarization from GPT-4o on the left, from o1-preview on the right

Now, this is a complex topic, so seeing the difference might be tough at a glance. In my opinion, though, o1-preview’s summary is miles better. GPT-4o includes some highlights, but on closer inspection they are vague, and the single-sentence conclusion feels rushed. I also think o1-preview presents the points in a more logical arrangement that lets the reader follow from one point to the next, while the information in GPT-4o feels scattershot.

Local vs. cloud: o1-preview and Llama 3.1-8B

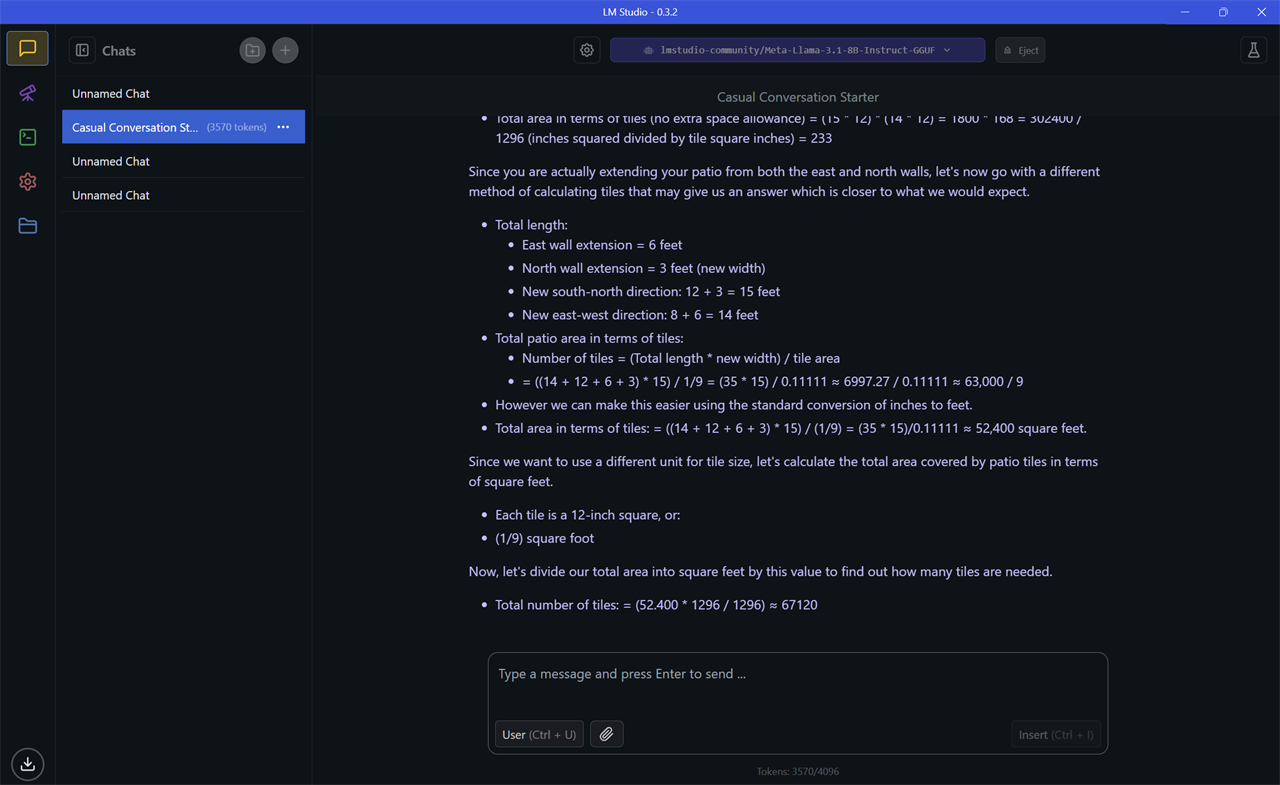

So, can more basic models that run locally on your PC perform nearly as well as o1-preview if you prompt them to work through problems? In theory, maybe...but in practice, not yet.

I loaded Llama 3.1-8B on my Microsoft Surface Laptop with the Qualcomm Snapdragon X processor and gave it the prompt for the shed problem. It failed.

So, I tried to handle the problem with prompts leading the model in the right direction. Unfortunately, this led to some wild calculations. In one instance, Llama 3.1-8B calculated I would need over 60,000 concrete tiles, an error caused in part by a chain of decisions that converted the entire problem to square inches.

Of course, Llama 3.1-8B doesn’t have whatever “secret sauce” OpenAI has used. Also, OpenAI offers a faster version of o1-preview called o1-mini. While I expect it remains a rather large model, it hints that smaller models can also benefit from OpenAI’s implementation of chain-of-thought reasoning. Perhaps we’ll see Meta release a “Llama 3-CoT” with its own spin on the idea.

The limitations of o1-preview

What “reasoning” means for an AI model is up for debate, but the reality is this: o1-preview can solve problems GPT-4o couldn’t, and better handles problems GPT-4o struggled with. But it’s not perfect.

OpenAI released o1-preview without many features available in GPT-4o. You can’t upload documents or images to o1-preview. You can’t use o1-preview with the new Advanced Voice Mode. The o1-preview model is not available alongside the “Create a GPT” feature. And web browsing is not available.

Tasks like writing, outlining, and brainstorming rely more on subjective opinion and creativity than reasoning, so chain-of-thought doesn’t offer much improvement. And if you access it through the developer API, you’ll find o1-preview’s API fees are three times higher than GPT-4o’s.

Buckle up: AI models will just get smarter from here

I think o1-preview is, if anything, a bit under-hyped. Much of the coverage focuses on either its ability to solve riddles (clever, but not that useful) or wonders at its “dangerous” implications for humanity.

This overlooks a more basic fact: o1-preview is just flat-out better at reasoning through complex problems than prior LLMs, which makes it more useful than before. Though o1-preview has limitations, it shows how AI can now tackle problems that once evaded it.

Read more: AI Tools and Tips

- Getting started with LM Studio: A Beginner's Guide

- Meet Claude, the Best AI You've Never Heard of

- Hands-on with the Faster, Smarter ChatGPT-4o AI

- How to Get NVIDIA Chat with RTX: Local AI for Everyone

- Microsoft Surface Laptop Review: The First Copilot Plus PC

- What is TOPS? The AI Performance Metric Explained
