How to Get NVIDIA Chat RTX: Local AI for Everyone

Image: NVIDIA

It's been possible to run AI models locally for some time now, but it hasn't always been easy. NVIDIA's take, called Chat RTX, makes the process fairly simple, as long as you have a GeForce RTX 30 Series or 40 Series GPU.

What is Chat RTX?

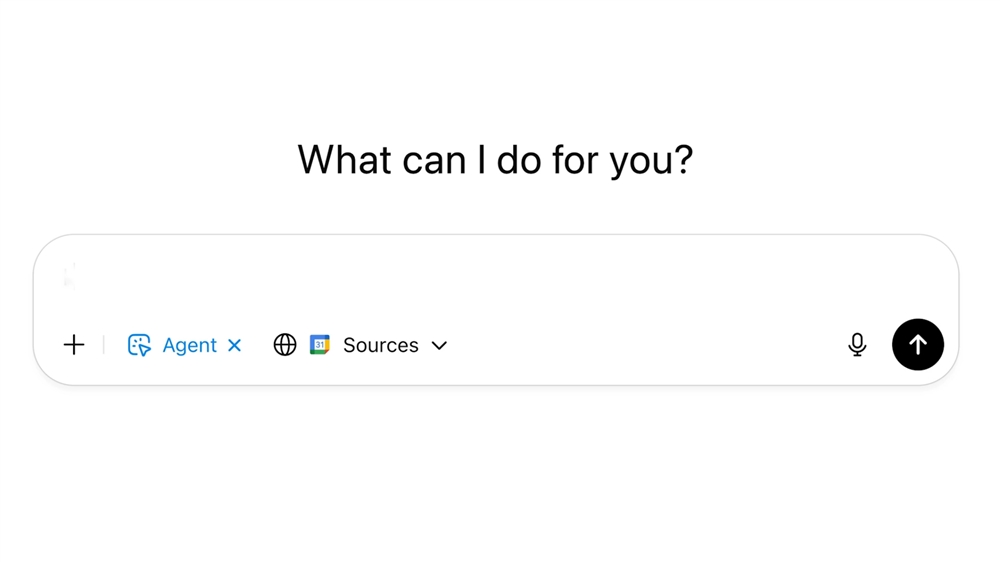

Chat RTX (originally called Chat with RTX) is essentially a tech demo that lets owners of recent RTX GPUs build a personalized GPT-style chatbot. The application runs locally on your Windows PC and uses the Tensor cores built into your GPU.

What sets Chat RTX apart from cloud-based AI tools is that it's built to work with the content stored on your PC. You get the power of a large language model (LLM), with models such as Mistral and Llama 2 supported, plus the privacy of keeping your documents and queries on your own machine rather than uploading them to be processed on a remote cloud server. Programs like LM Studio similarly run on your local hardware, assuming your PC has the horsepower for it.

Practical Uses of Chat RTX

By connecting the chatbot to local files, you can have in-depth conversations about all the files in a particular folder or set of folders. Just point the app's folder browser to your preferred directory, and it can read, analyze, and answer questions about any .txt, .pdf, .doc/.docx, and .xml files it finds there.

For example, I used it to produce a detailed summary and notes from a YouTube video transcript, and to quickly cross-reference data from a large spreadsheet, making inferences that would take a person much longer to work out. Think of it as a conversational interface for your data.

How to Install and Run Chat RTX

Before you begin, make sure your system has a GeForce RTX 30 Series or 40 Series GPU (or newer) with at least 8GB of VRAM. You'll also need Windows 11, NVIDIA driver version 535.11 or later, and at least 16GB of RAM. Plan on at least 30GB of free storage for the initial download; once the app is installed and running, it needs closer to 100GB.
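If you want a quick pre-flight checklist, the requirements above can be expressed as a small script. This is a hypothetical helper, not part of Chat RTX or any NVIDIA tool: the names `SystemSpec` and `check_chat_rtx_requirements` are made up for illustration, you fill in your own specs by hand, and the thresholds are simply the figures listed in this article.

```python
from dataclasses import dataclass

# Minimums as stated in this article (hypothetical checker, not queried from NVIDIA).
MIN_DRIVER = (535, 11)
MIN_VRAM_GB = 8
MIN_RAM_GB = 16
MIN_DISK_GB = 100  # ~30GB for the download, closer to 100GB once installed


@dataclass
class SystemSpec:
    """Hypothetical description of a PC; fill in the values by hand."""
    gpu_series: int       # e.g. 30 for an RTX 3070, 40 for an RTX 4090
    vram_gb: int
    ram_gb: int
    free_disk_gb: int
    driver_version: str   # e.g. "551.23"
    windows_version: int  # e.g. 11


def parse_driver(version: str) -> tuple[int, ...]:
    """Turn a driver string like '535.11' into (535, 11) for numeric comparison."""
    return tuple(int(part) for part in version.split("."))


def check_chat_rtx_requirements(spec: SystemSpec) -> list[str]:
    """Return a list of unmet requirements; an empty list means every check passes."""
    problems = []
    if spec.gpu_series < 30:
        problems.append("GPU: needs a GeForce RTX 30 Series or newer")
    if spec.vram_gb < MIN_VRAM_GB:
        problems.append(f"VRAM: needs at least {MIN_VRAM_GB}GB")
    if spec.ram_gb < MIN_RAM_GB:
        problems.append(f"RAM: needs at least {MIN_RAM_GB}GB")
    if spec.free_disk_gb < MIN_DISK_GB:
        problems.append(f"Disk: plan for about {MIN_DISK_GB}GB free")
    # Tuple comparison handles versions numerically, so 551.23 > 535.11.
    if parse_driver(spec.driver_version) < MIN_DRIVER:
        problems.append("Driver: needs version 535.11 or later")
    if spec.windows_version < 11:
        problems.append("OS: needs Windows 11")
    return problems


pc = SystemSpec(gpu_series=40, vram_gb=12, ram_gb=32, free_disk_gb=250,
                driver_version="551.23", windows_version=11)
print(check_chat_rtx_requirements(pc))  # → []
```

Comparing the driver version as a tuple of integers rather than as a string avoids mistakes like treating "6xx" versions and "5xx" versions alphabetically.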

Head over to NVIDIA's official website to download the Chat RTX application, which is free. To avoid issues, stick with the default recommended installation path for now.

After installation, you can start personalizing your chatbot by directing the application to the folders containing your data, including .txt, .pdf, .doc/.docx, and .xml files.
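Before pointing Chat RTX at a folder, it can help to preview which files it will actually be able to read. The sketch below is a hypothetical helper, not how Chat RTX itself indexes content: `find_supported_files` is a name made up for illustration, the extension list is the one given in this article, and it assumes subfolders are included (if they aren't in your setup, swap `rglob` for `glob`).

```python
from pathlib import Path

# File types this article says Chat RTX can read.
SUPPORTED_EXTENSIONS = {".txt", ".pdf", ".doc", ".docx", ".xml"}


def find_supported_files(folder: str) -> list[Path]:
    """Recursively list files under `folder` whose extension is supported.

    Extensions are compared case-insensitively, so 'Report.PDF' counts.
    Assumption: subfolders are scanned too.
    """
    root = Path(folder)
    return sorted(
        p for p in root.rglob("*")
        if p.is_file() and p.suffix.lower() in SUPPORTED_EXTENSIONS
    )
```

For example, `find_supported_files(r"C:\Users\you\Documents")` returns the matching paths; anything it skips, such as images or spreadsheets in other formats, won't be part of the chatbot's answers.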

Cloud-based apps will always have issues with speed, uptime, and privacy, so I'm excited to see a bigger push toward local AI this early in the current AI age. With AI-focused hardware arriving this year from so many manufacturers, it's a trend that will only grow.

Editor's note: This article was originally published March 12, 2024 and has been updated.

Micro Center Editor-in-Chief Dan Ackerman is a veteran tech reporter and has served as Editor-in-Chief of Gizmodo and Editorial Director at CNET. He's been testing and reviewing laptops and other consumer tech for almost 20 years and is the author of The Tetris Effect, a Cold War history of the world's most influential video game. Contact Dan at dackerman@microcenter.com.