Getting Started with Ollama: Local AI for Everyone

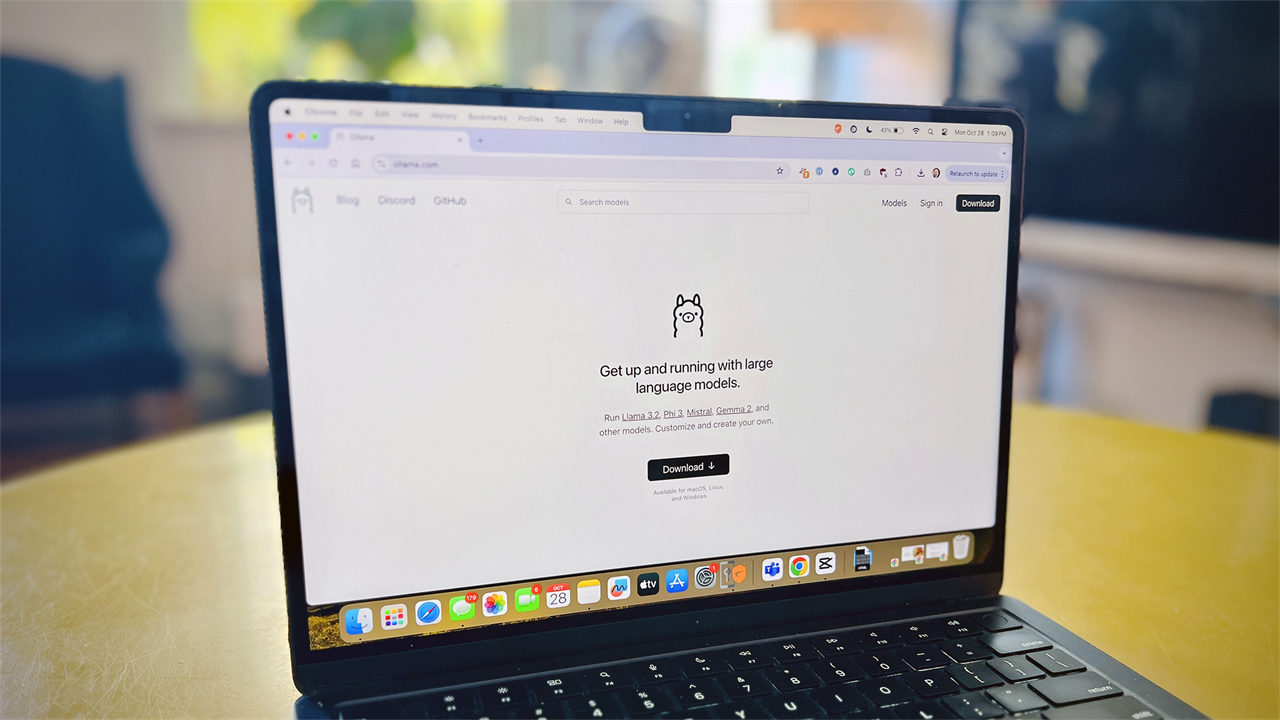

This open-source system can download and run many LLMs with just a couple of clicks.

It's easy to think of AI as an online tool that other people create and you just use, like an off-the-shelf consumer product. But to take advantage of the latest AI developments, you really should be able to run, manage, and create your own AI systems. With a little help, it's not complicated to run a Large Language Model (LLM) on your own computer, or even to create cool tools like your own voice assistant or custom AI models.

Photo: Richard Baguley

We can do this because AI is really very simple: take an input and create something based on it from a series of rules. The set of rules is called a model, and it can range from a handful of rules to the billions built into a model such as GPT-4o. The trick that makes these models revolutionary is that the rules are not rigid: if there is no rule for a specific input, the model can guess based on the rules it has. In effect, it can improvise, which gives the illusion of intelligence.
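To make the idea of rules plus guessing concrete, here is a toy sketch in Python. It is only an illustration: the three rules and the `respond` function are made up for this example, and a real LLM learns billions of numeric weights rather than storing a lookup table. The guessing step uses the standard library's `difflib` to pick the closest known input.

```python
import difflib

# A toy "model": a handful of hand-written rules mapping inputs to outputs.
# These rules are invented for illustration; real models learn theirs from data.
RULES = {
    "hello": "Hi there!",
    "goodbye": "See you later!",
    "thanks": "You're welcome!",
}


def respond(prompt: str) -> str:
    """Return the matching rule's output, guessing from the closest rule if none matches."""
    key = prompt.lower().strip()
    if key in RULES:
        return RULES[key]
    # No exact rule: improvise by picking the most similar known input.
    # cutoff=0.0 means we always guess something, however poor the match.
    guesses = difflib.get_close_matches(key, list(RULES), n=1, cutoff=0.0)
    return RULES[guesses[0]]
```

Calling `respond("helo")` has no exact rule to fall back on, so it guesses that you meant "hello" and answers "Hi there!". That is the improvisation described above, in miniature.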

That’s the secret sauce of AI: the ability to guess. Models like GPT-4o do this guessing based on billions of rules derived from millions of sources. Companies like OpenAI keep these models secret: you can use them, but you can’t download them yourself and poke around with them. Fortunately, there are plenty of people who disagree with this proprietary approach, and companies like Mistral AI and, to a lesser extent, Meta and Microsoft, have open-sourced their models so you can download and run them yourself.

To run an LLM on your PC, we are going to use Ollama. This is an open-source system that allows you to download and run many LLMs with a couple of clicks: it handles all of the behind-the-scenes stuff. It’s available for macOS, Linux, and Windows. Ollama will run on any Mac with Apple Silicon and most PCs with a discrete GPU. We've covered similar tools, like LM Studio, previously.

Open the pod bay doors please, Ollama

First, install Ollama by downloading the app and running the installer. You don’t have to create an account at Ollama to use the app: that is only required to publish models. Once Ollama is installed, you’ll get a notification to click to start, and a terminal window will open. Don’t worry about having to use a terminal window: we will add a web interface soon. For now, let’s follow the program’s own suggestion to download and run the Llama3 LLM, created by Meta, the parent company of Facebook, by typing this into the terminal window:

ollama run llama3

Hit return and Ollama will download and set up everything that is needed to run Llama3. This will take a few minutes and show a series of messages ending with a prompt for you to type something in. Let’s be polite and say hello by typing that into the terminal window.

And there we go: you are talking to a sophisticated large language model that can chat, answer questions or perform many other tasks. Ask it anything!
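The terminal chat is not the only way in. While Ollama is running, it also listens for requests on your own machine, so a short script can ask the same questions. The sketch below assumes Ollama's default local address (`http://localhost:11434`) and its `/api/generate` endpoint; the function names `build_request` and `ask` are my own, and nothing here leaves your computer.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(model: str, prompt: str) -> urllib.request.Request:
    """Package a prompt as a JSON POST request for the local Ollama server."""
    # "stream": False asks for one complete reply instead of word-by-word chunks.
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(model: str, prompt: str) -> str:
    """Send the prompt to the model and return the text of its reply."""
    with urllib.request.urlopen(build_request(model, prompt)) as reply:
        return json.loads(reply.read())["response"]

# Example (needs Ollama running and the model already downloaded):
#   print(ask("llama3", "hello"))
```

This uses only Python's standard library, so there is nothing extra to install.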

Remember that an LLM can still get things wrong and sometimes make things up if it doesn’t know the answer, which experts call AI hallucination. For a great example, I tried asking this:

What is the world record for walking across the English Channel?

That’s an impossible question to answer because the English Channel is the stretch of sea between England and France: you could no more walk across it than you could swim from Boston to Denver. Despite this, Llama3 gives us an answer, plus a warning that it is a dangerous thing to do.

Next, let’s try a different LLM to see how the results differ. These commands will exit the chat with Llama3 and load another model, called Mistral, from Mistral AI. Type the commands below, hitting return after each one and waiting for the prompt to return.

/bye

ollama run mistral

What is the world record for walking across the English Channel?

This model gives a far more reasonable answer to the question, although it still seems slightly confused about how you would actually walk across a large body of water. Ask it whatever questions you want.
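If you want to compare models side by side without retyping the question, a small script can do the asking for you. This sketch relies on the fact that `ollama run` answers once and exits when you give it the prompt as an extra argument. The helper names `build_command` and `compare` are mine, and it assumes both models are already downloaded.

```python
import subprocess

MODELS = ["llama3", "mistral"]  # the two models used in this article
QUESTION = "What is the world record for walking across the English Channel?"


def build_command(model: str, prompt: str) -> list[str]:
    """Build a one-shot `ollama run` command that answers the prompt and exits."""
    return ["ollama", "run", model, prompt]


def compare(models: list[str], prompt: str) -> dict[str, str]:
    """Ask every model the same question and collect the answers by model name."""
    answers = {}
    for model in models:
        result = subprocess.run(
            build_command(model, prompt),
            capture_output=True,  # keep the answer instead of printing it
            text=True,            # decode the output as text
            check=True,           # raise an error if ollama fails
        )
        answers[model] = result.stdout.strip()
    return answers

# Example (needs Ollama installed and both models downloaded):
#   for name, answer in compare(MODELS, QUESTION).items():
#       print(f"--- {name} ---\n{answer}\n")
```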

Ollama isn’t limited to chatting in text, though: the input you give it can be text, audio, or even an image. Let’s load LLaVA (Large Language and Vision Assistant), a model designed to process images, then ask it to grab an image from the Internet and describe it. Again, type these commands into the terminal, hit Enter after each one, and wait for the prompt to return before you start on the next. NOTE: The second command is a single line, reading “what’s in this image? https://upload…”.

ollama run llava

what's in this image? https://upload.wikimedia.org/wikipedia/commons/0/05/Ansel_Adams_and_camera.jpg

The model interprets the prompt, then grabs the image from the web and describes it to you, identifying it as an image of the famous photographer Ansel Adams. This model can also find text in an image and write a caption for the image, or even a haiku about it…

Nature's light and shadow

Cameras snap the scene

Ansel captures all.

Not bad. Try it on your photos!
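For a folder full of your own photos, a script may be handier than typing into the terminal. The sketch below sends a local image file to LLaVA through Ollama's local `/api/generate` endpoint, which accepts images as base64-encoded text in an `images` list. It assumes Ollama is running on its default port; the function names and the example file name are hypothetical.

```python
import base64
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_image_payload(model: str, prompt: str, image_bytes: bytes) -> dict:
    """Bundle a prompt and one image into the JSON body Ollama expects."""
    # Images travel inside the JSON as base64 text rather than raw bytes.
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {"model": model, "prompt": prompt, "images": [encoded], "stream": False}


def describe_image(path: str, prompt: str = "what's in this image?",
                   model: str = "llava") -> str:
    """Read a local image file and return the model's description of it."""
    with open(path, "rb") as image_file:
        payload = build_image_payload(model, prompt, image_file.read())
    request = urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as reply:
        return json.loads(reply.read())["response"]

# Example (needs Ollama running and the llava model downloaded):
#   print(describe_image("holiday.jpg", prompt="write a haiku about this image"))
```

Because the image is read from your disk and sent only to the local server, the photo never leaves your computer.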

I’m sorry Richard, I can’t do that

At this point, you may be asking what the point is: you can get similar results by just asking Google, Microsoft, or OpenAI to do the same thing. The difference is that everything I have done here has been done on my computer, without sharing my data, my prompts, or my fondness for Ansel Adams with Google, Microsoft, or OpenAI. If I run the AI model locally, that data is not shared. Plus, I can tweak, change, and chop the AI model around as much as I want: I am not beholden to some secret AI model controlled by a corporation.

In the next installment in this series, I’ll show you how powerful this can be by getting deeper into hacking Ollama to create a private voice assistant that responds to commands. After that, I’ll show you how easy it is to edit machine learning models and to create your own to truly tap into the power of AI, all on your local computer, without sharing any of your data online.

Richard Baguley is a seasoned technology journalist and editor passionate about unraveling the complexities of the digital world. With over three decades of experience, he has established himself as a leading authority on consumer electronics, emerging technologies, and the intersection of tech and society. Richard has contributed to numerous prestigious publications, including PCMag, TechRadar, Wired, and CNET.