Meet Gemma, The Best Local LLM You’ve Never Heard Of

Google has built a surprisingly powerful lineup of AI models, some of which you can run locally on your own PC for free.

If you’re not exploring the world of open source AI, you’re missing out on one of the biggest trends in the AI field today. And one of the best models to dive into comes from a surprising place: Google.

Yes, Google is a powerhouse in AI research, and it was Google researchers who created the transformer architecture that makes large language models possible. But the company’s first attempts to build useful consumer AI tools were a mixed bag. The reveal of Google Bard included demos that produced incorrect answers. Google’s AI search, meanwhile, got off to a rough start, telling people to glue cheese to their pizza.

If that made you write off Google’s AI, I don’t blame you. But it’s time to reconsider. Google has a fleet of impressive large language and image generation models, many of which beat the alternatives from OpenAI and Meta.

Gemma, the best open source LLM you’ve never heard of

Gemma, first released in February of 2024, is Google’s (mostly) open source model. The model is available for anyone to download, and you can run it on your PC free of charge for as long as you’d like. But Google released Gemma under its own license, the Gemma Terms of Use, rather than a standard open source license, so there’s some debate about how open source it actually is.

For our purposes, Gemma is close enough to be considered open, and it's also good. Really good.

The latest and largest version, Gemma 3 with 27 billion parameters (known as Gemma-3-27B-it), currently ranks 13th on LMArena. That’s the third-highest rating for an open model, behind only DeepSeek’s V3 and R1. It’s also the highest-rated model you can expect to run on consumer laptop or desktop hardware.

That’s not to say you can fire it up on a netbook. Gemma 3 27B requires a super high-end video card with plenty of memory, such as one of the top cards in Nvidia’s RTX 50-series. Alternatively, you could run it on a desktop with a very high-end CPU and at least 64GB of RAM.

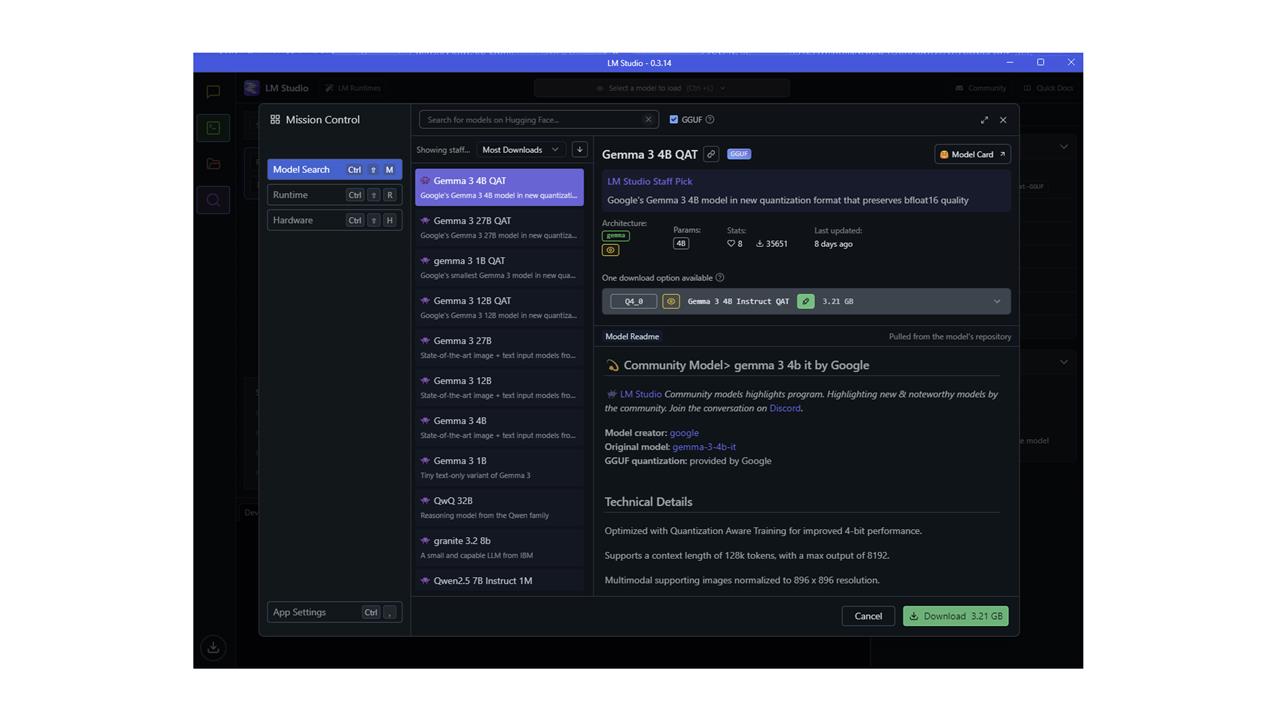

Screenshot: Matt Smith


Fortunately, Gemma 3 is also available with 1, 4, or 12 billion parameters. These don’t perform as well as the largest model but are still top-ranked for their size. You can also find “quantized” versions of Gemma 3 27B (which store the model’s weights at lower precision) that can load on a PC with less than 24GB of RAM.
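Why does the 27B model need so much memory, and why does quantization help? A rough rule is that a model’s weights occupy about (parameter count) × (bits per weight ÷ 8) bytes. The sketch below applies that rule to Gemma 3’s four sizes. It is a back-of-the-envelope estimate under stated assumptions: it counts weights only (real usage is higher once you add the context cache and runtime overhead), and the 16-, 8-, and 4-bit formats are common choices for local models, not figures from Google.

```python
# Back-of-the-envelope estimate of the memory needed just to hold a model's
# weights. Assumption: weights only; real usage is higher because of the
# context (KV) cache, activations, and runtime overhead.

GEMMA3_SIZES_B = [1, 4, 12, 27]  # Gemma 3 sizes, in billions of parameters

# Bits per weight for a few common storage formats (illustrative choices).
PRECISIONS = {"16-bit": 16, "8-bit": 8, "4-bit": 4}


def weight_memory_gb(params_billions: float, bits_per_weight: int) -> float:
    """Approximate weight memory in GB (1 GB = 1e9 bytes).

    billions of params * (bits / 8) bytes per param = billions of bytes = GB
    """
    return params_billions * bits_per_weight / 8


if __name__ == "__main__":
    for size in GEMMA3_SIZES_B:
        row = ", ".join(
            f"{name}: {weight_memory_gb(size, bits):.1f} GB"
            for name, bits in PRECISIONS.items()
        )
        print(f"Gemma 3 {size}B -> {row}")
```

At 16 bits per weight, the 27B model’s weights alone come to about 54 GB, which explains the appetite for a big video card or lots of system RAM. At 4 bits, the same weights shrink to roughly 13.5 GB, which is how quantized versions squeeze under 24GB.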

A year ago, Meta’s Llama 3 was the go-to recommendation for people looking to run an LLM on a consumer laptop. But Meta’s rough launch of Llama 4 cleared the path for Gemma, which is now the top dog among small, open models.

Gemini 2.5 steals the spotlight

Gemma is great, but if you’ve never heard of it, I wouldn’t be surprised. It often finds itself in the shadow of Google’s closed model, Gemini (the fact that their names are similar doesn’t help, either). Plus, Gemini performs even better than its open cousin.

Announced on March 25, 2025, Gemini 2.5 is a “reasoning model” like OpenAI’s o1 and o3, or DeepSeek R1 (although Google chooses to call it a “thinking model”). The model works through a self-prompted chain of thought to navigate tough logical problems.

How good is it? Very good. Arguably the best, depending on who you ask. Gemini 2.5 Pro is still the number one model on LMArena, just ahead of OpenAI’s newly released o3 and Grok 3 Preview. On tough benchmarks like Humanity’s Last Exam and SimpleBench, it’s currently ranked second-best, behind OpenAI o3, and third-best on LiveCodeBench, behind OpenAI’s o4 and o4-mini.

And here’s the real kicker. Gemini 2.5 Pro is currently free to use on the web without significant rate limits.

There are two ways you can access it.

Google’s Gemini app and website are the user-friendly way to use Gemini 2.5 Pro, and best for quick tasks. However, you will hit rate limits that cut you off after a few questions (unless you subscribe to Gemini Advanced).

That’s not a big deal, though, because there’s a way to use Gemini 2.5 Pro for free without rate limits.

Just visit Google’s AI Studio. Built to show off Google’s models to developers, it provides 100% free access to Gemini 2.5 Pro with a context window of one million tokens (enough to fit about 1,500 pages of single-spaced text).
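The one-million-token figure only translates to pages roughly, because the number of words per token depends on the tokenizer and the text. The sketch below reproduces the 1,500-page estimate under two rule-of-thumb assumptions of ours (not Google’s): about 0.75 English words per token and about 500 words on a single-spaced page.

```python
# Rough conversion from a context window size (in tokens) to pages of text.
# Assumptions (rules of thumb, not official figures):
#   ~0.75 English words per token, ~500 words per single-spaced page.

WORDS_PER_TOKEN = 0.75
WORDS_PER_PAGE = 500


def tokens_to_pages(tokens: int) -> float:
    """Estimate how many single-spaced pages fit in `tokens` tokens."""
    words = tokens * WORDS_PER_TOKEN
    return words / WORDS_PER_PAGE


if __name__ == "__main__":
    context_window = 1_000_000  # Gemini 2.5 Pro's one-million-token window
    pages = tokens_to_pages(context_window)
    print(f"{context_window:,} tokens is roughly {pages:,.0f} pages")
```

Swapping in different assumptions (say, denser pages or a tokenizer that splits words more finely) changes the answer proportionally, so the page count is best read as a ballpark.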

Seriously. 100% free.

And I’ve hit it hard.

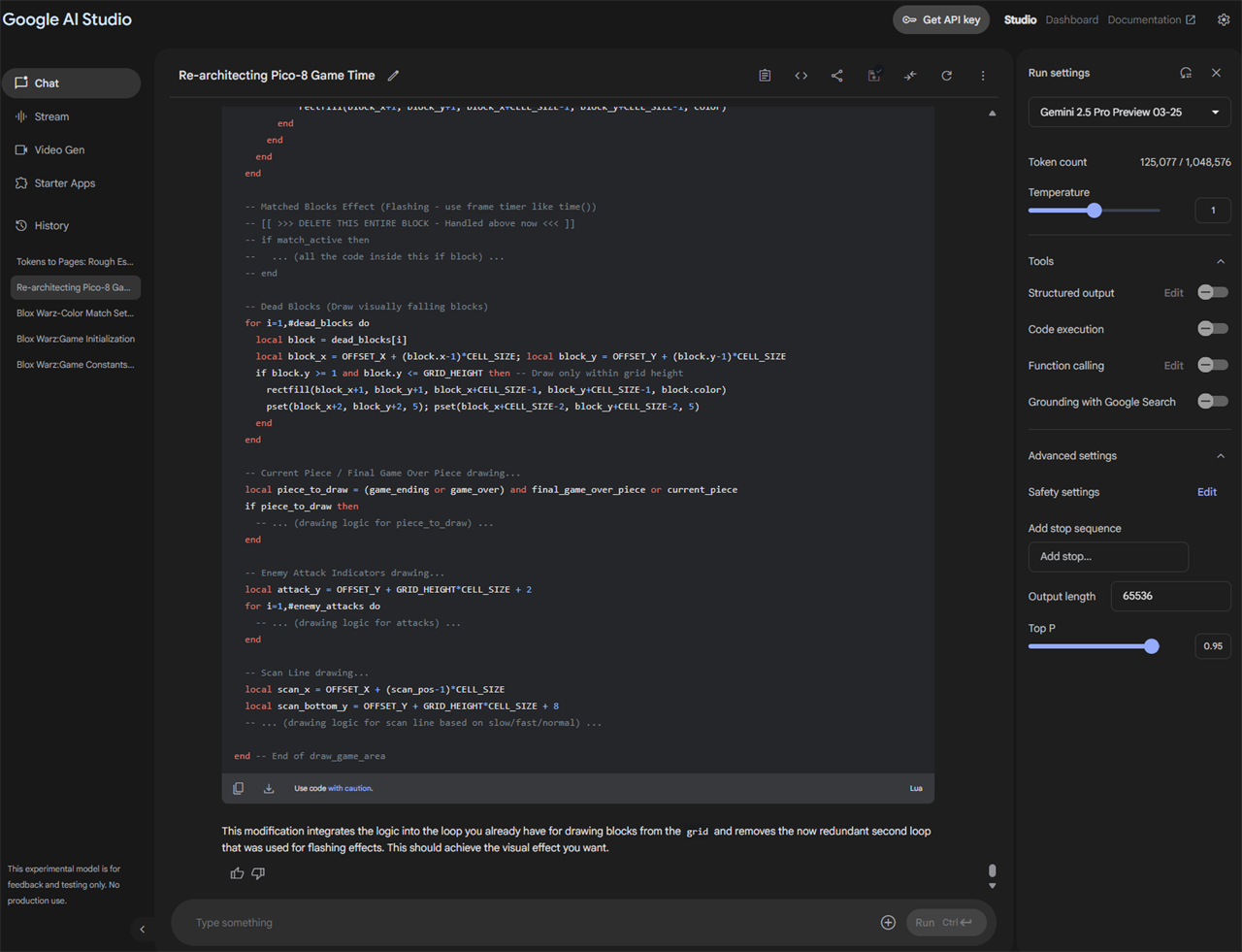

Screenshot: Matthew Smith

For example, I’ve had some fun “vibe coding” a Tetris-like puzzle game for the Pico-8 fantasy console. As an experiment, I wanted to change the game from real-time to a pseudo turn-based game where time only passes when the player moves a puzzle piece.
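The change boils down to what advances the game clock. Below is a minimal, hypothetical Python sketch, not the actual game (Pico-8 carts are written in Lua): in real-time mode, a frame counter drops the piece one row every `FRAMES_PER_DROP` frames whether or not the player acts, while in turn-based mode the same gravity step runs only after the player moves the piece. `Game`, `Piece`, `update`, `apply_gravity`, and `FRAMES_PER_DROP` are invented names for illustration.

```python
from dataclasses import dataclass

# Hypothetical value: in real-time mode the piece falls one row every 30 frames.
FRAMES_PER_DROP = 30


@dataclass
class Piece:
    x: int = 4  # column of the falling piece
    y: int = 0  # row of the falling piece (grows downward)


class Game:
    def __init__(self, turn_based: bool) -> None:
        self.turn_based = turn_based
        self.piece = Piece()
        self.frame_counter = 0

    def apply_gravity(self) -> None:
        # One "tick" of game time: the active piece drops one row.
        self.piece.y += 1

    def update(self, player_move: int) -> None:
        """Called once per frame; player_move is -1 (left), 1 (right), or 0 (no input)."""
        if player_move:
            self.piece.x += player_move

        if self.turn_based:
            # Turn-based: time only passes when the player moves the piece.
            if player_move:
                self.apply_gravity()
        else:
            # Real-time: time passes every frame, whether or not the player acts.
            self.frame_counter += 1
            if self.frame_counter % FRAMES_PER_DROP == 0:
                self.apply_gravity()


if __name__ == "__main__":
    for turn_based in (False, True):
        game = Game(turn_based=turn_based)
        for _ in range(60):  # 60 frames with no player input
            game.update(player_move=0)
        label = "turn-based" if turn_based else "real-time"
        print(label, "-> piece is on row", game.piece.y)
```

After 60 idle frames, the real-time piece has fallen two rows while the turn-based piece hasn’t moved. Routing both modes through a single gravity step is one way to flip the rule without rewriting the rest of the game logic.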

Rather than try to do it myself, I threw my game’s Pico-8 file (1,600 lines of code) into Gemini 2.5 Pro and told it to make the change.

The result was incredible. The game had one syntax error that was easily resolved. Otherwise, there were a few oddities in gameplay because of the huge shift in game mechanics, but it was completely playable.

Gemini 2.5 Pro is so good that it feels like someone at Google messed up and accidentally made it free. Of course, it wasn’t an accident. It really is free.

Google catches up in image and video generation, too

Google’s image and video models are called Imagen and Veo. Neither hits the heights of Gemma and Gemini, but they’re solid.

Imagen is available in Gemini via a web browser or the app and works much like image generation in ChatGPT or Grok. Gemini will automatically detect when a prompt should be fulfilled by generating an image. It’s free and the rate limits are high. Alternatively, you can access Imagen through Google’s ImageFX. It’s free, too, and can generate four images at once.

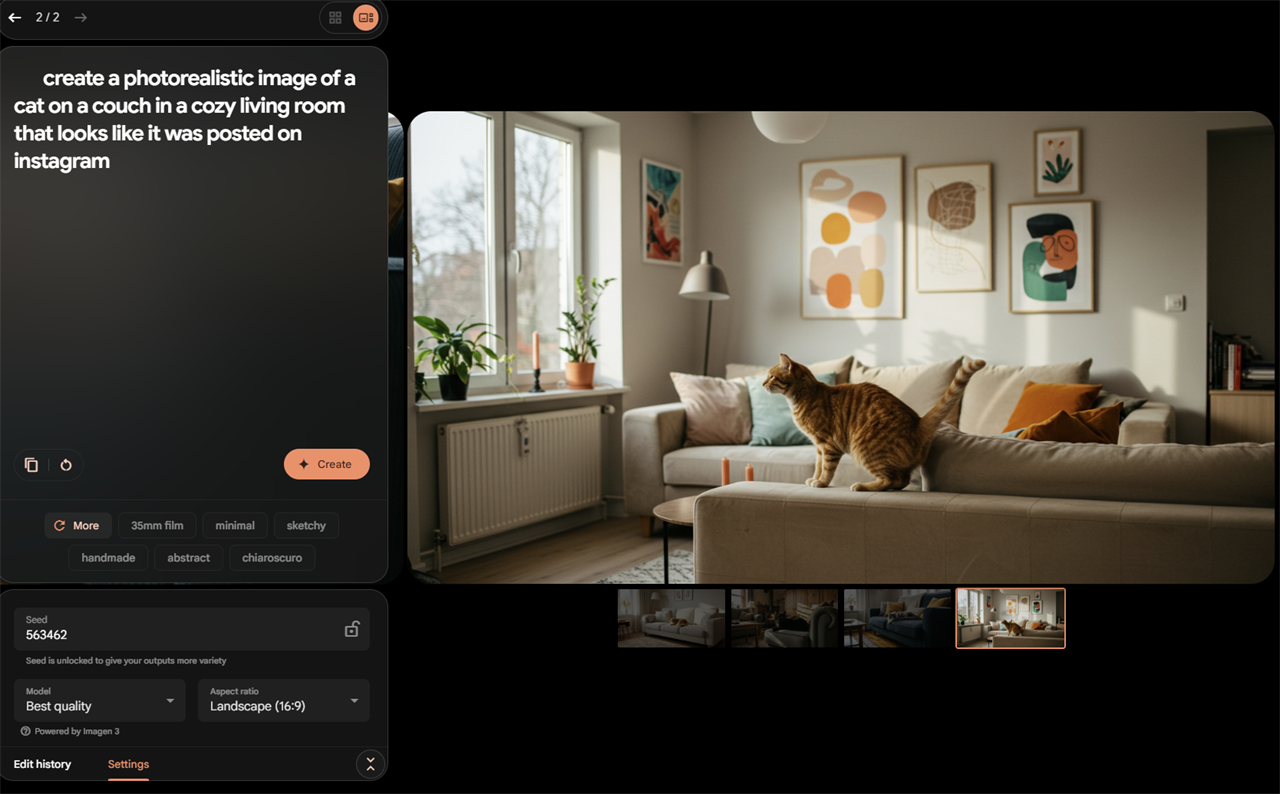

Screenshot: Matthew Smith

Compared to competitors, like OpenAI’s new 4o Image Generation, Google Imagen has one big strength and one big weakness.

The strength of Imagen is photorealism. AI-generated images are known to have a certain “sheen” or “plastic” quality. That’s not a problem for Imagen, which is shockingly good at photorealistic images.

However, Imagen isn’t nearly as good as OpenAI’s 4o Image Generation at rendering text or following instructions. As you can see in the image above, it gets the text on the most obvious sign correct, but the text on the other signs doesn’t make sense. There’s also an odd doodle on the sign.

Veo is basically Imagen, but for video, and it’s harder to access. The Gemini website and app will only generate video for Gemini Advanced subscribers and, even then, it’s limited to low-resolution clips no more than eight seconds long.

Swing by Google AI Studio (the same place that offers Gemini 2.5 Pro for free), though, and you’ll find Veo 2. And, yes, it’s free! However, it’s rate-limited (it stops me after five generations each day).

Strangely, Google also has a website for VideoFX, but it’s behind a waitlist. I applied to that waitlist months ago and was never granted entry. Given that Veo 2 is available through Google AI Studio, I kinda think Google forgot about the VideoFX website.

Veo 2’s quality is roughly on par with OpenAI’s Sora. It’s not bad at clips that don’t include a lot of movement, but it has trouble with fast action and realistic physics. Still, it’s impressive for a free tool.

What’s the catch?

OK, Google’s models look great. But perhaps you’re skeptical. If they’re so good, why does ChatGPT have a billion users, while Gemini is at just 350 million?

I think it’s the lack of a unified, easy-to-understand product. Google has Gemma (including some forks I didn’t get around to discussing), Gemini, Google AI Studio, Imagen via Gemini, Imagen via ImageFX, Veo via Gemini Advanced, Veo via Google AI Studio, and a landing page for VideoFX that seems abandoned. And that’s not all: I didn’t even mention Google’s excellent NotebookLM for summaries and faux podcasts, or Firebase Studio for prototyping apps with AI.

It’s a lot to wrap your head around. ChatGPT is a bit confusing, too, now that OpenAI has about a dozen models, but most of it is at least packed into the same interface.

However, if you’re willing to navigate between a few different sites, Google’s models provide most of the functionality of a ChatGPT Plus subscription, free of charge.


Matthew S. Smith is a prolific tech journalist, critic, product reviewer, and influencer from Portland, Oregon. Over 16 years covering tech, he has reviewed thousands of PC laptops, desktops, monitors, and other consumer gadgets. Matthew also hosts Computer Gaming Yesterday, a YouTube channel dedicated to retro PC gaming, and covers the latest artificial intelligence research for IEEE Spectrum.
