How I Turned Myself into an AI Video Clone for Under $50

My virtual twin can host videos, answer questions, and more -- and anyone can make one.

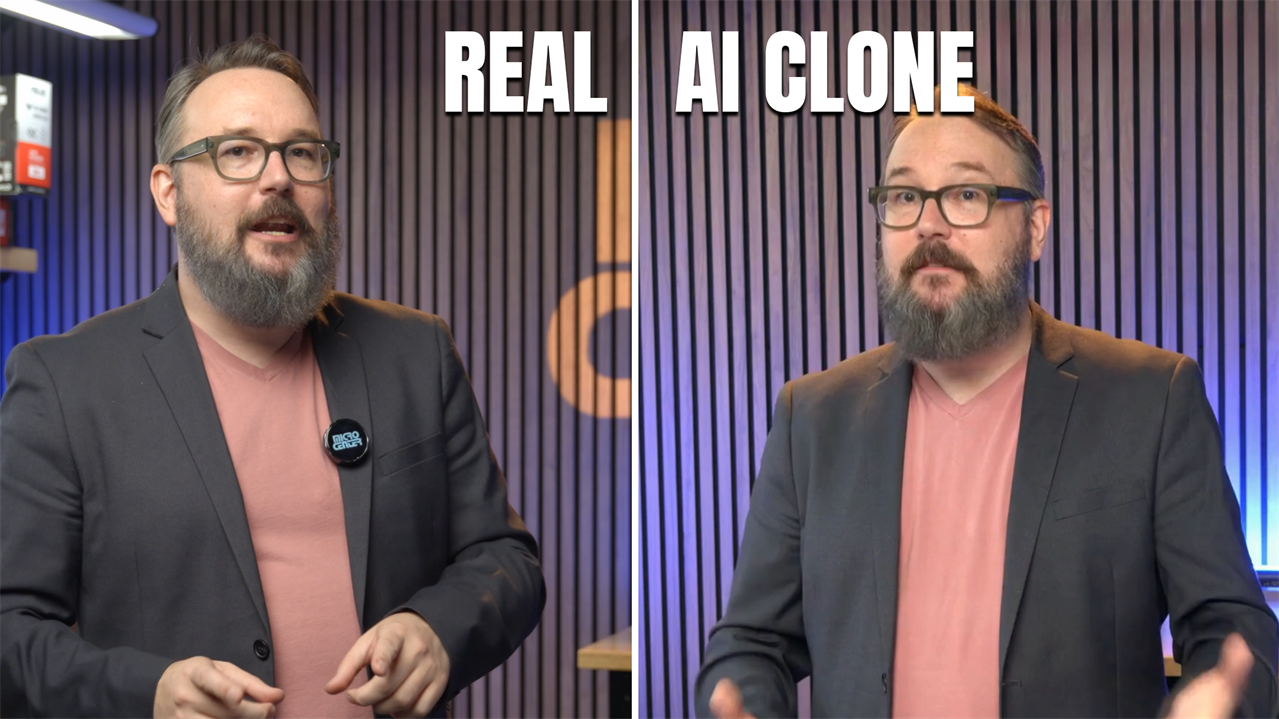

One of the most talked-about possibilities of the AI era is the ability to create virtual copies of ourselves, featuring both voice and likeness. Now, with a combination of high-powered PCs, AI software, and decent mics and cameras, you can create an AI video clone that copies your face and voice and can say just about anything you can type into a script. The kicker? You can get started for under fifty bucks.

As someone who is frequently in front of the camera, I’ve been intrigued by this idea for some time. Until recently, however, the available tools were either too complex, too costly, or just didn’t look and feel realistic enough. But now, after testing a few accessible options, I’ve figured out how to create a decent video clone on a budget.

Step 1: Recording source video and audio

To build this AI clone, start by capturing video and audio assets at the highest quality you can manage. For video, your smartphone’s rear camera set to 4K works well, and a DSLR or higher-end camera is even better. Lighting is key: Good natural light or professional studio lighting makes a huge difference in the quality of your clone.

About five minutes of good-quality video of you speaking is enough to get started. Keep in mind the video clone will be wearing the same clothes and be in front of the same background, although most video editing apps can isolate your clone from the background and make other adjustments.

For my voice, I gathered a mix of audio files ripped from my previous videos, the audiobook I narrated for my book, The Tetris Effect, and some fresh audio captured on my Logitech Yeti microphone. The cleaner the audio, the more natural the result, so invest some time in finding a quiet space for recording.

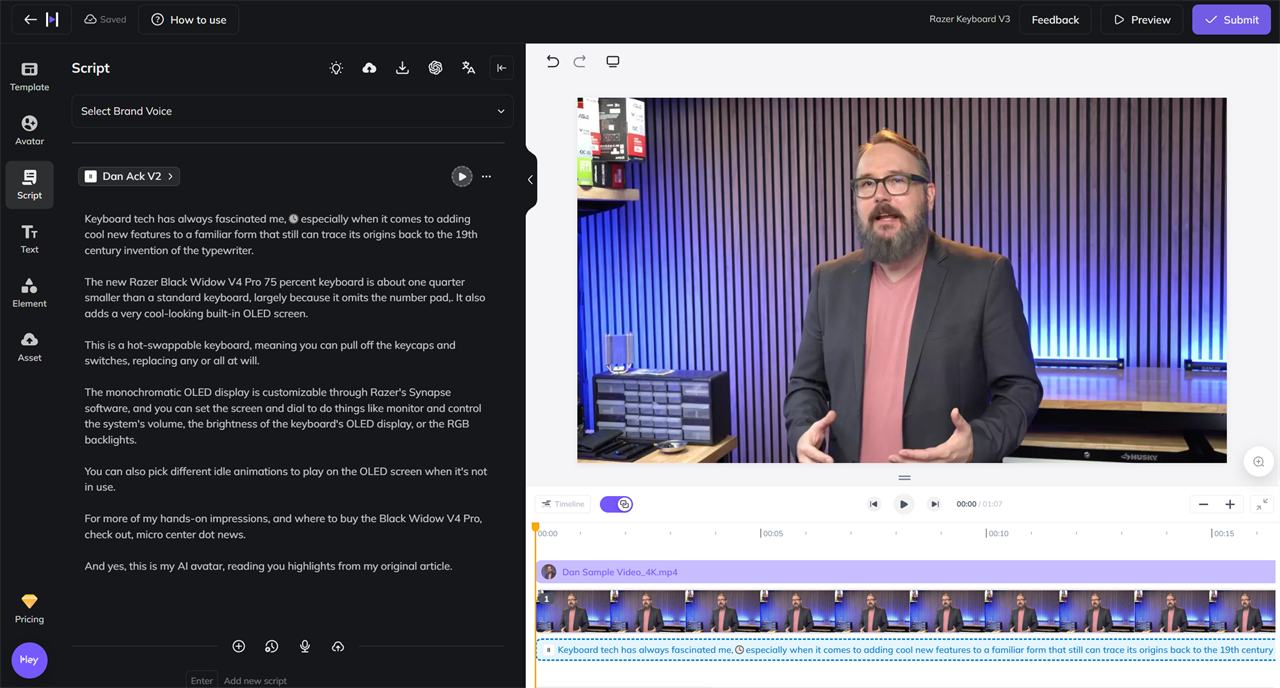

Step 2: Building the video clone with HeyGen

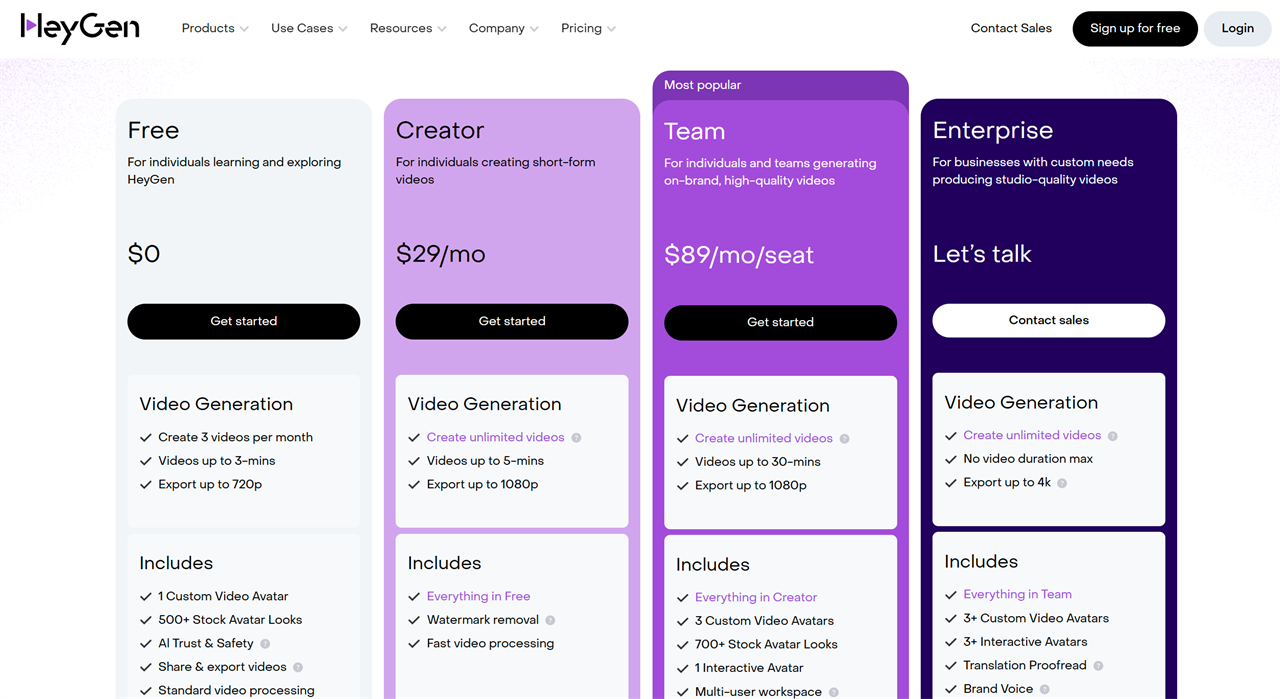

The video component of my AI clone comes from an online app called HeyGen. There's a free version that allows you to play around and even create a watermarked virtual AI avatar, but to create longer watermark-free videos that download at a higher quality, you'll need to subscribe to the $29/month plan.

With HeyGen, all you need is a clean, well-lit, high-resolution video of yourself talking. After capturing the video, I uploaded it to HeyGen, which analyzed my footage and created a video avatar from it.

Different recording conditions yield varied results. My first attempt, shot with a smartphone’s front camera and low lighting, produced an avatar that was passable. For the next attempt, I shot with the rear camera in front of a large window with good natural lighting. Finally, for this article, I shot my source video in the Micro Center video studio, with a professional camera and lighting setup.

Step 3: Cloning my voice with ElevenLabs

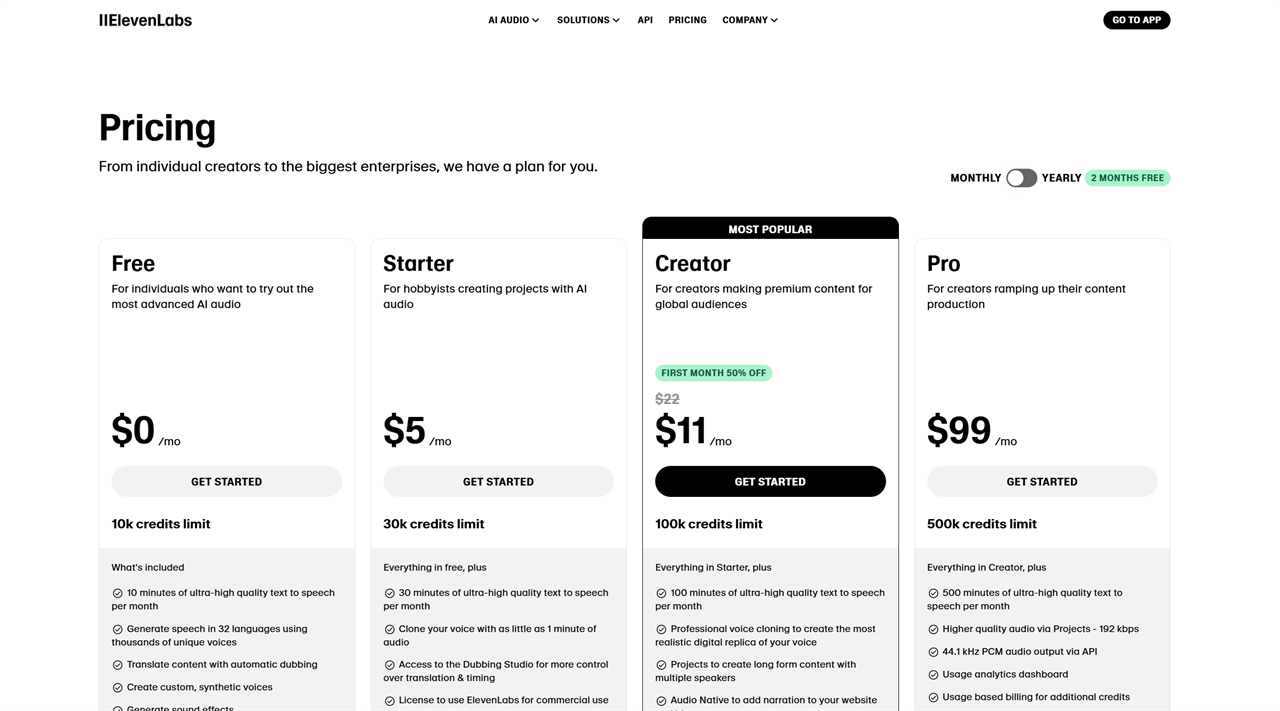

While HeyGen offers voice cloning based on your uploaded audio, the results are merely OK. To get a more natural-sounding voice, I subscribed to ElevenLabs, a top-tier voice cloning service. You can get started there for $5/month for 30 minutes of audio output or $11/month for 100 minutes at a higher bitrate.
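To check the "under $50" claim, here is a quick back-of-the-envelope sketch in Python. It uses only the monthly prices quoted in this article, which may have changed since; the variable and function names are my own.

```python
# Monthly prices quoted in this article (USD); check current pricing before budgeting.
HEYGEN_PLAN = 29
VOICE_PLANS = {"30 minutes": 5, "100 minutes": 11}
BUDGET = 50


def monthly_total(voice_plan: str) -> int:
    """Combined monthly cost of the HeyGen plan plus one voice cloning tier."""
    return HEYGEN_PLAN + VOICE_PLANS[voice_plan]


for plan in VOICE_PLANS:
    total = monthly_total(plan)
    print(f"HeyGen + voice plan ({plan}): ${total}/month, under budget: {total < BUDGET}")
```

Either voice tier keeps the combined subscription under the $50 mark: $34 a month with the entry plan, $40 with the higher-bitrate one.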

This is where we need the standalone audio samples recorded earlier, the cleaner the better. For what ElevenLabs calls an instant voice clone, five minutes or so of audio is good; subscribers at the Creator level or above can upload around 30 minutes of audio to create a more polished professional voice clone.
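If your samples are scattered across several files, it helps to know how much audio you actually have before uploading. The following is a minimal sketch that assumes your clips are uncompressed WAV files in one folder. The folder name and function names are hypothetical, and the thresholds are simply the rough figures mentioned above, not official limits.

```python
import wave
from pathlib import Path

INSTANT_CLONE_SECONDS = 5 * 60        # "five minutes or so" from the article
PROFESSIONAL_CLONE_SECONDS = 30 * 60  # "around 30 minutes" from the article


def wav_seconds(path) -> float:
    """Length of one uncompressed WAV file, in seconds."""
    with wave.open(str(path), "rb") as clip:
        return clip.getnframes() / clip.getframerate()


def total_seconds(folder) -> float:
    """Sum the length of every .wav file directly inside the folder."""
    return sum(wav_seconds(p) for p in sorted(Path(folder).glob("*.wav")))


def clone_tier(seconds: float) -> str:
    """Compare the total against the article's rough guidance."""
    if seconds >= PROFESSIONAL_CLONE_SECONDS:
        return "enough for a professional clone"
    if seconds >= INSTANT_CLONE_SECONDS:
        return "enough for an instant clone"
    return "keep recording"


if __name__ == "__main__":
    # "voice_samples" is a placeholder folder name.
    print(clone_tier(total_seconds("voice_samples")))
```

This only measures length. It says nothing about background noise or clipping, so a listen-through is still worth the time.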

The process is very similar to HeyGen's, and you end up with a voice that can sound very realistic. ElevenLabs provides controls for altering the voice's presentation, including stability, similarity, and voice exaggeration, although if you mess with the controls too much, you start to get some artifacts in the audio.

But how do I use my ElevenLabs voice together with my HeyGen video avatar? The nice thing is I can sync them together by hooking the ElevenLabs voice into the HeyGen system via an API key.
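HeyGen handles this hookup for you once you paste in the key, so no coding is required. For a sense of what an API key does behind the scenes, here is a rough sketch of how a text-to-speech request to the voice service could be assembled. The endpoint, the `xi-api-key` header, and the `voice_settings` field names reflect my understanding of the public ElevenLabs API and should be checked against its current documentation. The function name, the default setting values, and the key and voice ID are placeholders. The sketch only builds the request and does not send it.

```python
import json
import urllib.request

# Assumed endpoint; verify against the current API documentation.
API_BASE = "https://api.elevenlabs.io/v1/text-to-speech"


def build_tts_request(api_key, voice_id, text,
                      stability=0.5, similarity=0.75, style=0.0):
    """Assemble (but do not send) a text-to-speech request for one cloned voice."""
    payload = {
        "text": text,
        "voice_settings": {
            "stability": stability,          # placeholder default
            "similarity_boost": similarity,  # placeholder default
            "style": style,                  # the "exaggeration" control
        },
    }
    return urllib.request.Request(
        url=f"{API_BASE}/{voice_id}",
        data=json.dumps(payload).encode("utf-8"),
        headers={"xi-api-key": api_key, "Content-Type": "application/json"},
        method="POST",
    )


request = build_tts_request("YOUR_API_KEY", "YOUR_VOICE_ID", "Hello from my clone.")
print(request.get_method(), request.full_url)
```

Sending the request with `urllib.request.urlopen(request)` would return audio data for a valid key and voice ID. The key is what ties the request to your account and your cloned voice, which is why it should be treated like a password.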

Step 4: Putting it all together

Once the AI clone is set up, you can use it in various ways. For example, if I wanted a video version of a Micro Center News article, I’d take the text, import it into HeyGen, then choose the voice from ElevenLabs. I'd give it a few sample listens, changing the text, adding pauses, and fixing pronunciation where needed.
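Some of that script cleanup can be done before the text ever reaches HeyGen. The sketch below is one possible approach, not a feature of either service: it swaps tricky words for phonetic respellings and estimates how long the narration will run. The function names and the example respelling are hypothetical, and the 150 words-per-minute pace is an assumption that will vary by speaker.

```python
import re

WORDS_PER_MINUTE = 150  # assumed speaking pace; adjust to match your own delivery


def apply_respellings(script: str, respellings: dict) -> str:
    """Replace whole words with phonetic spellings, e.g. 'GPU' -> 'G P U'."""
    for word, spoken in respellings.items():
        pattern = rf"\b{re.escape(word)}\b"
        # A function replacement keeps backslashes in `spoken` literal.
        script = re.sub(pattern, lambda _match, s=spoken: s, script)
    return script


def estimated_minutes(script: str, wpm: int = WORDS_PER_MINUTE) -> float:
    """Rough narration length based on word count."""
    return len(script.split()) / wpm


draft = "A new GPU needs the right power supply."
cleaned = apply_respellings(draft, {"GPU": "G P U"})
print(cleaned)
print(f"About {estimated_minutes(cleaned):.2f} minutes of audio")
```

The runtime estimate is handy for keeping an eye on a monthly audio allowance, since every test render counts against it.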

Then, I'd let it process and review the results. I like to leave a little blank space in the timeline at the end of the clip so the video doesn't cut off immediately after I get the last word out. Your avatar will just kind of sit there and move around a bit, filling in with the non-verbal cues you gave it during your original video recording.

For a final touch, I added a third AI element -- a custom GPT created in OpenAI's ChatGPT tool and trained on my professional writing and career. I decided to ask that chatbot version of myself a couple of simple tech questions, then take the unedited text responses and import them into my HeyGen/ElevenLabs workflow.

In the video above, you can see me ask myself these questions -- about GPU power supplies and new video games -- and my clone offers decent, well-informed answers.

Even a couple of years into the AI era, I'm still amazed that, for less than $50, you can create a digital double. It's not perfect yet, and it’s easy to spot artifacts or robotic inflections if you overdo the settings. But overall, it's an impressive bit of DIY AI, and a glimpse into a future where tech and AI literacy will be crucial for distinguishing what's real and what's virtual.

Micro Center Editor-in-Chief Dan Ackerman is a veteran tech reporter and has served as Editor-in-Chief of Gizmodo and Editorial Director at CNET. He's been testing and reviewing laptops and other consumer tech for almost 20 years and is the author of The Tetris Effect, a Cold War history of the world's most influential video game. Contact Dan at dackerman@microcenter.com.
