DeepSeek's New AI Challenges ChatGPT — and You Can Run It on Your PC

AI company DeepSeek emerged from seemingly nowhere in late January 2025 with a model that rivals the quality of models from LLM leaders, such as OpenAI’s o1. It came as such a surprise, and earned so much buzz, that it sparked a stock market rout among U.S. tech stocks.

But DeepSeek isn’t another closed-off model privately deployed on a fleet of NVIDIA GPUs. The web and mobile apps are free to use, the API is inexpensive enough for even hobbyist developers, and anyone can download the model and run it on a home computer. The full model can rival OpenAI’s best, yet smaller distilled versions can run on hardware as modest as a Raspberry Pi.

What is it?

DeepSeek is an AI company founded in 2023. It’s owned by High-Flyer, a China-based hedge fund, but DeepSeek itself focuses on large language models (LLMs). The current excitement surrounding the company stems from two recently released models: DeepSeek-V3 and DeepSeek-R1.

DeepSeek-V3 is a solid GPT-4o look-alike that posts great benchmark scores, yet DeepSeek claims it cost less than six million dollars to train (the full picture is somewhat more complicated). That’s far less than the hundreds of millions of dollars companies like OpenAI sink into training models. Despite that, DeepSeek-V3 is competitive with, if not a little better than, GPT-4o in most AI benchmarks.

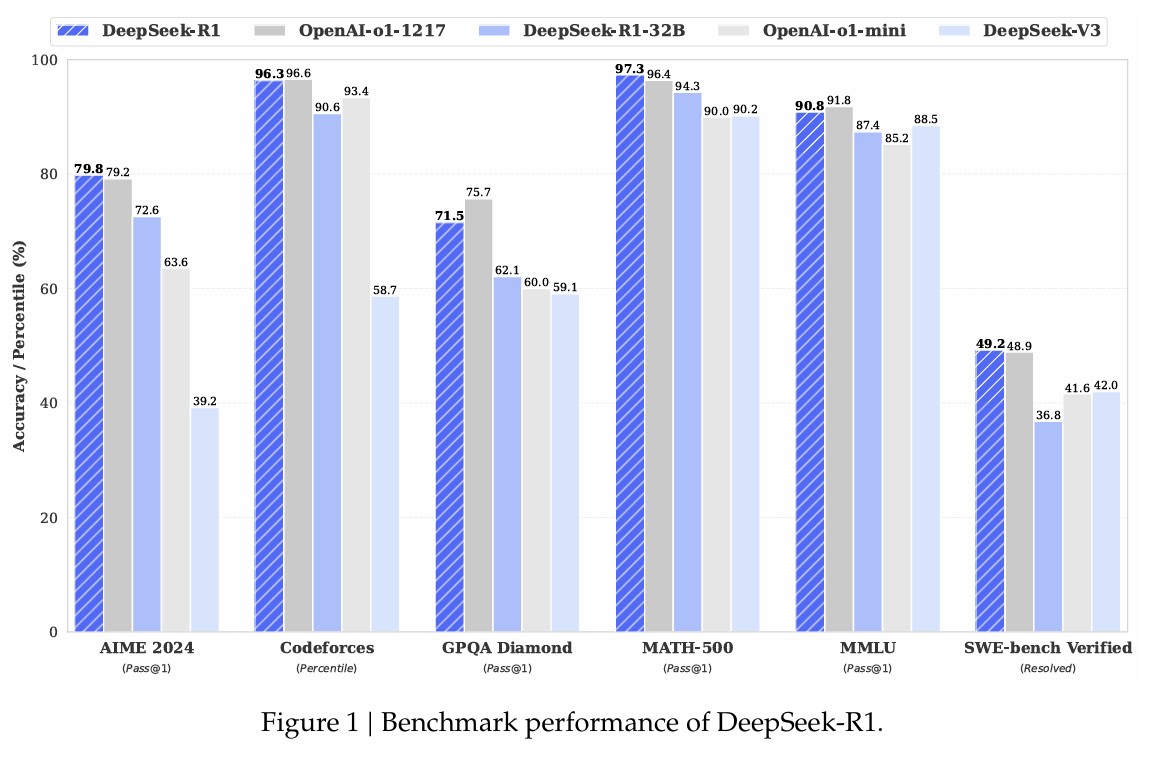

DeepSeek-R1 is more interesting. It uses chain-of-thought (CoT) reasoning, a technique in which an LLM works through a problem step by step, prompting itself with intermediate questions, before it answers the user’s prompt. That improves performance on multi-step problems and logic puzzles. DeepSeek-R1 isn’t the first CoT model, but its benchmark scores land just a hair behind OpenAI’s o1, and it beats both Google Gemini 2.0 and Claude 3.5 Sonnet in those same benchmarks.

How good is it?

Performance varies between DeepSeek-V3 and DeepSeek-R1, but both are very, very good.

DeepSeek-V3 claims a score of 88.5 in the MMLU benchmark, a test that pits LLMs against a broad range of questions across subjects such as math, economics, and philosophy. DeepSeek-R1 is even better, with a claimed score of 90.8. Both numbers come from DeepSeek’s own technical reports on its models and still need to be independently verified. OpenAI’s o1, by comparison, scored 91.7.

Benchmark numbers don’t really tell you how it feels to use an LLM, but I was more than pleased with DeepSeek’s performance. I most frequently use LLMs for brainstorming, editing, and writing or debugging code for personal projects, like my D&D combat tracker. DeepSeek felt like OpenAI’s ChatGPT (right down to the interface) and spat out comparable results.

Does it really beat OpenAI’s o1 or Anthropic’s Claude 3.5 Sonnet?

DeepSeek’s new models are remarkable technical achievements that show it’s possible to train an excellent LLM on far less capable hardware than previously thought, but they don’t beat the pants off leading competitors.

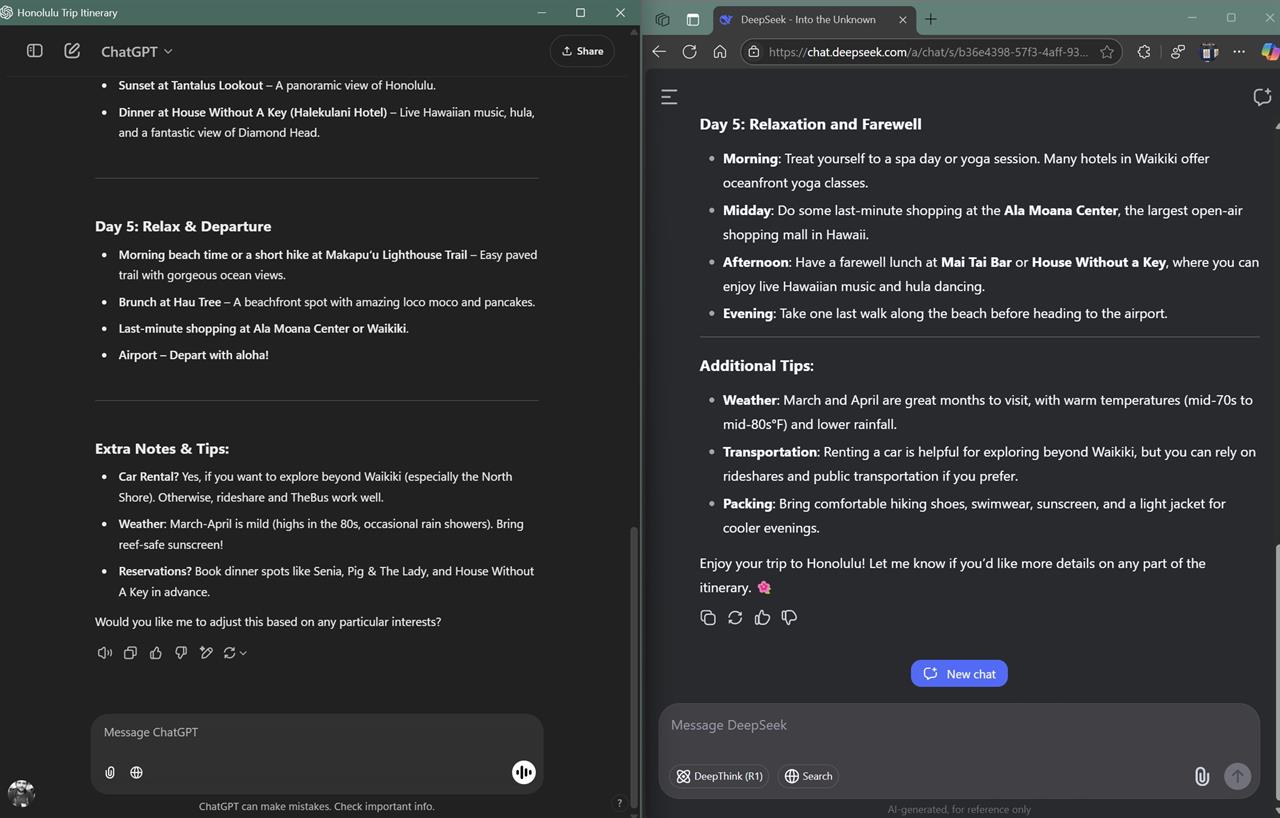

Compared to ChatGPT, DeepSeek-V3 and DeepSeek-R1 feel almost identical. They deliver responses with similar formatting (both love bullet points), and both will throw in a few emoji to add playfulness when a topic seems casual. Both tend to be concise, as well, skewing towards short, to-the-point answers. They’re similarly quick to respond in most situations, but DeepSeek frequently spat back a “server busy” error.

Pitted against Claude, DeepSeek-V3 and DeepSeek-R1 feel quite different. Claude is more serious, formal, and detailed. It rarely inserts emoji unless specifically asked, and it’s less aggressive with bullet points. Also, unlike both DeepSeek and ChatGPT, Claude doesn’t present CoT as an option you select. Instead, Claude automatically runs CoT behind the scenes when it thinks it’s necessary.

I wouldn’t say any of these models is better or worse than the others. ChatGPT and DeepSeek are very similar, so much so that I’m not sure I’d notice any difference in their replies in a blind test (which is, perhaps, intended). Claude feels different from ChatGPT and DeepSeek, and I personally prefer the style of its replies and its user interface, but I can’t say it’s objectively better.

It’s mostly free for personal use, and the API is cheap

So, if DeepSeek’s models aren’t necessarily better than ChatGPT or Claude, why would you want to use them?

Simple. They’re cheap!

DeepSeek’s website and app provide free access to both DeepSeek-V3 and DeepSeek-R1. Both presumably have rate limits, but I didn’t encounter them in my use. Unlike ChatGPT, DeepSeek doesn’t even have a paid consumer plan (yet). It’s free or bust.

Instead, DeepSeek charges for access to its API. This is the method developers use to call DeepSeek’s models from their own app, website, or service. Pricing changes over time, but at the moment, DeepSeek-V3 is about 35x less expensive than GPT-4o, and DeepSeek-R1 is about 27x less expensive than OpenAI’s o1.
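For developers curious what “calling the API” looks like, here is a minimal sketch using only Python’s standard library. It assumes DeepSeek’s OpenAI-compatible chat endpoint (`https://api.deepseek.com/chat/completions`) and its model names `deepseek-chat` (V3) and `deepseek-reasoner` (R1), as documented at the time of writing; check DeepSeek’s API docs before relying on them. The `DEEPSEEK_API_KEY` environment variable and the helper function names are hypothetical choices for this example.

```python
import json
import os
import urllib.request

# Assumption: DeepSeek's OpenAI-compatible chat endpoint; verify against the current docs.
DEEPSEEK_URL = "https://api.deepseek.com/chat/completions"


def build_chat_request(prompt: str, api_key: str, reasoning: bool = False) -> urllib.request.Request:
    """Build (but do not send) a chat-completion request.

    reasoning=True selects "deepseek-reasoner" (R1); otherwise "deepseek-chat" (V3).
    """
    body = {
        "model": "deepseek-reasoner" if reasoning else "deepseek-chat",
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        DEEPSEEK_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def ask(prompt: str, reasoning: bool = False) -> str:
    """Send the request and return the reply text (needs a network connection and a paid key)."""
    # DEEPSEEK_API_KEY is a hypothetical environment-variable name for this sketch.
    req = build_chat_request(prompt, os.environ["DEEPSEEK_API_KEY"], reasoning)
    with urllib.request.urlopen(req) as resp:
        data = json.load(resp)
    return data["choices"][0]["message"]["content"]
```

Usage: `print(ask("Suggest three names for a D&D combat tracker", reasoning=True))`. Keeping request construction separate from sending makes it easy to inspect exactly what gets billed before spending any tokens.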

That cuts to the heart of why DeepSeek made headlines. Its models don’t beat those from OpenAI or Anthropic, but they offer similar results for a tiny fraction of the price. It makes AI far more affordable, not only for professional developers, but also for enthusiasts looking to add AI to a personal project.

And you can run DeepSeek on your PC (or Mac)

Accessing DeepSeek in a browser, or over the API, is inexpensive and easy. But what if you want to run it on your home computer or laptop? You can do that, too.

DeepSeek’s models are open weight, meaning the model weights are free to download from AI communities like Hugging Face, so individuals can run the model locally. Better still, DeepSeek released numerous distilled models with lower parameter counts that run on consumer hardware. The smallest has just 1.5B parameters and should work on most consumer PCs with at least 8GB of memory.
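Why parameter count matters so much comes down to simple arithmetic: the weights alone take roughly parameters × bits per weight ÷ 8 bytes. The sketch below applies that rule of thumb. It ignores the context cache and runtime overhead, so real memory use is higher, and the 4-bit quantization level is an assumption (local frontends commonly offer quantized downloads at several bit widths).

```python
def weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate memory for model weights alone, in gigabytes (10^9 bytes).

    Ignores the KV cache, activations, and runtime overhead, so actual usage is higher.
    """
    bytes_total = params_billion * 1e9 * bits_per_weight / 8
    return bytes_total / 1e9


if __name__ == "__main__":
    # Assumed 4-bit quantization; 671B is the total parameter count of the full V3/R1 models.
    for name, params in [
        ("R1-Distill 1.5B", 1.5),
        ("R1-Distill-Llama-8B", 8),
        ("Full V3/R1", 671),
    ]:
        print(f"{name}: ~{weight_memory_gb(params, 4):.1f} GB of weights at 4-bit")
```

At 4 bits per weight, the 1.5B model needs well under 1 GB for its weights, while the full 671B-parameter model needs over 300 GB, which is why only the distilled versions fit on a typical PC.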

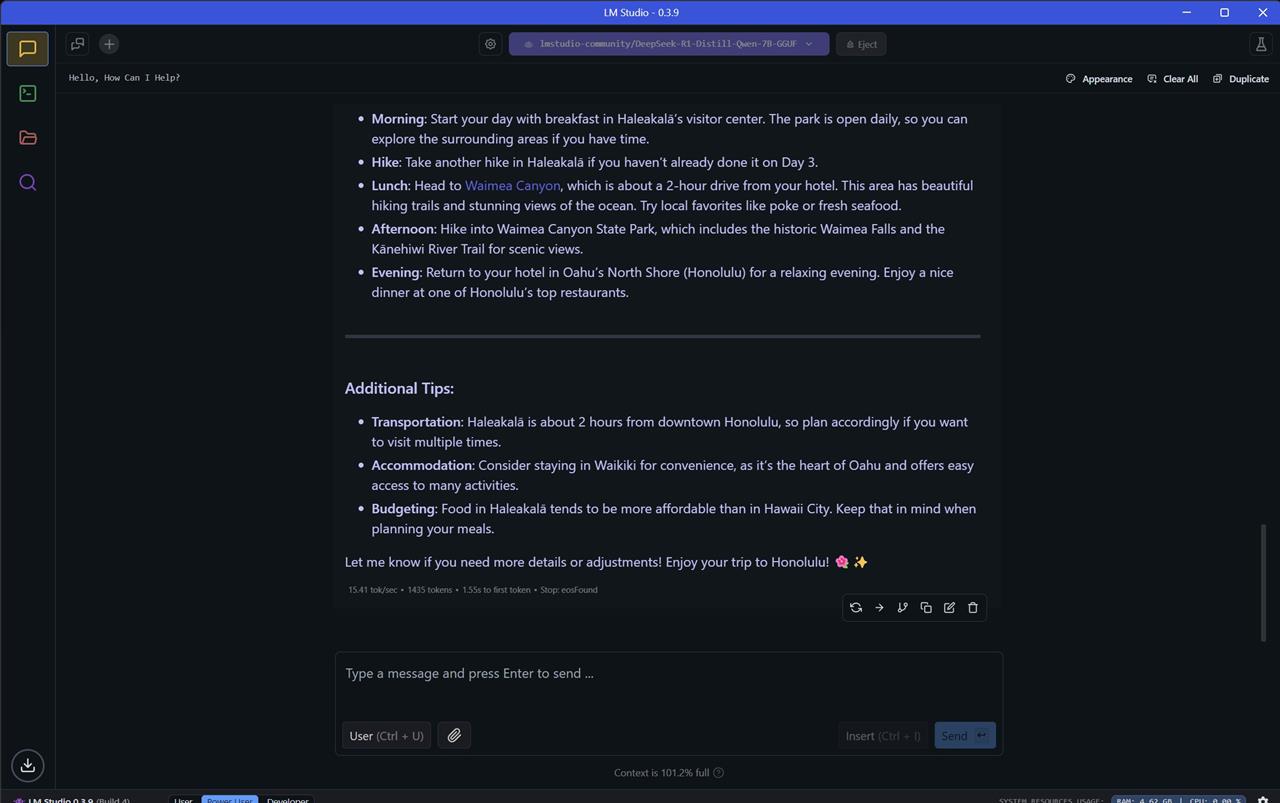

It's not difficult to use, either. Popular local LLM frontends, like LM Studio, quickly added support for DeepSeek.

You can use DeepSeek through LM Studio by downloading and installing the LM Studio app to your Windows, Mac, or Linux computer. Once installed, open the app and search for DeepSeek in the Discover tab (which should appear by default). You’ll see a list of options. Select one (I recommend DeepSeek-R1-Distill-Llama-8B for PCs with at least 32GB of RAM), hit download, and wait. It will automatically become available in the Chat interface once the download has finished.
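The steps above use LM Studio’s graphical interface. LM Studio can also serve downloaded models through an OpenAI-compatible local server, which you start from inside the app and which listens on port 1234 by default. The sketch below asks that server which models it exposes and picks out the DeepSeek ones. It assumes the server is running at its default address; the exact model ID strings vary by download, so the ones in the usage example are illustrative, and the function names are hypothetical.

```python
import json
import urllib.request

# Assumption: LM Studio's local server is running at its default address.
LMSTUDIO_MODELS_URL = "http://localhost:1234/v1/models"


def deepseek_model_ids(models_response: dict) -> list[str]:
    """Return model IDs from an OpenAI-style model list that mention DeepSeek (case-insensitive)."""
    return [
        m["id"]
        for m in models_response.get("data", [])
        if "deepseek" in m.get("id", "").lower()
    ]


def fetch_models(url: str = LMSTUDIO_MODELS_URL) -> dict:
    """Fetch the model list from the local server (fails if the server isn't running)."""
    with urllib.request.urlopen(url, timeout=5) as resp:
        return json.load(resp)
```

Usage: `print(deepseek_model_ids(fetch_models()))` might print something like `['deepseek-r1-distill-llama-8b']` once the download finishes and the server is started. Nothing leaves your machine, since the request only goes to `localhost`.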

Note: You will need LM Studio 0.3.7 or newer. If you already have an older version on your computer, you may need to update or reinstall it.

While it’s neat to use DeepSeek’s models on your local machine, be warned: the distilled models won’t perform nearly as well as the full-sized model available through DeepSeek’s app and API. They’re still useful for less demanding tasks, but users wanting to tackle challenging reasoning, coding, math, and logic problems won’t be impressed.

And, yes, it’s possible to download the full-sized DeepSeek-V3 and DeepSeek-R1 models. Your computer needs a couple hundred gigabytes of memory to run them, though, so they’re not exactly practical.

What about data privacy?

DeepSeek’s privacy policy notifies users that it collects a broad swath of data. This includes not only the data provided to DeepSeek directly but also “automatically collected” data like the device and browser you’re using, your general location, your IP, and so on.

None of this is unusual. Many large online services collect the same or similar information. Still, you should use DeepSeek with the assumption that everything you type, every file you upload, and every session you start is being tracked and stored.

DeepSeek’s privacy policy also applies only to DeepSeek’s web interface, app, and API. DeepSeek models hosted by other services, like Microsoft Azure, are subject to the host’s data privacy policy, not DeepSeek’s. And, of course, running DeepSeek’s LLM on your own PC will eliminate most data privacy concerns, since the model is running on your own hardware.

The launch of DeepSeek has set a new standard for free, widely available LLMs. Though not quite the best models in the world, DeepSeek-V3 and R1 land incredibly close to OpenAI’s class-leading o1. Yet, despite the slim gap between them, DeepSeek’s models are many times more affordable. That makes DeepSeek an obvious go-to for both AI professionals and enthusiasts who want an open, affordable, and capable LLM.


Matthew S. Smith is a prolific tech journalist, critic, product reviewer, and influencer from Portland, Oregon. Over 16 years covering tech, he has reviewed thousands of PC laptops, desktops, monitors, and other consumer gadgets. Matthew also hosts Computer Gaming Yesterday, a YouTube channel dedicated to retro PC gaming, and covers the latest artificial intelligence research for IEEE Spectrum.