Roll Your Own GPT: Setting Up Your Computer for Local AI

Learn these basics and you'll be on the way to running AI in no time.

Image: LM Studio

In the less than two years since OpenAI launched ChatGPT, artificial intelligence chatbots have already become a regular part of the lives of millions of people. Apple's Siri, Amazon's Alexa and the Google Assistant have been around for years, but the new class of AIs like ChatGPT, Microsoft's Copilot and Google's Gemini offer far more interactive help with coding, writing, and decision making.

The downside is that each of these chatbots requires an internet connection and most charge a monthly subscription for advanced features.

But good news: You can build your own ChatGPT-like bot at home, on the computer right in front of you. The largest modern AIs use far more computing power than most of us have at home, so a local model won't match them. Even so, some of the chatbots you can run locally are pretty impressive, and new AI-capable chips and components are only going to make it easier to run powerful models on your own machine.

Hardware needs

Many people hold onto computer hardware for several years without ever feeling the need to upgrade. That's very different from a decade or two ago, when people typically upgraded their computers every couple of years because the technology had advanced so much in such a short time. Back then, chip advancements meant significant battery life improvements, as well as wow-worthy upgrades to video games.

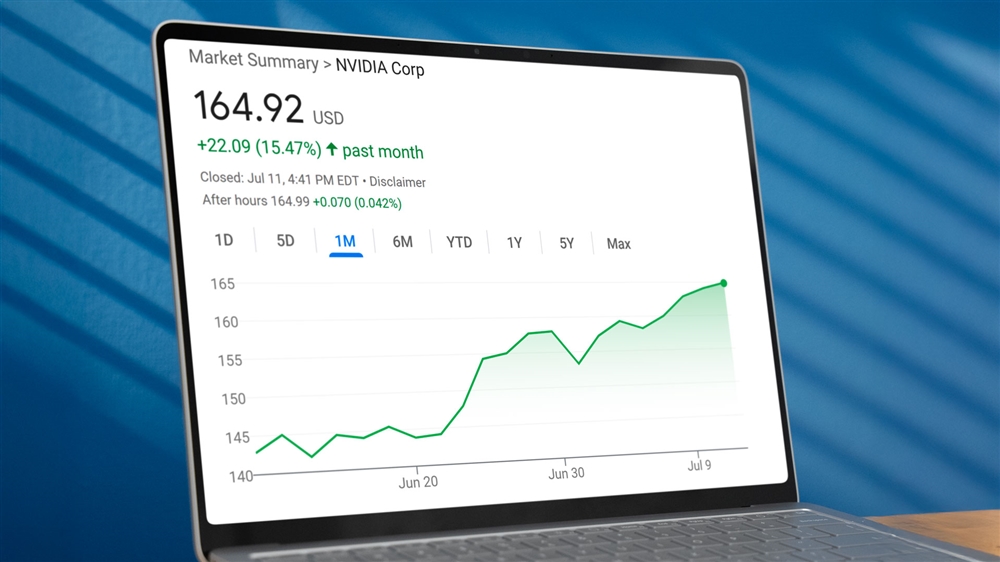

AI is kicking us back into that feeling, as CPUs, GPUs and NPUs from Intel, AMD, NVIDIA, Qualcomm and ARM add specialized functions to support chatbots and machine learning algorithms.

If you're looking to future-proof today, focus your purchasing on high-performance, high-memory GPUs, such as NVIDIA's RTX 40-series cards. You'll also want more system memory: many AI apps need at least 16GB just to run on their own, so 32GB is likely to become standard sooner rather than later. Intel's latest Core Ultra chips also include NPU hardware that can take over AI tasks from the CPU as needed.

You'll also want to invest in fast, ample storage, such as PCIe 4.0 NVMe SSDs, which can quickly load the large model files and datasets that local AIs run from.

Choose your app

There are already several apps designed to make running your own AI on your home computer relatively easy.

One of the most popular is GPT4All, which, just as the name suggests, is designed to help anyone run an AI on their own computer.

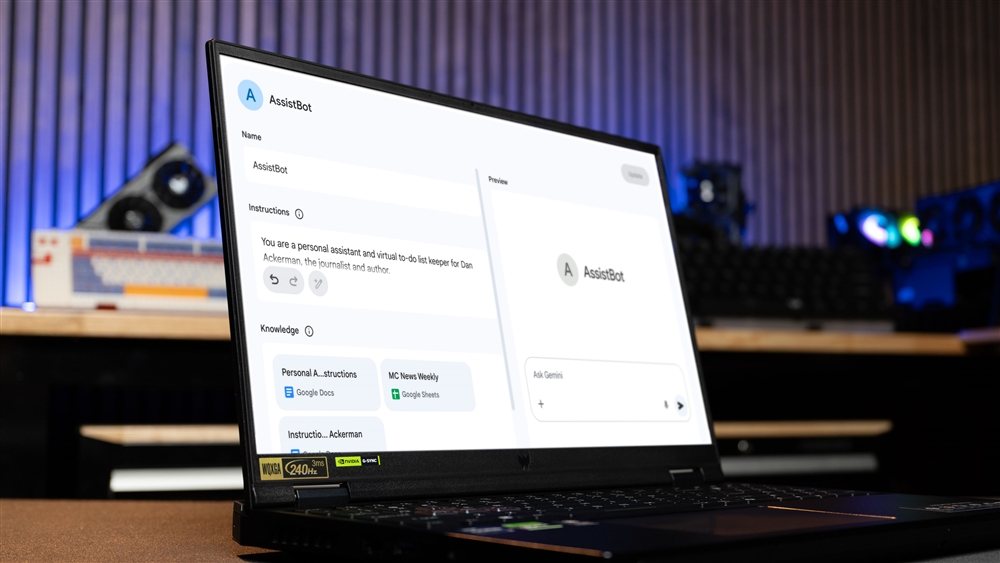

Another is LM Studio, which is similar to GPT4All but offers access to many more models, and with them a lot more complexity.

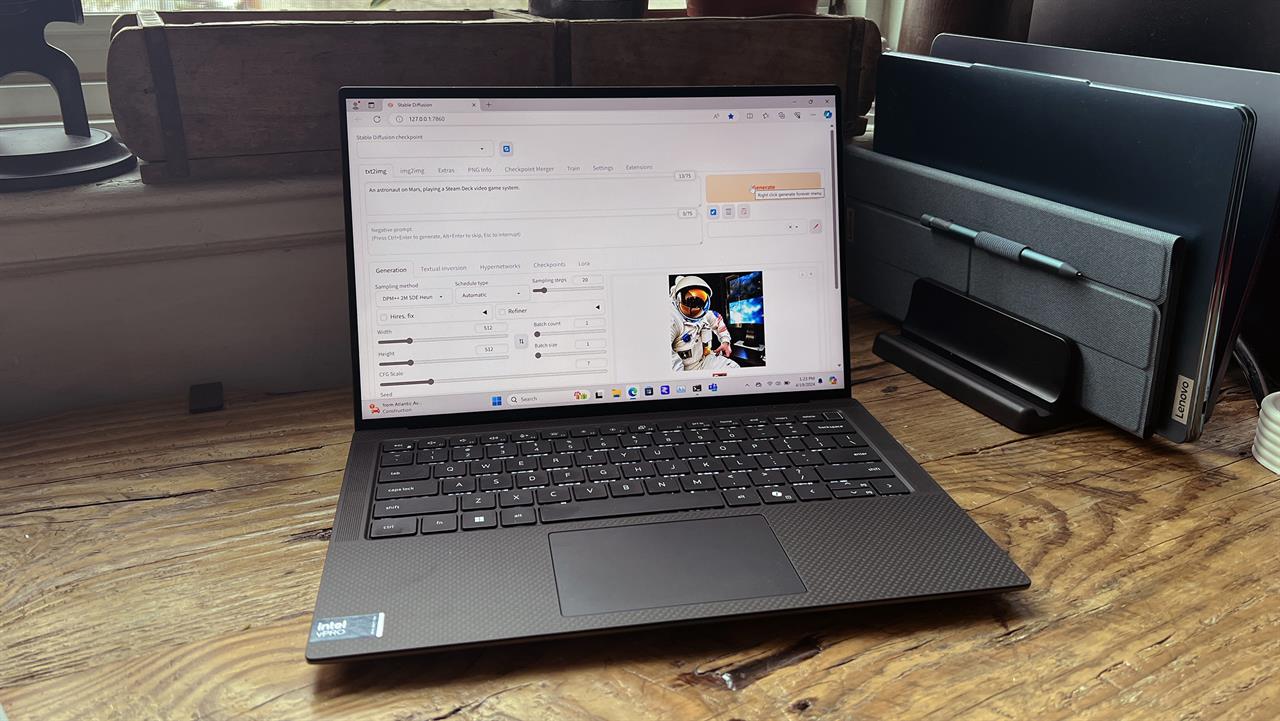

For generating images, there are apps like Draw Things, Diffusers and DiffusionBee on Mac. For Windows, it's a little more involved: the usual route is Stable Diffusion web UI, commonly known as Automatic1111 after its developer.

Finally, there are specialized apps like Reor, an app built around taking notes that you can then "chat" with in order to pull useful information out of them. There are also MacWhisper and Speech Translate, which provide audio transcription using OpenAI's open-source Whisper technology.

NVIDIA also offers ChatRTX, which works on systems with RTX 30-series or 40-series NVIDIA GPUs and supports local versions of LLMs including Mistral and Llama.

Photo: Dan Ackerman -- A Dell Precision laptop running Stable Diffusion.

Choose your model

Running an AI isn't as easy as just downloading an app. You also have to choose your "AI model," which is effectively the brain of the AI. Larger models take up more memory, and they have typically been trained either to do many things acceptably or to do a few things very well.
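To get a feel for how model size translates into memory, a common rule of thumb is that the weights alone take roughly the parameter count times the bits per weight, divided by eight, in bytes. The sketch below applies that arithmetic. The function names and the 20% overhead allowance are illustrative assumptions, not figures from any particular app, and real usage varies with the app and the length of your conversations.

```python
def estimate_model_memory_gb(params_billions: float, bits_per_weight: int,
                             overhead: float = 0.2) -> float:
    """Rough memory estimate for a model, in gigabytes (1 GB = 1e9 bytes).

    overhead is an assumed allowance for working memory on top of the weights.
    """
    weight_bytes = params_billions * 1e9 * bits_per_weight / 8
    return weight_bytes * (1 + overhead) / 1e9


def fits_in_memory(params_billions: float, bits_per_weight: int,
                   available_gb: float) -> bool:
    """True if the rough estimate is within the memory you have available."""
    return estimate_model_memory_gb(params_billions, bits_per_weight) <= available_gb


if __name__ == "__main__":
    # Compare a 7-billion-parameter model at three common precisions.
    for bits in (16, 8, 4):
        needed = estimate_model_memory_gb(7, bits)
        print(f"7B model at {bits}-bit: about {needed:.1f} GB")
```

By this estimate, a 7-billion-parameter model stored at 4 bits per weight should fit comfortably on a 16GB machine, while the same model at 16 bits would not.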

Whisper is a great example of this: A smaller model can quickly transcribe an audio recording to text but will typically get some words wrong or ignore punctuation. A more advanced model infers punctuation marks and might even be able to identify different speakers.
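If you'd rather try Whisper directly than through an app, here is a minimal sketch using the open-source `openai-whisper` Python package. It assumes you have installed that package and ffmpeg. The memory figures are approximate values along the lines of those in the project's documentation, and `pick_whisper_model` is a hypothetical helper written for this example, not part of Whisper itself.

```python
# Approximate memory needs in GB for each Whisper model size, smallest first.
# Treat these as ballpark figures, not guarantees.
WHISPER_MODELS = [("tiny", 1), ("base", 1), ("small", 2), ("medium", 5), ("large", 10)]


def pick_whisper_model(available_gb: float) -> str:
    """Return the largest model whose approximate requirement fits."""
    choice = "tiny"  # fall back to the smallest model
    for name, needed_gb in WHISPER_MODELS:
        if needed_gb <= available_gb:
            choice = name
    return choice


def transcribe(path: str, available_gb: float) -> str:
    """Transcribe an audio file with the largest model that should fit."""
    import whisper  # imported here so the helper above works without the package

    model = whisper.load_model(pick_whisper_model(available_gb))
    result = model.transcribe(path)
    return result["text"]
```

Calling `transcribe("interview.mp3", available_gb=4)` would load the "small" model, where `interview.mp3` stands in for your own recording. Moving up to "medium" or "large" trades speed for accuracy.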

Fair warning: Choosing your model can be a rabbit hole in and of itself. If you thought online debates about RAM timings and CPU overclocking got overwhelming at times, get ready. LM Studio is an example of putting a simpler interface on top of a complex subject, as it can search for and download models from Hugging Face for you.

Internet or not?

It's important to consider how functional you want your home-grown AI to be. Some apps are building in extra features that can connect with OpenAI, Microsoft or Google through their APIs, effectively offloading larger tasks to their massive data centers.

Of course, this comes with a price, so consider what you're comfortable with. For now, we recommend using at least two different AIs: One for local use, and one for internet-powered use. That way, you can split tasks based on how much processing power you need. And for each, you might need a text/chat-based AI like LM Studio and another one, like Stable Diffusion, for generating images.

Complex fun

Right now, running an AI on your computer is much more complicated than using a simple online interface like ChatGPT, Gemini or Copilot. Installing some of these tools, such as Stable Diffusion, can require digging into things like Python and running code in the command prompt. But you'll likely be impressed with the results and want to continue to dig deeper.

Stay tuned for more detailed tutorials on installing and running specific local AI apps, and for reviews of more AI-capable hardware.

Ian Sherr is a widely published journalist who's covered nearly every major tech company from Apple to Netflix, Facebook, Google, Microsoft, and more for CBS News, The Wall Street Journal, Reuters, and CNET. His stories and their insights have moved markets, changed how companies see themselves and given readers a unique view into how some of the world’s most powerful brands operate. Aside from writing, he tinkers with tech at home, is a longtime fencer -- the kind with swords -- and began woodworking during the pandemic.