How to Build Your Own AI Personal Assistant

The key to customizing an AI tool to better understand you? Write it a job description.

For years, I tried to wrangle a chaotic mix of deadlines, meetings, and a never-ending to-do list with a patchwork of apps and notebooks, but I never found a single tool that truly fit my workflow.

It turns out what I really needed wasn't another app, but a personal assistant. Someone who could take notes at a moment's notice, yes, but more importantly, someone who could ingest a wide variety of tasks, ideas, and events -- delivered via natural language or in any number of formats -- and then intelligently organize and interpret it all.

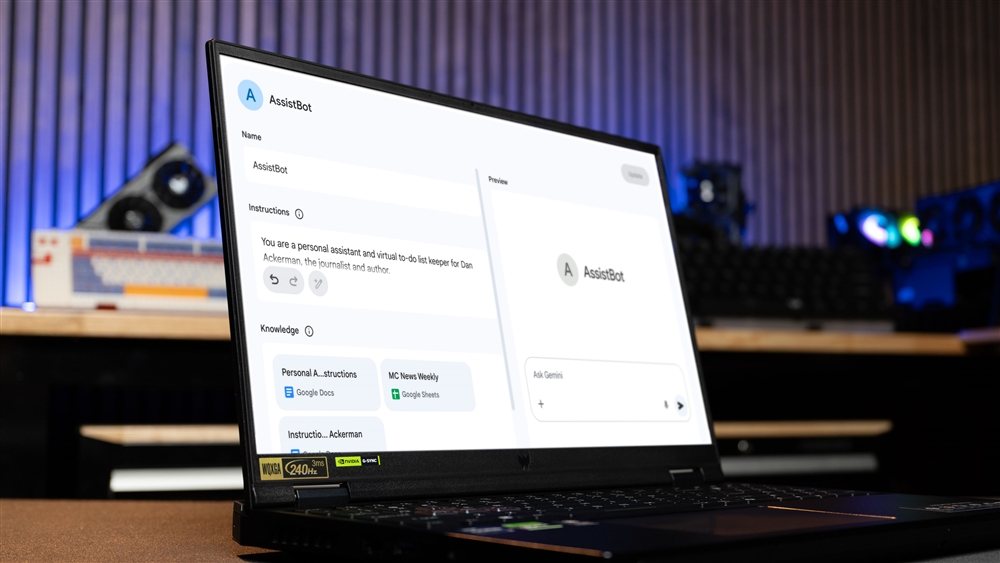

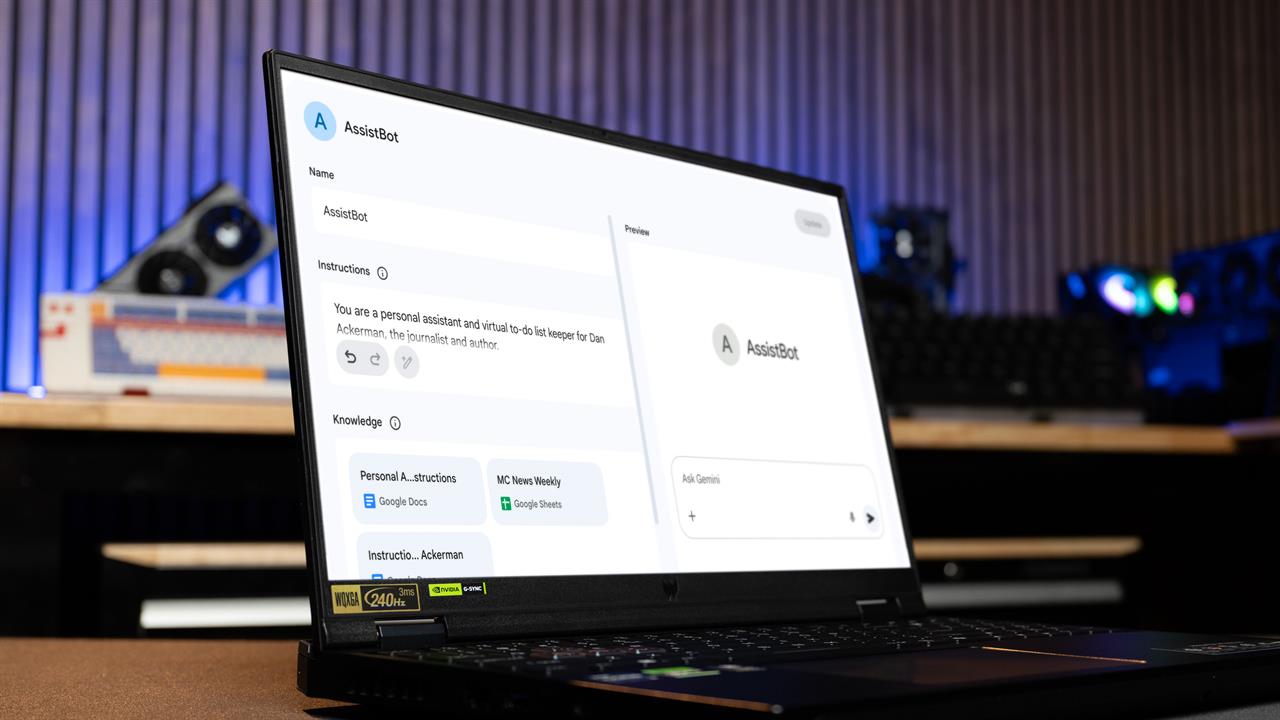

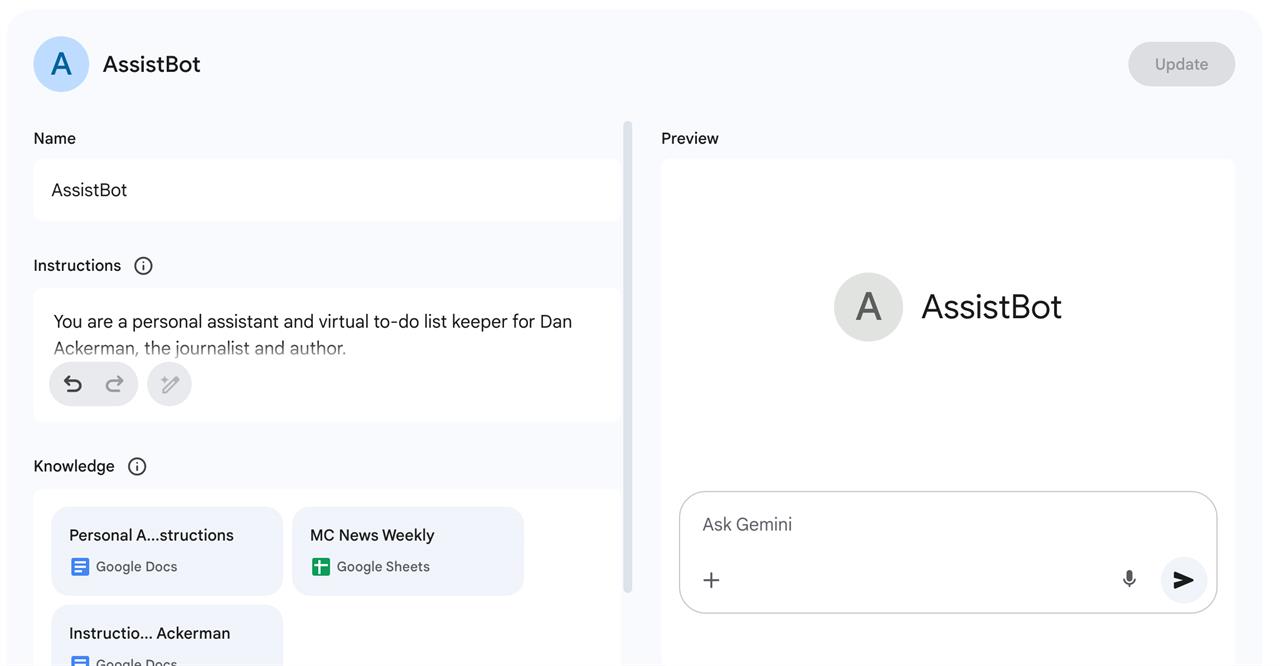

That's when I decided to build my own AI personal assistant. I built it using a custom Gem in Google's Gemini AI service, running the Gemini 2.5 Pro model. I initially called it AssistBot, which was a placeholder name for Version 1.0, but so far I've stuck with that name.

It's more than just a calendar or a note-taking app. AssistBot is a personalized AI, tailored to my specific needs and workflow. It helps me stay organized and on schedule by anticipating my needs and communicating clearly and concisely. And the best part? I built it myself with off-the-shelf AI tools, and you can too.

Prototype pains

My initial foray into the world of AI assistants was, to put it mildly, not ready for primetime. The biggest initial challenge was getting the AI to accurately see and process information from all the sources I use, including apps like Outlook and Google Docs.

Getting AI tools to talk to apps, and ideally take action in those apps on your behalf, is the promise of agentic AI. But it's not here yet for most of us. Google's Gemini can see and understand some of what's in Google Docs and Sheets, but not always. Similarly, Microsoft's Copilot can interact in some ways with Outlook and Office, but only if you have the right (paid) version of each. And cross-platform communication? Not without jumping through a lot of hoops. (That said, ChatGPT, Claude, and other AI tools all have pretty decent Google Docs integration.)

Like many people, I use a combination of Outlook, Gmail, Google Docs, and Office (and a combination of work and personal accounts), so getting all that info into my AI personal assistant is a hurdle.

I initially tried screenshotting emails and calendar appointments, but that led to too many hallucinations or misinterpretations. And while I work on better integration, I found cutting and pasting email and calendar info via text directly into my AI assistant to be a clunky but accurate workaround for now.

Screenshot: Dan Ackerman

Building a better bot

But the real evolution came when I decided to give AssistBot not just a quick set of instructions to act as my personal assistant, but instead wrote a full job description.

Just as if I were hiring an intern or entry-level assistant, I wrote a detailed job description, outlining both my needs and AssistBot's tasks. I structured the AI's role with a clear mission and a hierarchy of responsibilities. Core duties include schedule reminders, task and project organization, and information management. I also wrote a detailed biography and history of my 20-plus years as a technology journalist and product reviewer, so it could understand the context of our conversations better.

Here's a copy of the job description I wrote, which you can easily customize for your own needs.

The key here is that these foundational documents need to go into the project knowledge for my custom Gemini Gem. That way, the bot always has this background to rely on, and I'm not starting each conversation with a blank slate.
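If it helps to see the shape of such a document, here is a minimal sketch in Python that assembles a job description as plain text you could paste into a custom assistant's instructions or knowledge files. The class name, field names, and wording are all hypothetical; only the mission and the three core duties come from the description above, and this is not the actual AssistBot job description.

```python
from dataclasses import dataclass, field


@dataclass
class JobDescription:
    """Hypothetical container for the parts of an assistant's job description."""

    title: str
    mission: str
    core_duties: list[str] = field(default_factory=list)  # highest priority first
    background: str = ""  # your own biography and work history

    def render(self) -> str:
        """Return the job description as plain text, duties numbered by priority."""
        lines = [
            f"Role: {self.title}",
            "",
            f"Mission: {self.mission}",
            "",
            "Core duties (highest priority first):",
        ]
        lines += [f"{i}. {duty}" for i, duty in enumerate(self.core_duties, start=1)]
        if self.background:
            lines += ["", "About the person you work for:", self.background]
        return "\n".join(lines)


# Example values are placeholders; swap in your own needs and history.
job = JobDescription(
    title="AssistBot, personal assistant",
    mission="Keep me organized and on schedule, and communicate clearly and concisely.",
    core_duties=[
        "Schedule reminders",
        "Task and project organization",
        "Information management",
    ],
    background="(Paste your own professional biography here.)",
)

print(job.render())
```

Keeping the duties in an ordered list is one simple way to express a hierarchy of responsibilities: the assistant sees which job comes first when requests compete.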

How it works in practice

Today, AssistBot is an integral part of my daily routine. I start my day asking for an early morning briefing that summarizes my schedule, editorial tasks, and key action items, plus things I've asked it to remind me about.

Then, during the day, I can ask for specific updates: for example, what meetings or in-person appointments I have coming up, or which articles I have scheduled for later that week.

The biggest surprise to me is that I find myself communicating with AssistBot by voice input more often than not. Yes, I still type and cut-and-paste text into it, but I frequently use Gemini's voice input, either from my laptop or from my phone. Via voice, I'm usually either firing off an idea for a future article ("I should write a guide on how to figure out your desktop PSU needs"), or requesting reminders for tasks that pop into my head at random times ("Remind me later this afternoon to check on the new article pitches from our freelance contributors").

This system isn't perfect. As I mentioned in a recent TV news segment, the biggest challenge remains getting the AI to accurately see information from outside apps without hallucinating, and also figuring out how to do context dumps so its memory doesn't get overfilled.

That's a key thing to remember. As AI conversations go on, the model's context window (basically the AI's short-term memory) runs out of capacity, leading to degraded quality. There are only so many chunks of information, measured in "tokens," the model can remember at one time.
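To get a feel for how quickly a long conversation eats into that budget, here is a rough sketch in Python. It assumes the common rule of thumb of about four characters per token for English text, which is only an approximation; real tokenizers vary by model. The function names and the window size in the example are hypothetical, not figures for any specific model.

```python
import math


def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Rough token estimate using an assumed characters-per-token ratio."""
    return math.ceil(len(text) / chars_per_token)


def context_usage(messages: list[str], window_tokens: int) -> float:
    """Return the estimated fraction of the context window the messages use."""
    used = sum(estimate_tokens(message) for message in messages)
    return used / window_tokens


# Hypothetical conversation and window size, for illustration only.
conversation = [
    "Remind me later this afternoon to check on the new article pitches.",
    "Here is my calendar for the week, pasted as text...",
]
share = context_usage(conversation, window_tokens=1_000)
print(f"Estimated share of the window used: {share:.1%}")
```

An estimate like this will not match the model's own count, but it can signal when a conversation is getting long enough that quality may start to slip.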

To get around this, every couple of weeks, I ask AssistBot to create a context dump -- basically a stripped-down list of every date, note, fact, and reminder I've asked it to remember. Then I restart the conversation, giving the fresh version of AssistBot that information to start with (meanwhile, the bigger-picture background, like the job description and my professional biography, remains in the AI's permanent knowledge base). Think of it as balancing the AI's permanent vs. short-term knowledge.
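The dump-and-restart routine can be sketched as two pieces of text: a request you send at the end of the old conversation, and an opening message for the new one. The Python below is one possible way to prepare them. The prompt wording and the function name are assumptions made for illustration, and the cleanup step (dropping blank and duplicate lines) is an optional extra, not something the workflow above requires.

```python
# Hypothetical wording for the request sent to the old conversation.
DUMP_REQUEST = (
    "Create a context dump: a stripped-down list of every date, note, fact, "
    "and reminder I've asked you to remember. One item per line, no commentary."
)


def restart_message(dump_text: str) -> str:
    """Build the first message for a fresh conversation from a pasted dump.

    Blank lines and exact duplicate lines are dropped so the new
    conversation starts with as little clutter as possible.
    """
    seen = set()
    kept = []
    for line in dump_text.splitlines():
        cleaned = line.strip()
        if cleaned and cleaned not in seen:
            seen.add(cleaned)
            kept.append(cleaned)
    header = (
        "This is a context dump from our previous conversation. "
        "Treat every item as something I have already asked you to remember."
    )
    return header + "\n\n" + "\n".join(kept)


# Example dump text is made up for illustration.
pasted_dump = """
- Check on freelance article pitches this afternoon
- Idea: guide to figuring out desktop PSU needs

- Check on freelance article pitches this afternoon
"""
print(DUMP_REQUEST)
print()
print(restart_message(pasted_dump))
```

The job description and biography are deliberately left out of the restart message, since they already live in the assistant's permanent knowledge.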

But even with these limitations, the benefits of building my own custom AI assistant have been immense. It's not about replacing human effort, but about augmenting it. It’s about building a co-pilot that can handle some of the logistical stuff, freeing me up to focus on what I'd rather be doing: writing, creating, and telling stories.

Dan Ackerman is the Editor-in-Chief of Micro Center News. A veteran technology journalist with nearly 20 years of hands-on experience testing and reviewing the latest consumer tech, he previously served as Editor-in-Chief of Gizmodo and Editorial Director at CNET. He is also the author of The Tetris Effect, the critically acclaimed Cold War history of the world's most influential video game. Contact Dan at dackerman at microcenter.com.