NVIDIA RTX 4000 Series Breakdown: RTX 4090 vs. RTX 4080 vs. RTX 4070 Ti

With sky high core counts, clock speeds, and more and faster memory than ever before, there’s a lot to love about NVIDIA’s RTX 4000 series, but there are a few things you should know before you commit.

NVIDIA’s RTX 4000 series of graphics cards ushered in a new era of performance and features when it debuted in the fall of 2022, and even with new GPUs from the competition, it looks set to remain the dominant ray-tracing GPU line for this generation. With sky-high core counts, clock speeds, and more and faster memory than ever before, there’s a lot to love about these new cards, but there are a few things you should know before you commit.

For starters, the RTX 4090 and RTX 4080 are gigantic cards, coming in longer, taller, and much wider than anything else out there. They also pull a lot of power, and kick out a lot of heat, demanding heavy cooling and even a new power adapter for good measure. The RTX 4070 Ti is much more modest, resembling a traditional GPU in size and shape, but it’s still a triple slot card – so you’re going to need a lot of room in your system if you’re upgrading to the latest generation of NVIDIA GPUs.

These aren’t insurmountable challenges to a modern gaming PC, however. As long as you have an 850W PSU (or more), plenty of space in your case, and capable system cooling, there’s nothing to stop you sporting one of the most powerful graphics cards ever made. Unsure of which is right for you though? Let’s take a look at how the top new NVIDIA GPUs of this generation compare. This is the RTX 4090, RTX 4080, and RTX 4070 Ti.

Ada Lovelace – An Overview

The NVIDIA 40-series graphics cards are based on the new Ada Lovelace architecture and are built on TSMC’s 4N process, a custom process node co-developed with NVIDIA. Despite the similar name, it is a different design from TSMC’s N4 node, which other devices have made use of.

Lovelace features a new, fourth generation of NVIDIA’s Tensor Cores used for deep learning super sampling and AI acceleration, as well as a new third-generation of RT Cores, used for RTX-driven ray tracing. These are present on all the new generation GPUs, though they are available in greater numbers on the higher-end cards, making them better suited to both ray tracing acceleration and deep learning super sampling (DLSS) frame rate enhancement.

The new RT cores bring with them a number of improvements which have the potential to make the RTX 40-series far more capable when it comes to rendering ray tracing in games. It includes a new Opacity Micromap Engine which simplifies the calculations required when dealing with rays passing through transparent objects by breaking up those objects into individual triangles that are either transparent, partially transparent, or opaque. The new RT cores then only need to produce additional calculations based on the transparent sections, rather than the entire object.

Other improvements were made to the way Lovelace’s 3rd generation RT cores handle complicated tessellation, and shader execution reordering ensures that ray tracing threads are reordered so that they are more efficient, leading to additional performance improvements in ray tracing heavy games.

The new Tensor cores support a new generation of DLSS, known as DLSS 3, that introduces frame generation technology, enhanced by a new Optical Flow accelerator (OFA). The OFA is designed to offload intensive tasks to the appropriate hardware on the GPU. So, for ray tracing tasks, it can funnel them to the RT cores, freeing up the CUDA cores for more general rendering tasks that they are better suited for. Where previously, however, portions of the OFA’s pipeline had to be completed by the compute engine, with the Lovelace architecture, the OFA algorithms are mostly handled by dedicated hardware, thereby preventing demand on the CUDA cores from impacting ray tracing performance in the same way.

Because of these additional enhancements which make ray tracing and DLSS so much more effective on Lovelace GPUs, DLSS 3 and its frame generation techniques are unlikely to be backported to older NVIDIA architectures, making it one of the specific features that may be worth upgrading to the 40-series GPUs for.

The RTX 4090, 4080, and 4070 Ti come without any kind of NVLink connector for dual-GPU operation, putting the final nail in the coffin of NVIDIA’s classic SLI multi-GPU feature. They support the latest Dual NVENC encoder with 8K 10-bit support, as well as the latest versions of OpenCL, OpenGL and Vulkan.

All three of the new RTX 40-series graphics cards have three DisplayPort 1.4a connectors, and a single HDMI 2.1 connector. This is comparable to previous-generation NVIDIA cards, though the USB-C VirtualLink port from the NVIDIA RTX Turing generation of 20-series GPUs remains absent. This configuration of ports and connectors is somewhat distinct from the competition’s latest graphics cards, but both have the ports and bandwidth available to play games at ultra-high definition and high frame rates.

The RTX 4090 and RTX 4080 are gigantic cards. The reference design RTX 4090 measures almost 12 inches long, and at almost 5.4 inches wide it is going to give some cases clearance issues. Its cooler is also more than triple slot in some configurations, so you’re going to lose a lot of PCI Express slots with these cards. The RTX 4080 is smaller with some add-in-board partners, but most are comparable and the reference design is just as big as the 4090.

Make sure these cards fit in your PC case before buying.

The RTX 4070 Ti, fortunately, is more modest and has dimensions much more in line with a typical high-end graphics card. It measures 11.2 inches long and 4.4 inches wide, so it’s not a small card by any means, but clearance issues are unlikely to be a problem for it in quite the same way as the RTX 4090 and RTX 4080.

RTX 4090 and RTX 4080 Specifications

It’s not always the case that specification tables can reveal a lot about a new generation of graphics cards, but in the case of the new RTX 40 cards, there’s a lot to talk about.

| | RTX 4090 | RTX 4080 | RTX 4070 Ti | RTX 3090 Ti | RTX 3090 | RTX 3080 Ti | RTX 3070 Ti |
|---|---|---|---|---|---|---|---|
| Architecture | Ada Lovelace | Ada Lovelace | Ada Lovelace | Ampere | Ampere | Ampere | Ampere |
| Process node | 4nm TSMC | 4nm TSMC | 4nm TSMC | 8nm Samsung | 8nm Samsung | 8nm Samsung | 8nm Samsung |
| CUDA cores | 16384 | 9728 | 7680 | 10752 | 10496 | 8704 | 6144 |
| Ray tracing cores | 144 | 76 | 60 | 84 | 82 | 68 | 48 |
| Tensor cores | 576 | 304 | 240 | 336 | 328 | 272 | 192 |
| Base clock speed | 2,235 MHz | 2,205 MHz | 2,310 MHz | 1,560 MHz | 1,395 MHz | 1,440 MHz | 1,575 MHz |
| Maximum clock speed | 2,520 MHz | 2,505 MHz | 2,655 MHz | 1,860 MHz | 1,695 MHz | 1,710 MHz | 1,770 MHz |
| Memory size | 24GB GDDR6X | 16GB GDDR6X | 12GB GDDR6X | 24GB GDDR6X | 24GB GDDR6X | 10GB GDDR6X | 8GB GDDR6X |
| Memory speed | 21 Gbps | 21 Gbps | 21 Gbps | 21 Gbps | 19.5 Gbps | 19 Gbps | 19 Gbps |
| Bus width | 384-bit | 256-bit | 192-bit | 384-bit | 384-bit | 320-bit | 256-bit |
| Bandwidth | 1,008 GBps | 912 GBps | 504 GBps | 1,018 GBps | 936 GBps | 760 GBps | 608.3 GBps |
| Total Board Power (TBP) | 450W | 320W | 285W | 450W | 350W | 320W | 290W |

Note: The above specifications are for the NVIDIA Founders Editions of the cards. They’re slightly different from the specs of the cards used in this review, but the only material differences are a 100MHz higher boost clock on the 4080, an additional 40MHz of boost clock on the 4090, and an extra 45MHz on the 4070 Ti’s boost clock.

Inter-generationally, the RTX 40-series has made some big advances over even the best last-generation Ampere graphics cards. The RTX 4070 Ti has a far greater number of CUDA cores than its last-generation counterpart. That, combined with a near 1GHz increase in boost clock speed, should make the 4070 Ti dramatically more powerful than its predecessor. As is common with GPU generations, too, the 4070 Ti enjoys a higher clock speed than both the RTX 4090 and RTX 4080. That will help make up some of the disparity in CUDA core counts, but it’s unlikely to close the gap significantly.
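To put rough numbers on how core counts and clock speeds combine, here is a minimal sketch that estimates theoretical peak FP32 throughput. It assumes the common rule of thumb that each CUDA core can complete one fused multiply-add (two floating-point operations) per clock at boost speed. The function name `peak_fp32_tflops` is made up for this illustration, and the figures are paper maximums, not measured performance.

```python
# Rule-of-thumb assumption: one fused multiply-add (2 FLOPs) per CUDA core per clock.
FLOPS_PER_CORE_PER_CLOCK = 2


def peak_fp32_tflops(cuda_cores: int, boost_mhz: float) -> float:
    """Return theoretical peak FP32 throughput in TFLOPS."""
    flops = cuda_cores * FLOPS_PER_CORE_PER_CLOCK * boost_mhz * 1e6
    return flops / 1e12


# (CUDA cores, boost clock in MHz) from the Founders Edition specifications.
cards = {
    "RTX 4090": (16384, 2520),
    "RTX 4080": (9728, 2505),
    "RTX 4070 Ti": (7680, 2655),
    "RTX 3070 Ti": (6144, 1770),
}

for name, (cores, boost) in cards.items():
    print(f"{name}: {peak_fp32_tflops(cores, boost):.1f} TFLOPS (theoretical)")
```

On paper, the 4070 Ti’s higher clock narrows the gap to the 4080 but does not close it, which matches the expectation above. Real games rarely scale this cleanly, which is why the benchmarks matter more than this estimate.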

The RTX 4070 Ti has more and faster memory than its predecessor, which should make it far more future proofed for upcoming games, and make it much more capable of 4K gameplay in older titles. The overall bandwidth does fall, however, with that 192-bit bus on the 4070 Ti really pulling back on its memory performance more than its greater quantity and speed would suggest. The slight drop in TDP as a result is welcome, especially considering the impressive increase in core counts and clock speed. It seems that the drop to a 4nm process node made a big difference for the XX70 tier of cards.
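The bandwidth drop follows directly from the bus width. As a quick sketch, peak memory bandwidth can be estimated as the bus width multiplied by the per-pin data rate, divided by eight to convert bits to bytes. The helper name `memory_bandwidth_gbps` is hypothetical, and the results are theoretical peaks rounded to whole numbers.

```python
def memory_bandwidth_gbps(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: bus width (bits) x per-pin rate (Gbit/s) / 8."""
    return bus_width_bits * data_rate_gbps / 8


# Bus width and memory speed for the two XX70 Ti cards, from the specifications.
rtx_4070_ti = memory_bandwidth_gbps(192, 21)
rtx_3070_ti = memory_bandwidth_gbps(256, 19)

drop_percent = (1 - rtx_4070_ti / rtx_3070_ti) * 100

print(f"RTX 4070 Ti: {rtx_4070_ti:.0f} GB/s")
print(f"RTX 3070 Ti: {rtx_3070_ti:.0f} GB/s")
print(f"Generational drop: {drop_percent:.1f}%")
```

Even with memory that runs 2 Gbps faster per pin, the narrower 192-bit bus leaves the 4070 Ti with roughly a sixth less theoretical bandwidth than the 3070 Ti.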

The RTX 4080, on the other hand, very much appears like a typical generational upgrade, with core counts that build off of its last-gen namesake, and much higher clock speeds. It borrows the faster memory from the 3080 Ti, although, like the 4070 Ti, its memory bus holds it back from having a comparable overall bandwidth, and its 320W TDP is lower compared to the 3090 Ti and RTX 4090.

The new-generation kingpin, the RTX 4090, however, is in a league of its own. The 4090 has close to 70% more CUDA cores than the 4080 and a boost clock speed that’s actually higher (launch-day flagship cards often have lower clock speeds than their more modest counterparts). Memory bandwidth still doesn’t quite match the 3090 Ti, but at over 1,000 GBps, it’s unlikely to hold the 4090 back in any capacity, especially if you can overclock it.

There’s also another giant leap in the count of Tensor and RT cores, which should give the RTX 4090 a huge lead in ray tracing performance – especially when DLSS is enabled. It may also be able to make much better use of the new DLSS 3.0 frame generation feature than the RTX 4080 and 4070 Ti, but we’ll have to see with real-world testing to be sure. All three of the cards got the new generation Tensor cores and more of them, so DLSS in all its forms should be much more impressive this generation – not that cards this powerful should necessarily need it, but the option will be there in the most demanding of games, and it’ll help extend the life of these cards past this generation when new cards and more demanding games become available.

Just as intriguing as the potential performance this generation, though, are the power demands. Where the RTX 4070 Ti actually manages to drop a little in TDP over its predecessor, and the RTX 4080 takes full advantage of the improved efficiency of Lovelace to deliver more cores and higher clocks than the 3080 Ti, but at the same power draw, the RTX 4090 is an entirely different beast. It has the same Total Board Power (TBP) as the 3090 Ti, at 450W, making it one of the most power-hungry cards ever made, and one that’s likely to literally heat up the rooms it’s in. It gives you a lot for those power and thermal demands, and represents a huge upgrade over the 3090 Ti on the specs table, but 450W is an awful lot of power, and an awful lot of heat to kick out in turn.

To deliver all of that power to these new cards, NVIDIA used a new 16-pin PCIe 5 power connector which is only natively supported by a few of the latest power supplies. Fortunately, every one of these cards comes with a 12VHPWR adapter that converts two, three, or four 8-pin PCI Express power cables (depending on your card) to the new mini 16-pin connector.

To make sure that your adapter works well for years to come, ensure that it is properly plugged in at every connection point, and that it isn’t bent or pressed close to any other heat generating components.

The big advantage of this new 16-pin power connector is how much power it can deliver: 600W all by itself. That, combined with the 75W that can be delivered through the PCI Express x16 slot, gives huge scope for these cards to be overclocked, and for further advanced versions of these cards to come from manufacturers looking to really push the envelope.
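For a sense of how much scope that leaves, the sketch below subtracts each card’s Total Board Power from the combined 675W available through the connector and the slot. The helper `power_headroom_w` is a made-up name for this illustration. Treat the result as an upper bound on paper: the adapter bundled with a given card, its cooler, and its firmware power limits may all cap it lower.

```python
CONNECTOR_LIMIT_W = 600  # 16-pin power connector
SLOT_LIMIT_W = 75        # PCI Express x16 slot


def power_headroom_w(total_board_power_w: int) -> int:
    """Watts left between a card's stock TBP and the combined delivery limit."""
    return CONNECTOR_LIMIT_W + SLOT_LIMIT_W - total_board_power_w


# Stock Total Board Power figures from the specifications.
stock_tbp = {"RTX 4090": 450, "RTX 4080": 320, "RTX 4070 Ti": 285}

for name, tbp in stock_tbp.items():
    print(f"{name}: {power_headroom_w(tbp)}W of theoretical headroom")
```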

How fast are the RTX 4090, RTX 4080 and RTX 4070 Ti?

The only way to truly know how fast these Ada Lovelace GPUs are is to put them through their paces in real world benchmarks. To that end, I built as powerful a modern PC as I could muster, using the following hardware.

- CPU: Intel Core i9 13900K

- Motherboard: ASUS Prime Z790-P WiFi

- RAM: 32GB DDR5 Corsair Vengeance 5200MHz C42

- Graphics: ASUS TUF Gaming RTX 4090 / MSI Suprim X RTX 4080 / MSI Ventus 3X RTX 4070 Ti

- Storage: 2TB Western Digital SN770 NVMe SSD

- PSU: Corsair RM 850X

- Operating System: Windows 11 22H2

The memory was loaded up using its XMP profile for maximum performance, and the 13900K was undervolted with a 0.08V offset to prevent it from thermally throttling. If you’ve seen our recent coverage of the Raptor Lake range you’ll know we ran into some difficulty with even a 360mm AIO not managing to handle all of its heat. That problem is now behind us, and the 13900K is raring to go in helping the 4090 break all sorts of Micro Center performance records.

The Windows install was fully updated before testing began, and the graphics drivers used were the latest NVIDIA first-party releases available at the time. In the case of the RTX 4090 and RTX 4080, that was WHQL 527.56. For the RTX 4070 Ti, which we tested a little later, it was the WHQL 528.49 driver.

The graphics cards used are third-party releases from board partners, so aren’t completely indicative of NVIDIA’s reference Founders Editions versions. Cooling, power usage, and clock speeds will be slightly different, but should still give a good general idea of how well these new generation cards perform. These tests were also performed on an open-air test bench, so temperatures and clock speeds may be slightly higher than you would see if these cards were placed inside a typical gaming PC. Systems with strong overall cooling, however, should be able to replicate these results easily enough.

Synthetic Benchmarks

One of the best ways to compare graphics cards is to run them through a synthetic benchmark. These aren’t exact representations of real-world tasks, but they are close approximations that are easily repeatable. That doesn’t give us the whole picture, but they give clear results which you can enjoy pushing further and further with GPU tweaking, if that's something you're into.

Ultimately, I used Unigine Superposition benchmarks to put our new Lovelace GPUs through their paces.

If you’re looking for real-world tests, further down you’ll find professional software benchmarks, gaming benchmarks, and analysis of these cards’ thermal and overclocked performance.

Unigine Superposition

The latest in a line of iconic benchmarks from Unigine, Superposition follows on from Valley and Heaven benchmarks to provide modern GPUs with a range of runs to test what they are really capable of. It’s entirely free, although the paid-for version does have some additional testing options that you can take advantage of.

For testing the new RTX 40 GPUs, I used the Optimized 4K and Optimized 8K benchmark presets, using the default settings.

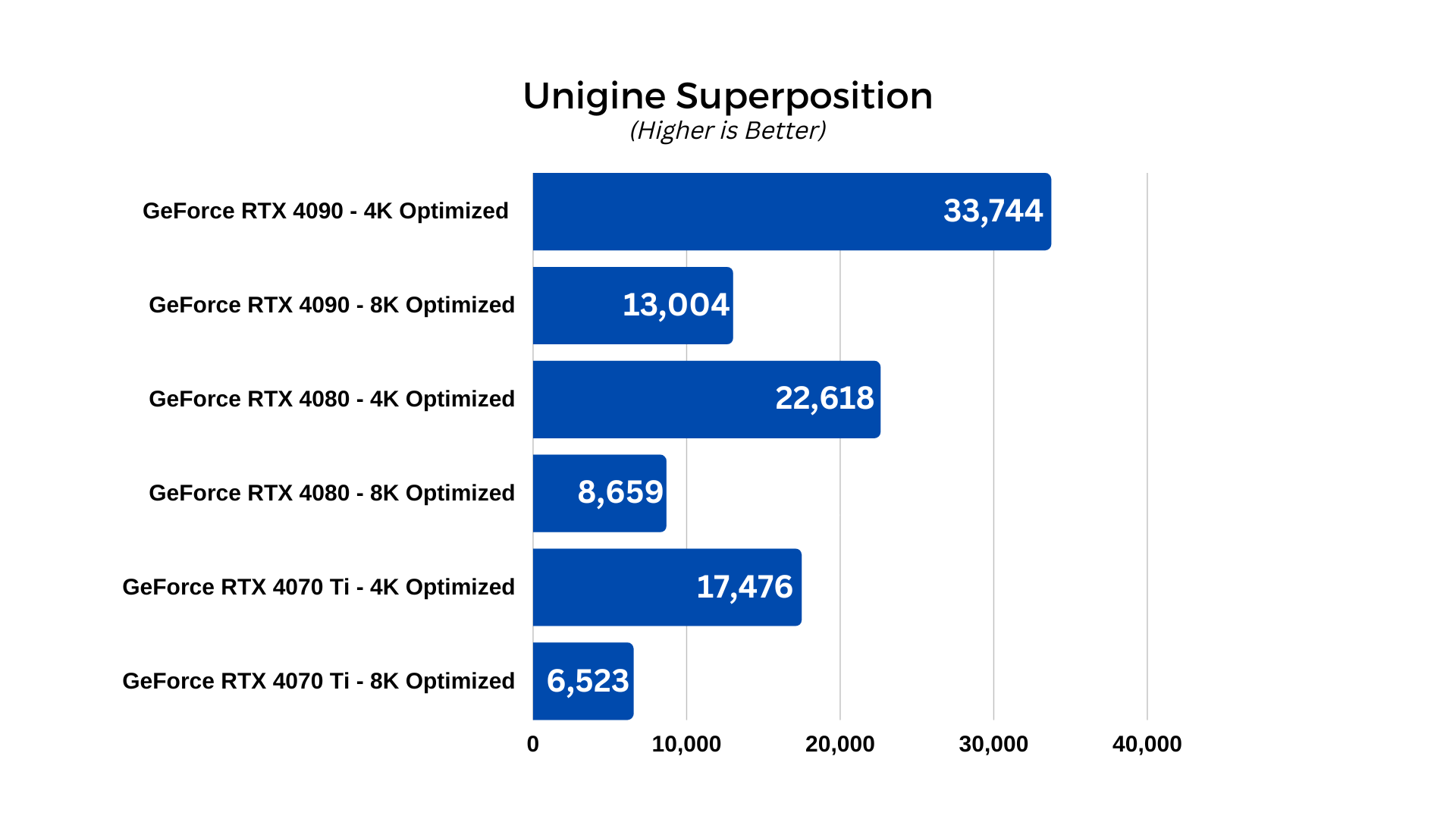

The Superposition benchmark proves that the 3DMark Time Spy results weren’t a fluke. In fact, they cement the RTX 4090 firmly as the king of the NVIDIA lineup and by quite some margin. It managed a score of 33,744 at 4K, and a staggering 13,004 at 8K. The RTX 4080 put up a good fight with scores of 22,618 and 8,659, respectively, scores that would place it at the top of the leader boards if not for its bigger brother.

Both cards seem poised to be fantastic for 4K gaming – and potentially overkill for anything else – but it’s the RTX 4090 that continues to show itself as something beyond next-generation.

As for the RTX 4070 Ti, it might not be designed for 4K gaming specifically, but it proved its worth in the Superposition benchmark. It managed a 4K Optimized score of 17,476, and an 8K Optimized score of 6,523 points. Both are excellent scores, though they do fall behind some of the top cards from the competition, suggesting that this card will likely shine at its brightest when spitting out high frame rates when rendering games at 1440p.

Professional Applications

If you’re looking to do more work than play with your RTX 40-series GPU, then it’s a good idea to know how it will perform in certain applications. While we can’t test every program that can leverage a GPU’s parallel processing capabilities for rendering and 3D CAD work, I selected a number of benchmarks that I think give a good impression of just how capable these new cards are at professional workloads.

The benchmarks used for these tests were as follows:

- SpecViewPerf 2020 3.1

- Blender 3.3

- V-Ray

SpecViewPerf

SpecViewPerf 2020 3.1 is the latest in a long line of graphics benchmarks that are designed to replicate the kind of workloads a GPU might face in a professional setting. This involves 3D graphics design work, and uses both OpenGL and DirectX application programming interfaces to provide a comprehensive look at what a GPU is capable of when it’s not being used for gaming.

If you plan to do any professional work with your RTX 4000 GPU, these are the results that will matter to you, and with the new AI enhancements through advanced tensor cores, not to mention the higher clock speeds and increased CUDA core counts, these new 40-series cards should be the best yet for accelerating professional workloads.

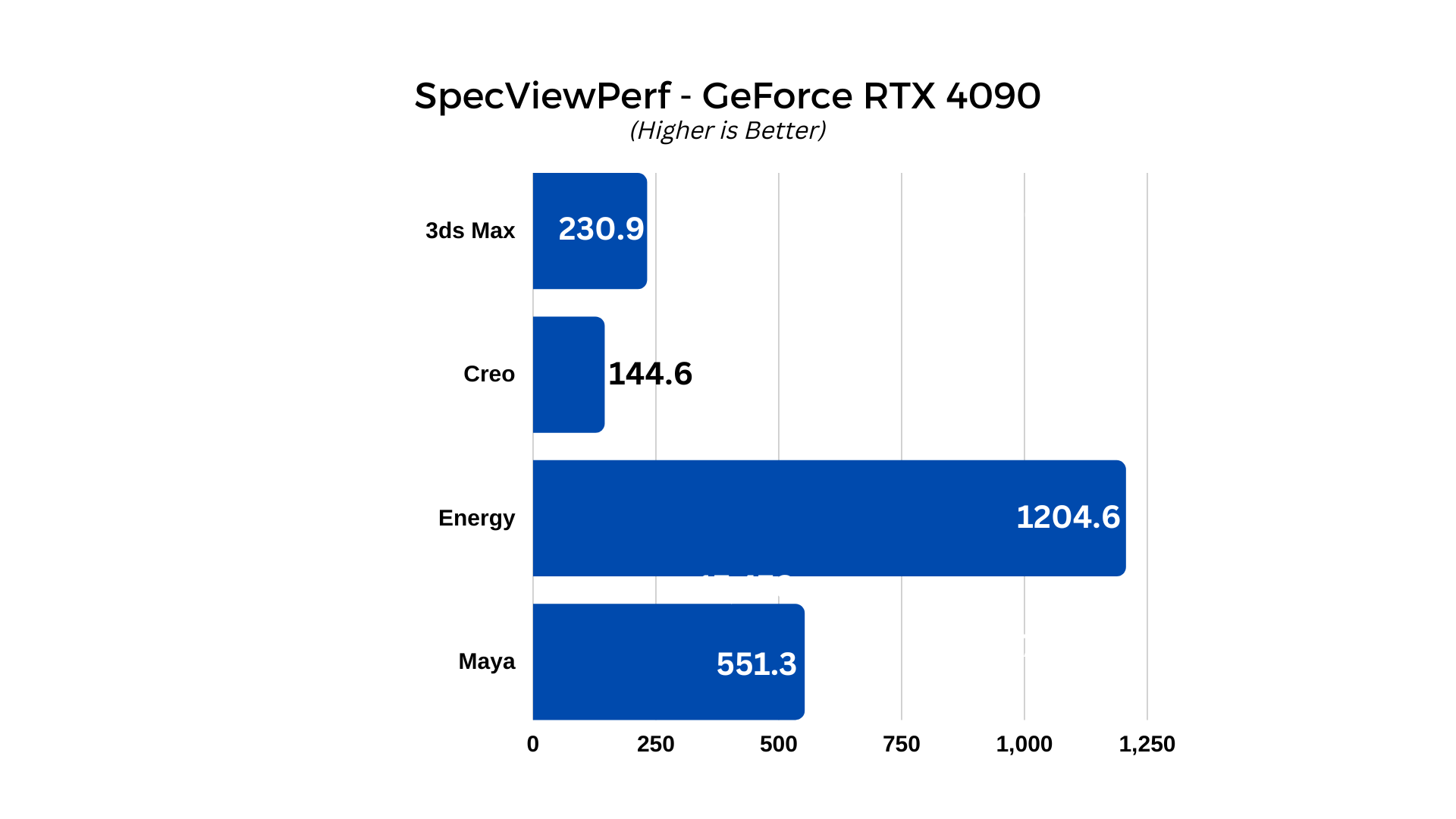

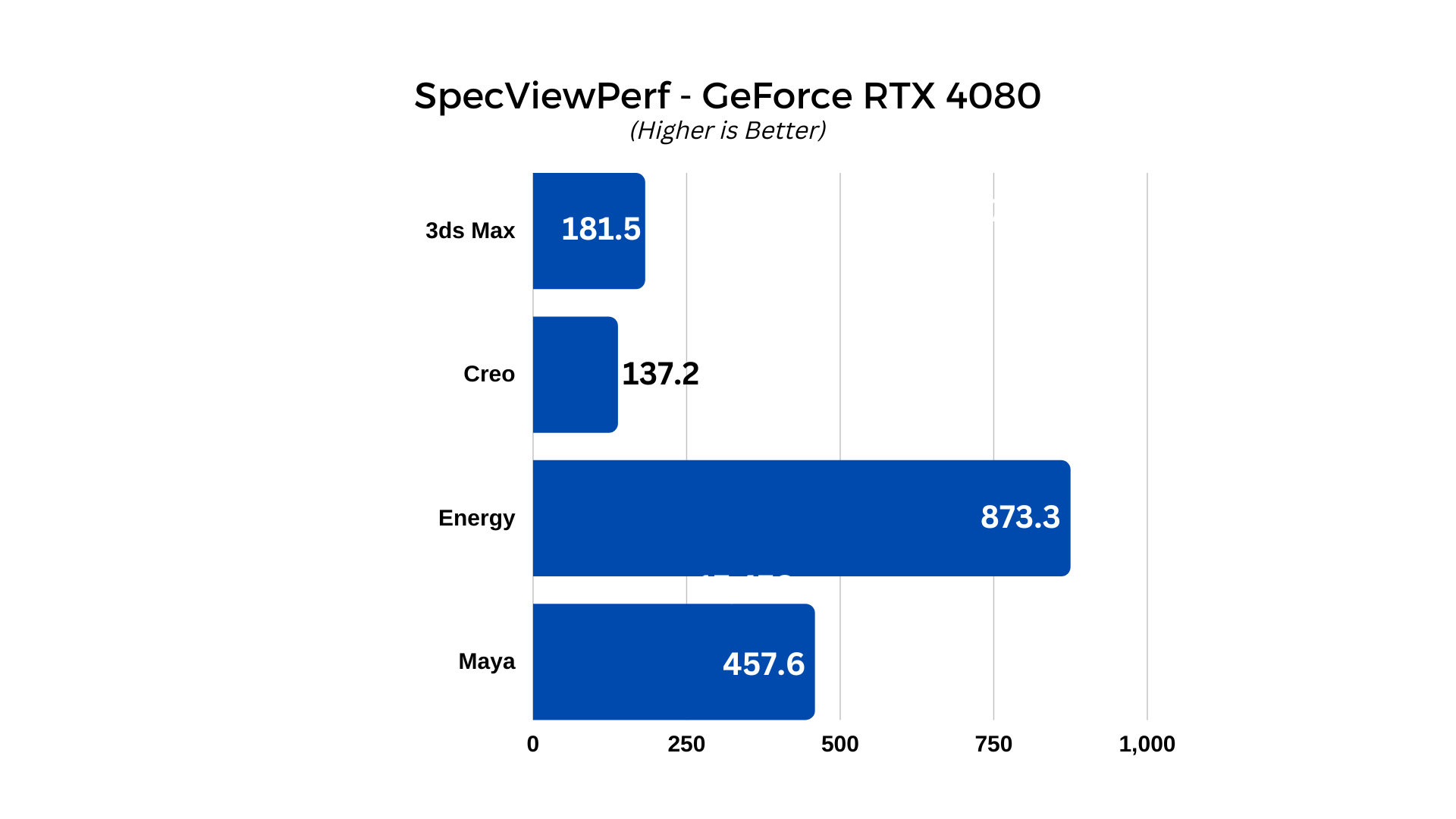

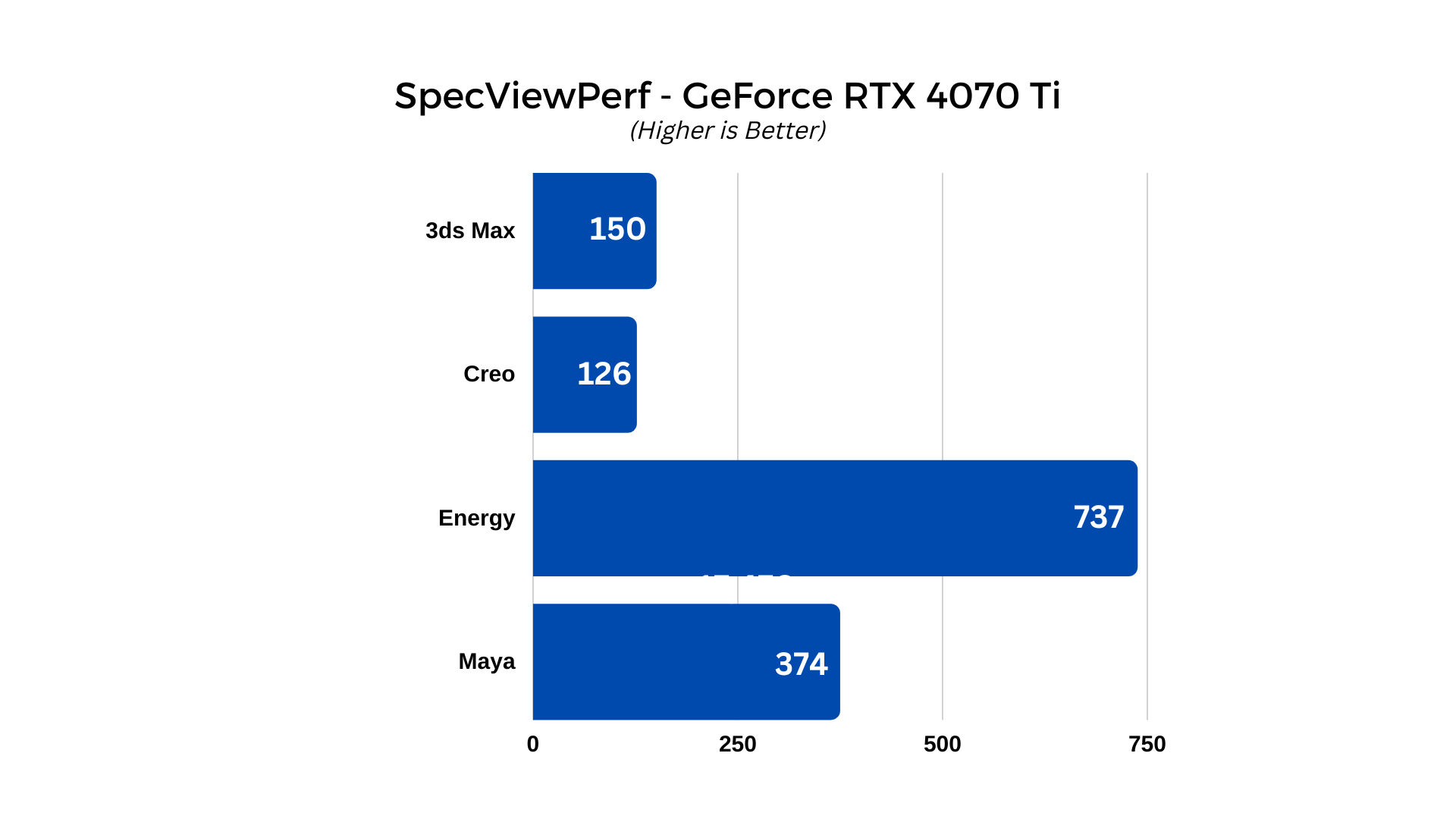

The viewsets used for this comparison review were 3ds Max, Creo, Energy, and Maya, all at 4K (3,840 x 2,160) resolution. All settings were left at default, otherwise.

All three cards performed fantastically in this benchmark, suggesting that there’s a very good reason to upgrade your GPU if you plan to use it for these kinds of professional workloads. Again, however, the RTX 4090 is a real standout.

The RTX 4090 managed scores in 3ds Max, Creo, Energy, and Maya, of: 230.9, 144.6, 1204.6, and 551.3, respectively. That’s so far ahead of the last generation it’s genuinely shocking, almost doubling the performance of the 3090 Ti despite the two cards having identical power demands. That shows what a gigantic advance the RTX 4090 really makes over its predecessors.

The RTX 4080 really does well too, though, offering impressive scores of 181.5, 137.2, 873.3, and 457.6. It doesn’t blow its predecessors out of the water in the same way, but it’s still far faster and easily worth upgrading to if the speed you complete your work at will earn you more money. You’ll just see a much more notable upgrade with the 4090 and at less of a budget-busting price, considering the performance.

The RTX 4070 Ti might bring up the rear in this comparison, but it’s no slouch and readily competitive with the best graphics cards from the previous generation, and even some of the top ones from the latest. It had 3ds Max, Creo, Energy, and Maya scores of 150, 126, 737, and 374, respectively. It’s not necessarily going to be worth the upgrade for these sorts of tasks alone if you’re running a top last-generation card, but if you have something older, or could benefit from the 4070 Ti in other ways, it looks capable of delivering impressive acceleration for these kinds of professional workloads.

Blender

Blender is one of the most popular 3D computer graphics toolsets for creating animations, models, and visual effects. It’s a tool that augments all kinds of digital industries, and all of them can take advantage of its hardware acceleration using an NVIDIA graphics card. Although any old GPU will do to speed up some of its renders, the latest and greatest GPUs are always the most impressive, and the same goes with the new RTX 40 series.

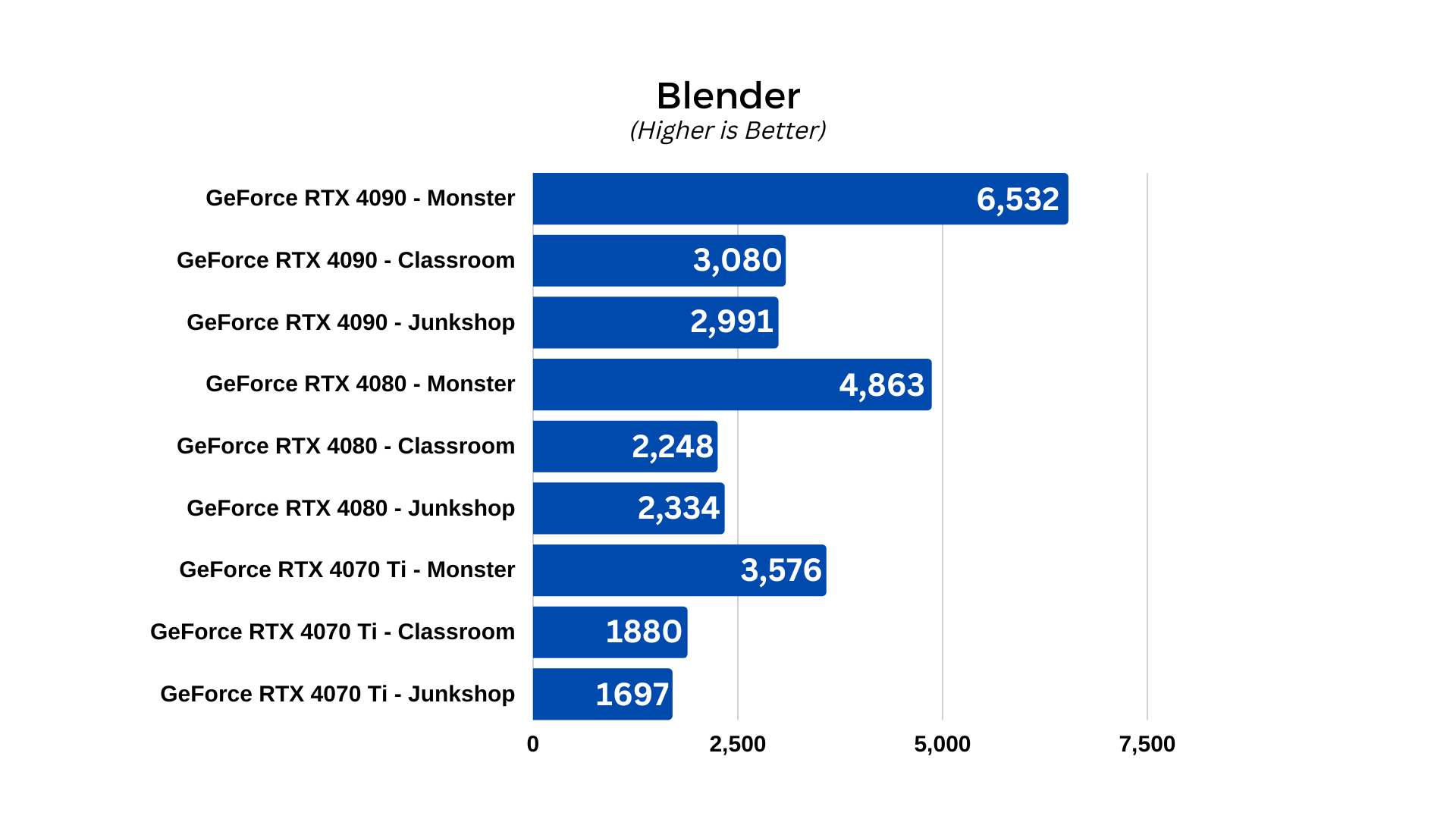

To test how capable the RTX 4090, RTX 4080, and RTX 4070 Ti are in Blender, I used the standardized Blender Benchmark to run its three test suites, all at stock settings:

- Monster

- Classroom

- Junkshop

There’s no huge surprise here that the RTX 4090 performs particularly well in Blender, but there are some interesting notes to take from how well the RTX 4080 competes in at least one of the tests. The 4090 steams ahead in Monster, managing 6532 to the 4080’s comparably pedestrian 4863 points, a difference of 34%. It does it again in Classroom, where it managed 3080 to the 4080’s 2248, a difference of 37%.

However, that gap is narrowed in Junkshop, where the 4090 is “only” 28% ahead, with a score of 2991, to the 4080’s 2334. That suggests that the 4080 may be capable of closing that performance gap more in certain workloads, so that’s worth bearing in mind if you primarily want a new GPU for Blender work. Both cards blow the last generation’s bests out of the water, though, making it very worthwhile upgrading if you are a regular Blender user.
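The leads quoted above can be reproduced with a couple of lines of arithmetic. This is just a sketch using the Blender Benchmark scores reported in this review. The helper name `percent_lead` is made up for illustration, and results are rounded to the nearest whole percent.

```python
def percent_lead(faster_score: float, slower_score: float) -> float:
    """How far ahead the faster score is, as a percentage of the slower score."""
    return (faster_score / slower_score - 1) * 100


# (RTX 4090 score, RTX 4080 score) for each Blender Benchmark scene.
blender_scores = {
    "Monster": (6532, 4863),
    "Classroom": (3080, 2248),
    "Junkshop": (2991, 2334),
}

for scene, (rtx_4090, rtx_4080) in blender_scores.items():
    print(f"{scene}: RTX 4090 leads by {percent_lead(rtx_4090, rtx_4080):.0f}%")
```

The same helper works for any pair of scores in this review where higher is better, which makes it easy to check how the gap between two cards shifts from one workload to the next.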

As for the RTX 4070 Ti, it proved itself capable, but, as expected, not as powerful as its older siblings. It scored 37,576, 1,880, and 1,697 in Monster, Classroom, and Junkshop, respectively. That puts it well ahead of the competition and it’s competitive with some of the more powerful cards from the last-generation. Still, you can see the disparity in CUDA cores really holding it back from hitting those higher scores – especially when compared with the RTX 4090.

V-Ray

The final synthetic, professional benchmark we’re taking a look at is V-Ray. V-Ray is a plug-in for 3D graphics software applications that uses global illumination to generate realistic looking lighting, including path tracing, and photon mapping. It works with a range of applications, including Cinema 4D, 3ds Max, Maya, and Unreal Engine, among others.

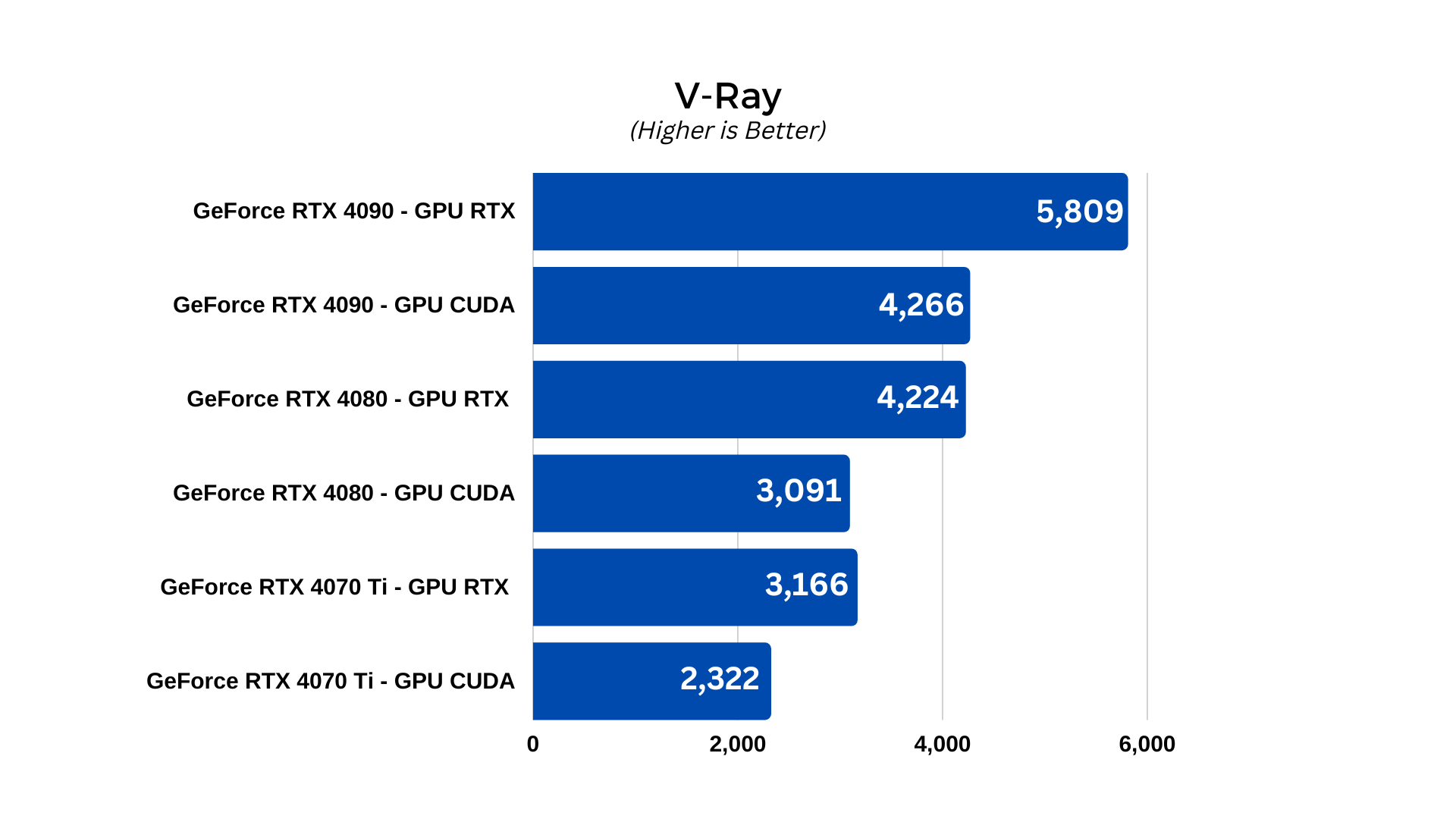

The V-Ray benchmark has the GPU render a detailed raytraced scene as quickly as possible, giving it a score that represents its capabilities within the application. For this test I ran the GPU CUDA and GPU RTX benchmarks and noted down the scores for each card.

The RTX 4070 Ti delivered a score of 2,322 in the GPU CUDA benchmark, and 3,166 in the RTX test, making easy work of this typically demanding benchmark. That’s a near 100% jump over its predecessor, and a more than 50% increase over the best from the last generation. The RTX 4070 Ti might not quite measure up to its bigger brothers, but it is a decidedly meaty upgrade over everything that came before.

The RTX 4080 managed scores of 3,091 and 4,224 for the CUDA and RTX tests, respectively, showing how capable it is at leveraging its new architecture and cores to blow through these scenes with ease. As expected, however, the RTX 4090 is everything the 4080 is and much more. It managed scores of 4,266 and 5,809, respectively, cementing it as the killer workstation GPU of its generation.

Gaming Benchmarks

Synthetic and professional benchmarks are great for understanding the raw capabilities of a card, but for gamers who want to see how they perform when facing uneven and inconsistent workloads, different engine requirements, and the pressures of long-term loads over lengthy gaming sessions, there’s only one way to find out how good the cards are: test them in real games.

Fortunately, there are a range of modern games which have their own built-in benchmarks, so I'll be using a few of them at a range of settings to see just how good these new NVIDIA GPUs are, as well as running them through a manual benchmark of arguably one of the best-looking games available today.

Since these are very high-end graphics cards, I skipped over the typical 1080p resolution testing – playing at that resolution with any of these cards would leave you completely CPU bound, wasting masses of GPU power. Instead, we'll be looking at these GPUs at various detail settings at 1440p and 4K resolution. I also included raytracing testing where applicable, and looked at the effect of both DLSS and the new DLSS 3.0 exclusive feature, frame generation, to see what the new-generation tensor cores on the 40-series GPUs can really do.

The games used for testing these new cards were:

- Metro Exodus: Enhanced Edition

- Shadow of the Tomb Raider

- Red Dead Redemption 2

- Cyberpunk 2077

- A Plague Tale: Requiem

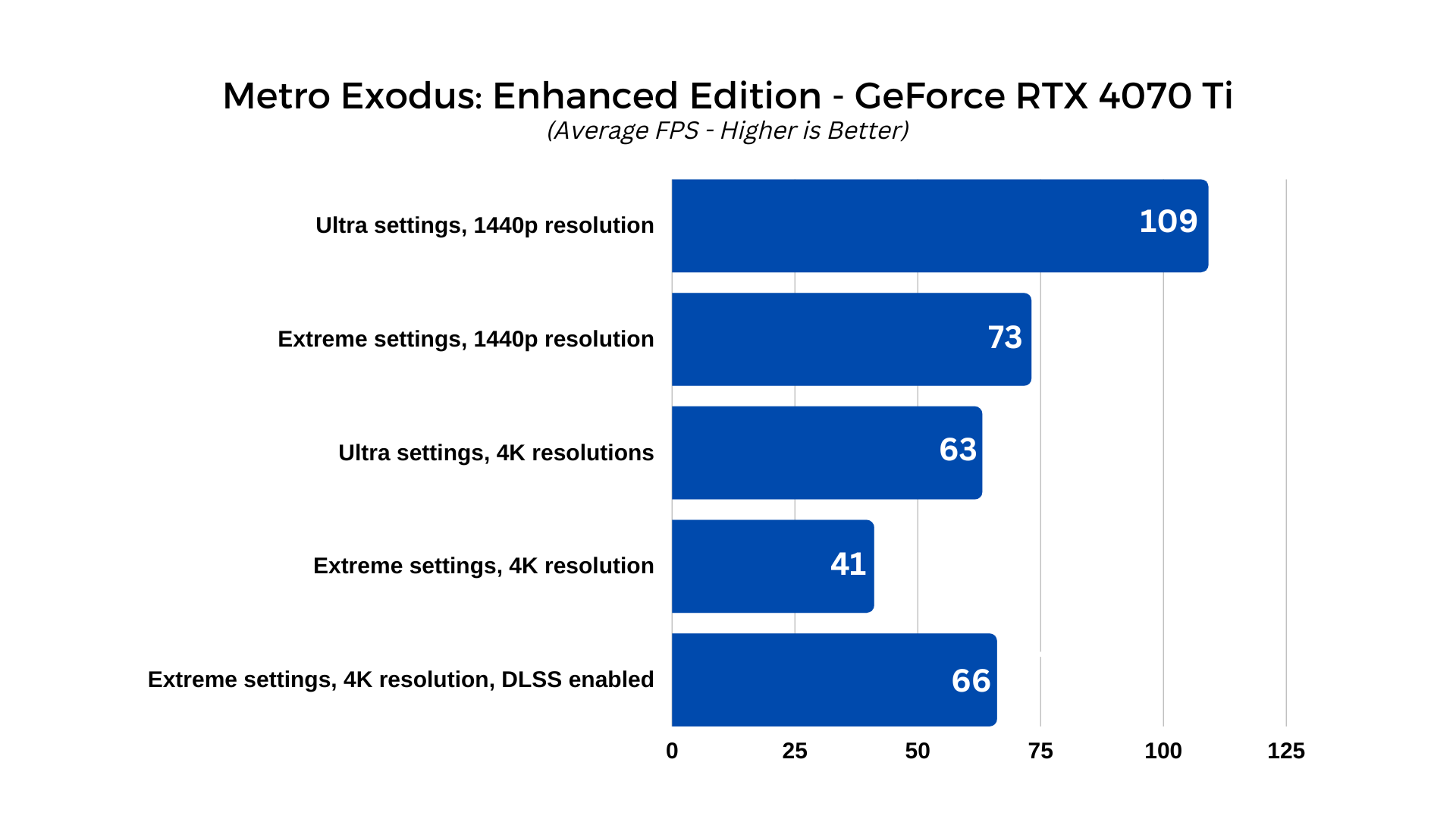

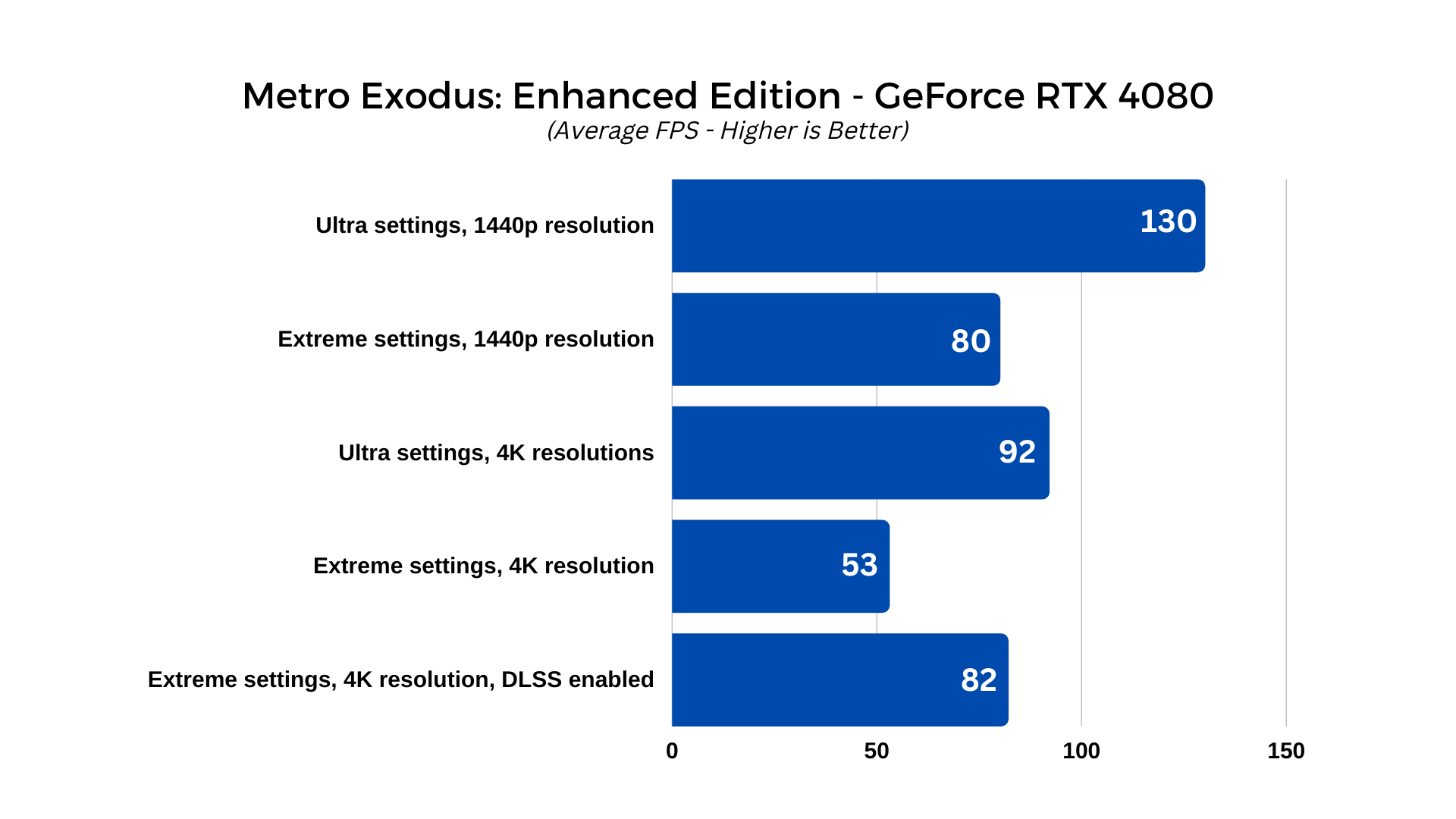

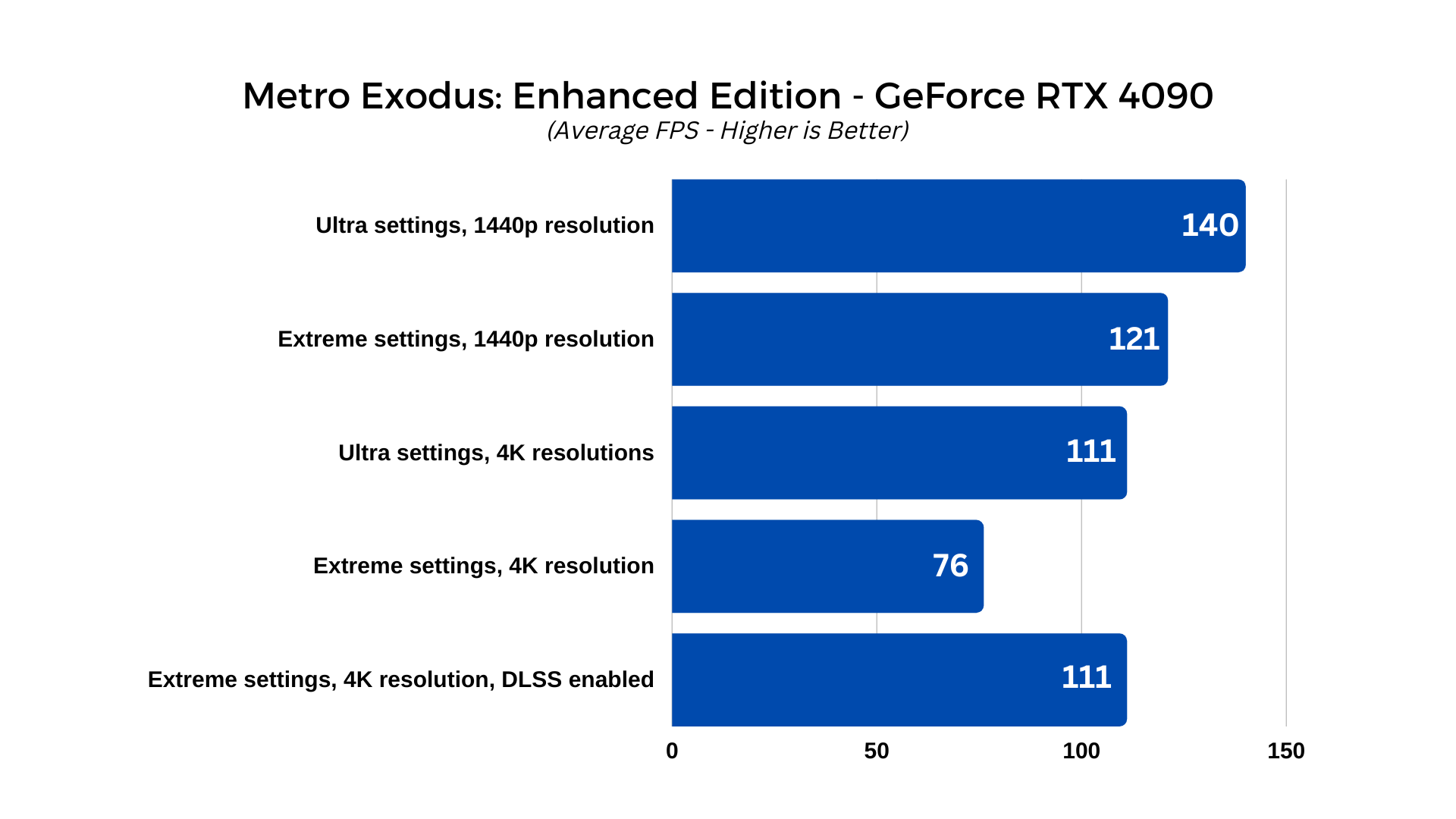

Metro Exodus: Enhanced Edition

Metro Exodus: Enhanced Edition is the new and improved version of one of the first globally illuminated, ray tracing games and it looks better than ever. That extra beauty comes at a higher demand on GPUs, though, and despite this game not being cutting edge any more, it’s still a real challenge for even some of the best graphics cards, so should give our 40-series cards a real run for their money.

To test this game, I used the official benchmark that comes with it, and used the preset settings at custom resolutions.

- Ultra settings, 1440p resolution

- Extreme settings, 1440p resolution

- Ultra settings, 4K resolution

- Extreme settings, 4K resolution

- Extreme settings, 4K resolution, DLSS enabled

Metro Exodus is a game that we’ve seen through recent testing isn’t affected by CPU performance, as it is extremely GPU bound and therefore should respond very well to our new-generation graphics cards. However, its global illumination ray tracing is still incredibly demanding and is enabled by default at Extreme settings, so may see these new cards struggle a little.

The RTX 4070 Ti performs very well in this game, hitting high frame rates at 1440p and 4K. In the first test with 1440p ultra, it hit minimum and average frame rates of 62 and 109 FPS, only dropping to 50 and 73 when ray tracing is enabled at Extreme settings, showing just how important that next-generation RT core design is, even if the count is lower on the 4070 Ti than other 40-series GPUs.

When we switch over to 4K, the 4070 Ti does struggle a little, but it still manages minimum and average frame rates of 43 and 63 FPS. Those are perfectly playable frame rates, and though you’ll get a smoother experience at lower detail settings, you certainly don’t have to. Running the game at 4K with Extreme settings is a bit too much for this card, unfortunately, leading to minimum and average frame rates of 16 and 41 FPS, respectively. However, you don’t need to feel like you can’t play at the highest settings with this card, as enabling DLSS improves the frame rate to a minimum of 46 FPS and an average of 66. That’s much better and means that with DLSS to hand, the 4070 Ti can play this game at any setting you like and still enjoy perfectly comfortable frame rates.

The RTX 4080 manages impressive performance results at all detail settings, but particularly at higher resolutions. At 1440p ultra, it managed minimum and average frame rates of 73 and 130 FPS, falling to 52 and 80 at 1440p Extreme settings. It comes close but doesn’t quite stay above 60 FPS once ray tracing comes into the picture. It has the same problem at 4K Ultra settings, with minimum and average frame rates of 58 and 92 FPS, respectively.

4K Extreme settings stays just about playable with 31 and 53 FPS, but if you’re going to play at those settings you’ll want to enable DLSS, as it has a practically unnoticeable effect on visual quality, whilst raising the minimum and average frame rates to 54 and 82 FPS. That’s much more playable, and arguably the best way to play this deeply atmospheric game if you have an RTX 4080.

If you buy an RTX 4090, however, you can choose what you like, but you’ll still get some of the best performance results once DLSS is enabled. At the bottom end of the spec pile, the 4090 manages minimum and average frame rates of 87 and 140 at 1440p with Ultra settings, falling to 76 and 121 FPS at Extreme settings.

Performance is pretty similar at 4K with Ultra settings, at 67 and 111 FPS, offering an option for those who prefer resolution clarity over more advanced realtime lighting. Combining the two does bring even the powerful RTX 4090 to below 60 FPS however, with a minimum and average of just 53 and 76. Enabling DLSS rescues it, sending it back to 71 and 111 FPS, respectively.

If I was playing Metro Exodus Enhanced Edition with a 4090, those are the settings I’d pick, but it’s clear that when you bring DLSS into the picture, both the RTX 4080 and 4090 can handle the most demanding settings in this already demanding game, and the RTX 4070 Ti isn’t far behind, even if you might be better off playing at 1440p with that particular card.
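To compare how much each card gains from DLSS at 4K with Extreme settings, the sketch below works out the percentage uplift in average frame rate from the figures reported above. The function name `dlss_uplift_percent` is hypothetical, and the inputs come from single benchmark runs, so treat the percentages as indicative, not precise.

```python
def dlss_uplift_percent(fps_native: float, fps_dlss: float) -> float:
    """Percentage increase in frame rate after enabling DLSS."""
    return (fps_dlss / fps_native - 1) * 100


# Average FPS in Metro Exodus: Enhanced Edition at 4K Extreme: (DLSS off, DLSS on).
metro_4k_extreme_avg = {
    "RTX 4070 Ti": (41, 66),
    "RTX 4080": (53, 82),
    "RTX 4090": (76, 111),
}

for card, (native, dlss) in metro_4k_extreme_avg.items():
    print(f"{card}: +{dlss_uplift_percent(native, dlss):.0f}% average FPS with DLSS")
```

In these runs, the relative gain is largest on the 4070 Ti and smallest on the 4090, which fits the picture of DLSS helping most where the card is struggling hardest at native resolution.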

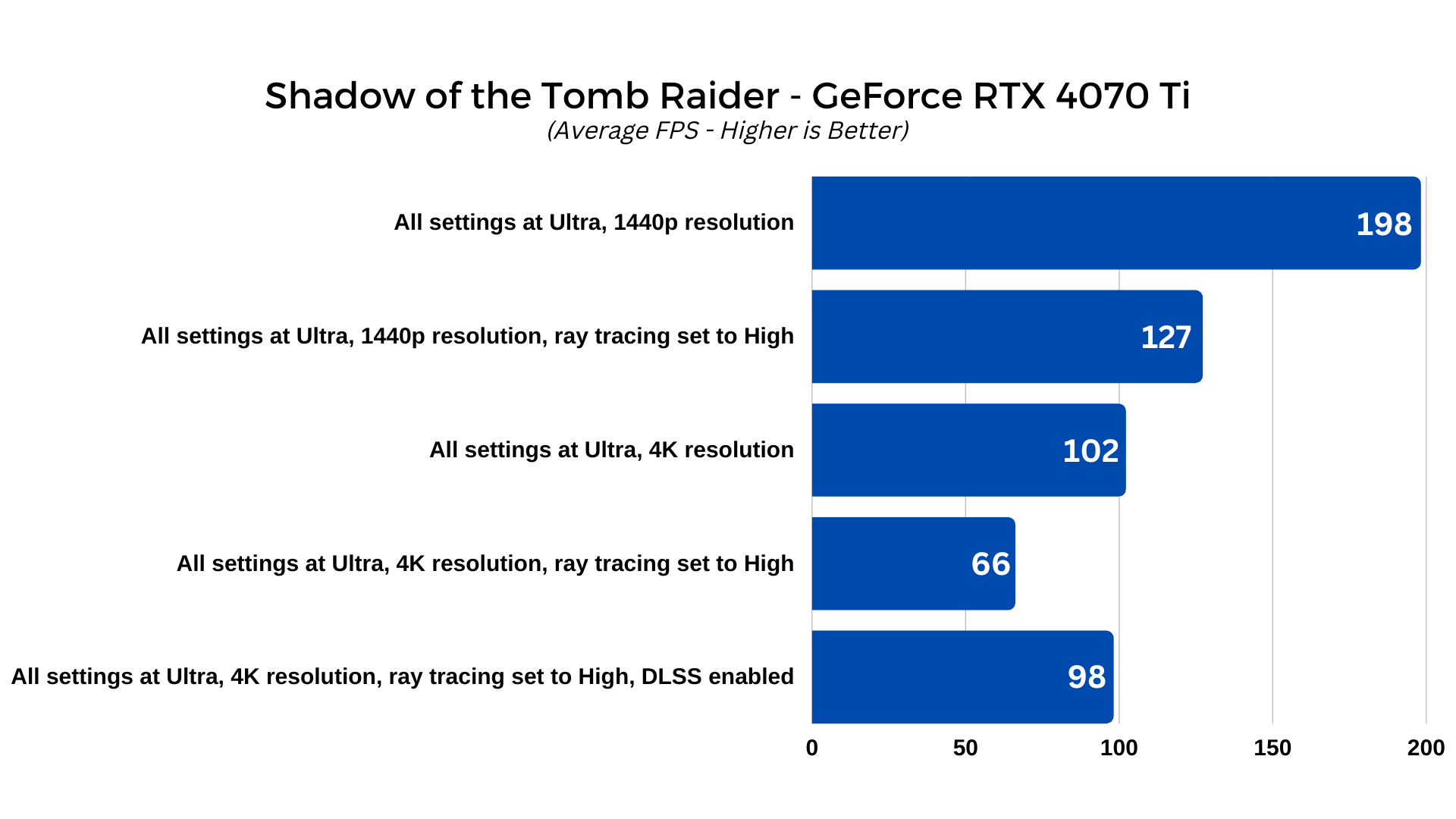

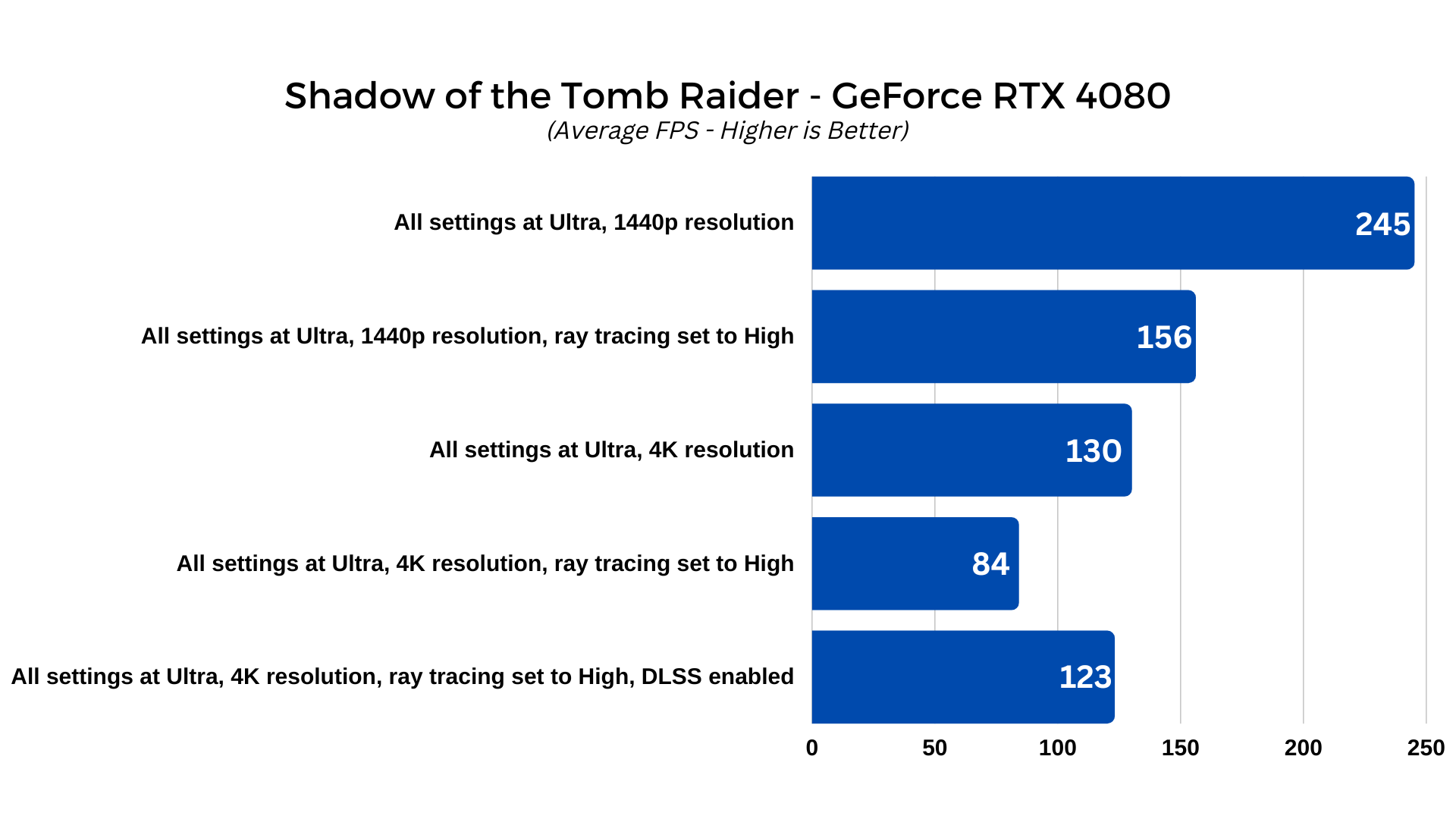

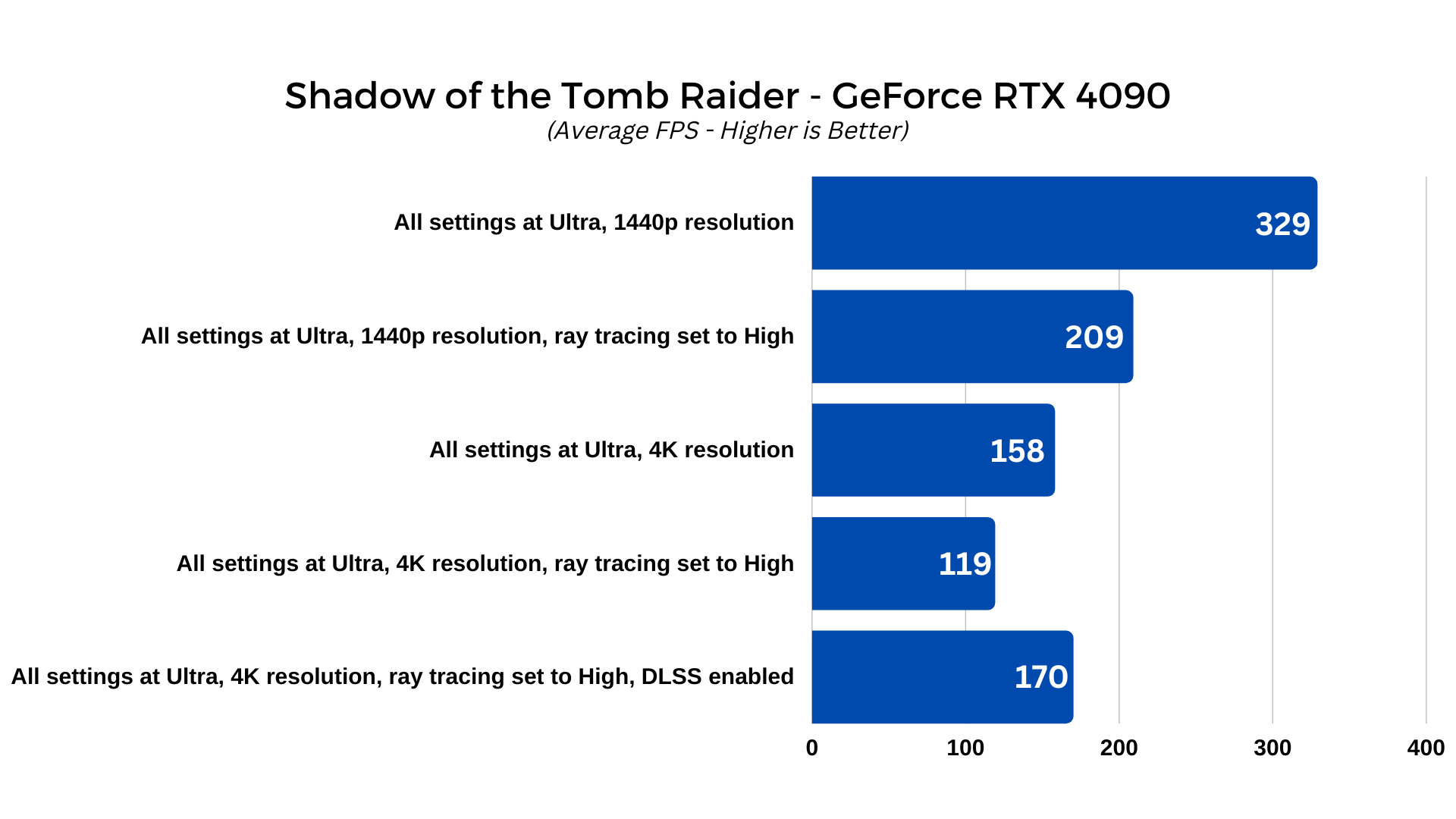

Shadow of the Tomb Raider

Shadow of the Tomb Raider is an older game, but since it scales fantastically well with both higher-powered CPUs and GPUs, it’s great for testing all manner of hardware. Its built-in benchmark throws vistas, close-up character models, and even some raytraced shadows at modern graphics cards if you enable it, and it still looks fantastic at higher resolutions.

To give our RTX 40-series cards a real workout, I ran the built-in benchmark at different resolutions, with ray tracing enabled and disabled, as well as with DLSS enabled and without. The settings were as follows:

- All settings at Ultra, 1440p resolution

- All settings at Ultra, 1440p resolution, ray tracing set to High

- All settings at Ultra, 4K resolution

- All settings at Ultra, 4K resolution, ray tracing set to High

- All settings at Ultra, 4K resolution, ray tracing set to High, DLSS enabled

The Shadow of the Tomb Raider benchmark gives you a lot of information after the run, noting both CPU and graphics performance, but I only recorded the most relevant GPU information, focusing on Average, Minimum, and 95 percentile frame rates.

The RTX 4070 Ti manages to maintain triple digit frame rate averages at all settings, although some of its minimums do fall far enough to dip below 60 FPS on a rare occasion. At 1440p without ray tracing, the RTX 4070 Ti manages minimum, 95 percentile, and average frame rates of 156, 166, and 198 FPS, respectively. That’s enough to really enjoy Shadow of the Tomb Raider on one of the newer displays with a 240Hz refresh rate, although at such high FPS, I might be tempted to push the settings higher instead.

Do so, and the 4070 Ti still stands strong. With ray tracing enabled at 1440p, it manages 91, 97, and 127 FPS, which are very much playable results. You don’t even need to fear 4K with the RTX 4070 Ti in this game, as it manages minimum, 95 percentile, and average frame rates of 85, 89, and 102 FPS, respectively. It’s only when we introduce ray tracing alongside 4K resolution that the card finally capitulates its 60+ FPS clean streak, with frame rates of 48, 51, and 66 FPS.

That’s still more than playable, though, and if you want higher frame rates, all you need to do is enable DLSS. Switching that on even while at 4K with ray tracing enabled, the 4070 Ti manages to hit 76, 79, and 98 FPS. Depending on the display you have, that’s arguably the best settings to play this game at, as it doesn’t benefit from the ultra-high frame rates of some faster-paced games in quite the same way, and the additional detail can really make a difference in more atmospheric sections of the game.

The RTX 4080 makes quick work of this game, managing to maintain over 100 FPS in all metrics in our first three tests. Its minimum, 95 percentile, and average frame rates were 191, 201, and 245 FPS, at 1440p resolution, falling to a still-respectable 113, 120, and 156 FPS when ray tracing was enabled. 4K proved slightly more demanding, even without ray tracing, with frame rates of 109, 112, and 130 FPS – so whatever detail settings you pick with an RTX 4080, you’re going to be able to get great frame rates.

If you want ray tracing at 4K, you’ll have to settle for double digit frames per second, but they’re still more than playable at 60, 65, and 84 FPS. That won’t look as smooth and fluid as the 100+ FPS runs, but the consistency there is key, as you are unlikely to see any major stuttering with a steady 60+ FPS.

If you want more, however, enabling DLSS really raises the performance to 94, 99, and 123 FPS, which for RTX 4080 owners may well be the sweet spot of high performance and high detail levels, with consistent frame rates around the 100 FPS mark.

The RTX 4090, on the other hand, is so powerful, you don’t even really need to lean on DLSS to keep its frame rates high, although those on some of the new crop of 4K 240Hz monitors might want to consider it.

At 1440p it hits absurd minimum, 95 percentile, and average frame rates of 260, 264, and 329 FPS, respectively. Add ray tracing and it’s still consistently over 150 FPS, with 154, 162, and 209 FPS. Even 4K and 4K with ray tracing aren’t enough to bring this beast of a card down, as it hits 152, 158, and 182 FPS in the former, and 86, 93, and 119 FPS in the latter.

DLSS brings it back up above 100 FPS for everything, though, with 131, 137, and 170 FPS when enabled, even at 4K with ray tracing.

The RTX 4090 feels like overkill for an ageing game like this, but if you want the ultimate performance, it’ll give it to you. The RTX 4080 is more than enough to play this era and style of game at 4K with all of the beautiful settings turned up to their fullest.

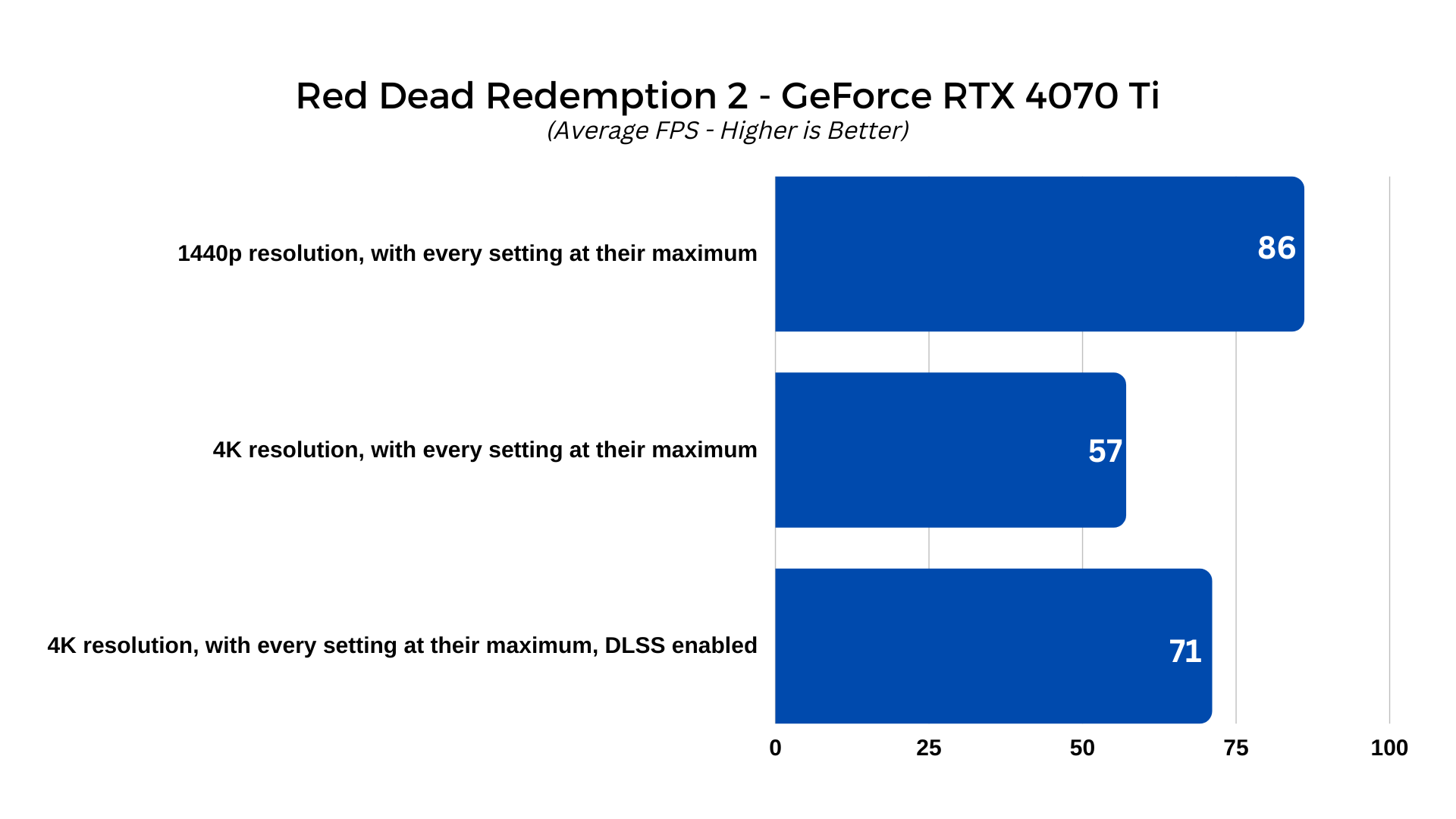

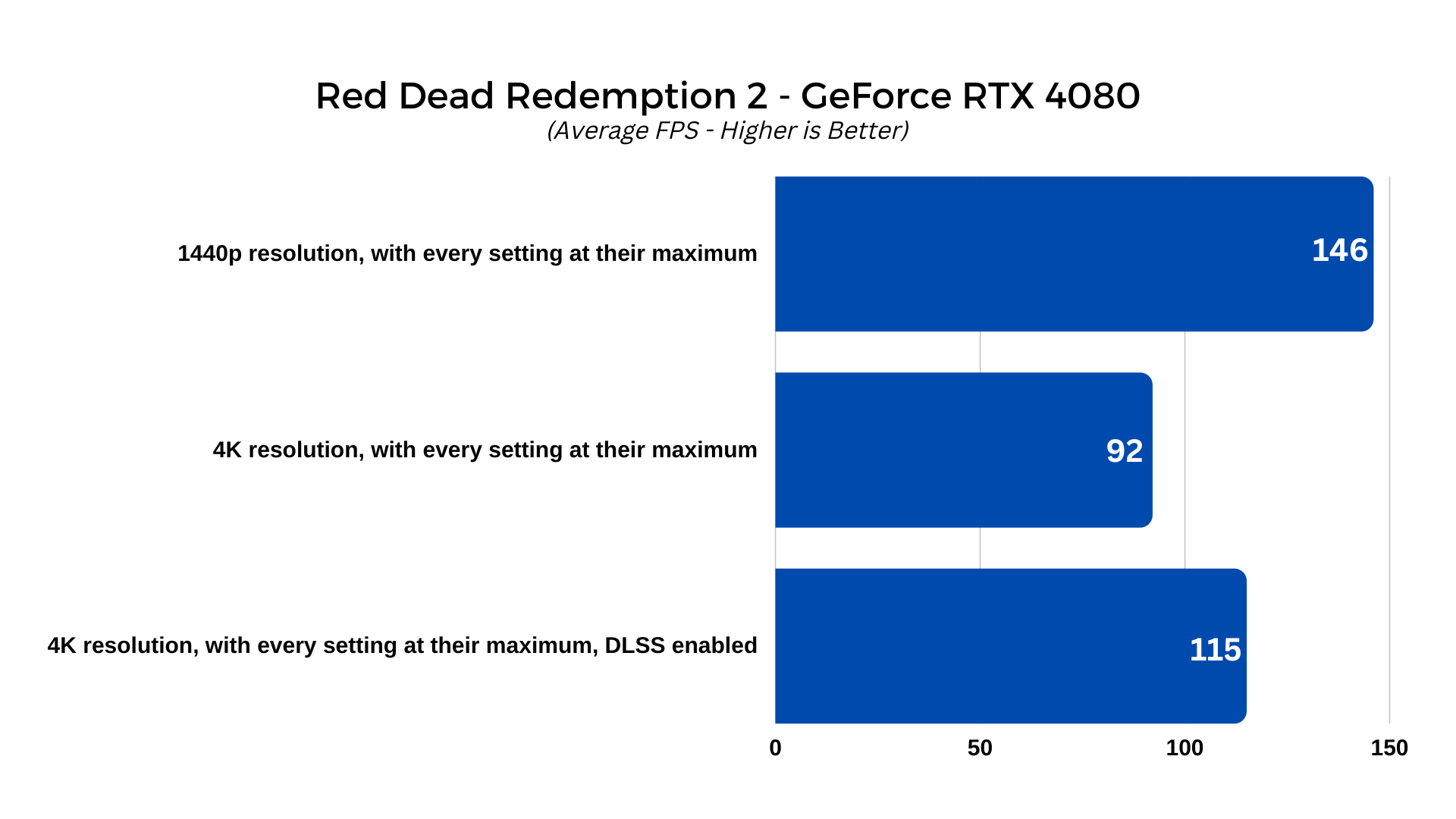

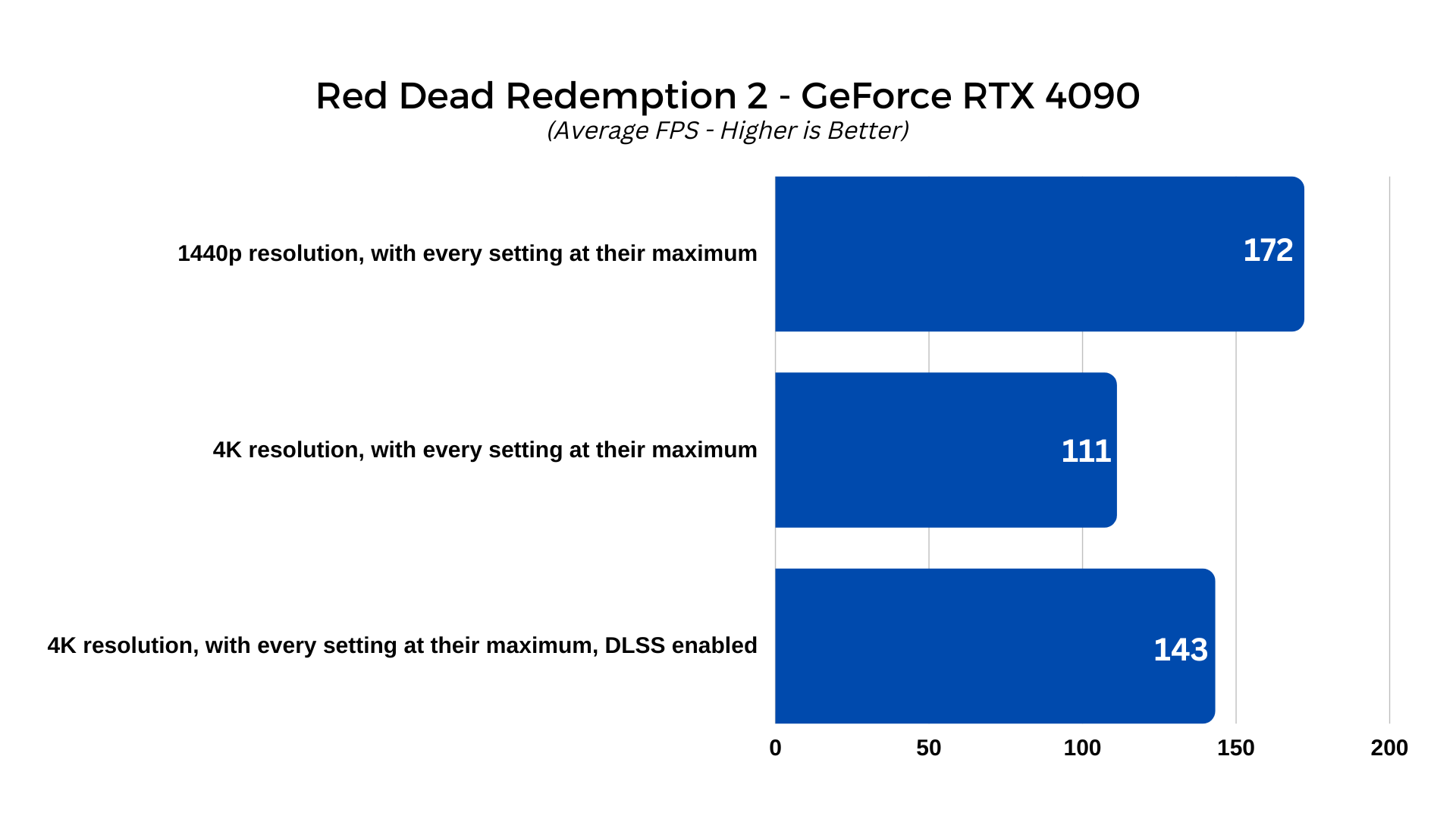

Red Dead Redemption 2

Rockstar’s Red Dead Redemption 2 might be getting a little long in the tooth, but it’s still one of the best-looking games available today. With these new graphics cards able to handle it at its prettiest, too, if you haven’t played Red Dead Redemption 2 before, using one of the new RTX 40-series cards may be the best way to enjoy it.

To try to give these cards at least a little trouble, I used the following settings:

- 1440p resolution, with every setting at its maximum

- 4K resolution, with every setting at its maximum

- 4K resolution, with every setting at its maximum, DLSS enabled

The frame rates are so high for this test that this is the last generation of graphics cards I’ll use this game for because, as pretty as it is, it just doesn’t have the sheer demand of the latest AAA games. That said, I have consistently found that Red Dead Redemption 2 delivers very low minimum frame rates in its benchmark. It doesn’t matter what I change the settings to, they’re always very low, but I notice no stuttering in the benchmark, so it’s likely a one-off moment near the beginning or end of the run.

The RTX 4070 Ti did a great job with Red Dead Redemption 2 at 1440p, managing minimum and average frame rates of 13 and 86 FPS, respectively. The game looked great at those settings, and considering how fluid it was, that’s probably where I’d play it if I was gaming on this GPU. 4K is an option, as frame rates there weren’t bad, per se, but the minimum and average of 28 and 57 FPS don’t give you the whole picture. There were some scenes where a stutter was quite noticeable, so I would be tempted to avoid that setting myself.

However, DLSS rode in to save the day, bringing its minimum and average frame rates back up to 25 and 71, and eliminating any form of stuttering. With both DLSS and FSR available in Red Dead Redemption 2, it’s a great example of a game that can be enjoyed at higher settings than many cards should be capable of, all thanks to dynamic upscaling.

That’s not something the RTX 4080 needed to rely on quite as much. It managed minimum and average frame rates of 27 and 146 FPS at 1440p, falling to 14 and 92 FPS at 4K. DLSS brought it back up to 19 and 115 FPS, and still looked great, but with the FPS as high as they are, I might be tempted to run this game at native without DLSS, guaranteeing the game will look its best even if it does mean sacrificing a few frames.

The same goes for the RTX 4090, where it managed minimum and average frame rates of 24 and 172 FPS at 1440p, and 26 and 111 FPS at 4K. DLSS brought it back up to 22 and 143, but again, I’d likely just play at native. Having an average FPS of over 100 in a single player game like this is plenty for a fluid and responsive gaming experience.

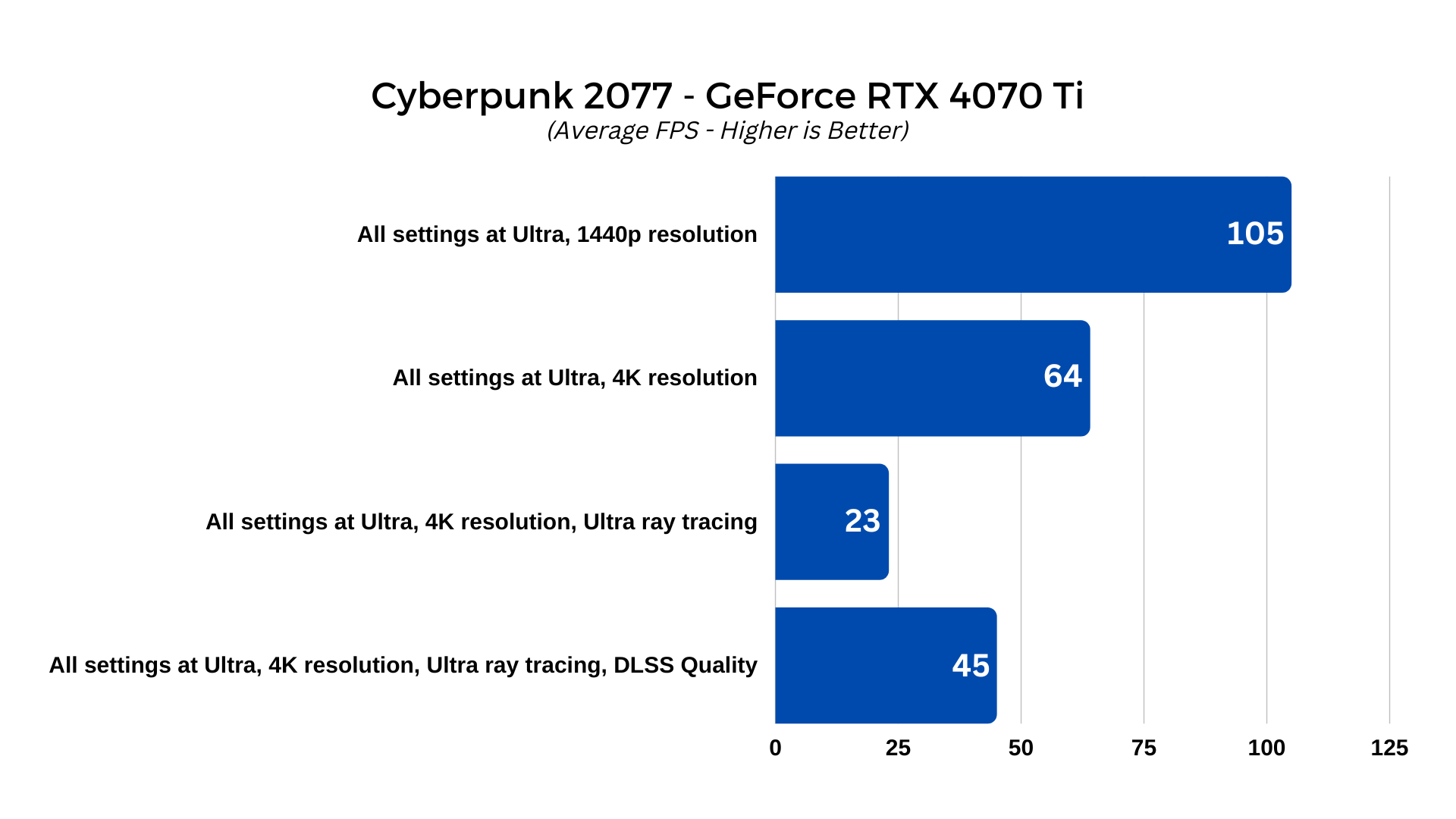

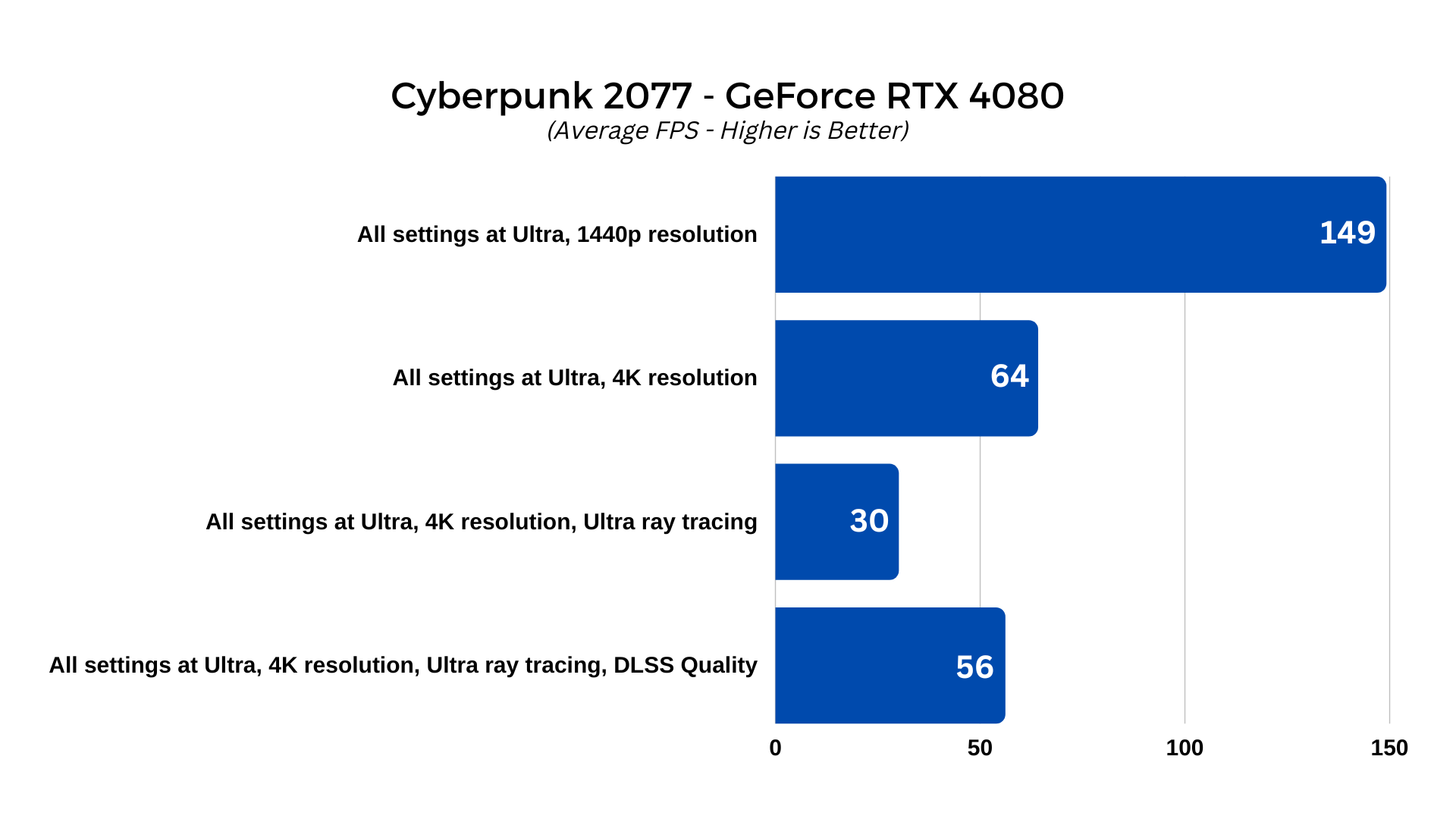

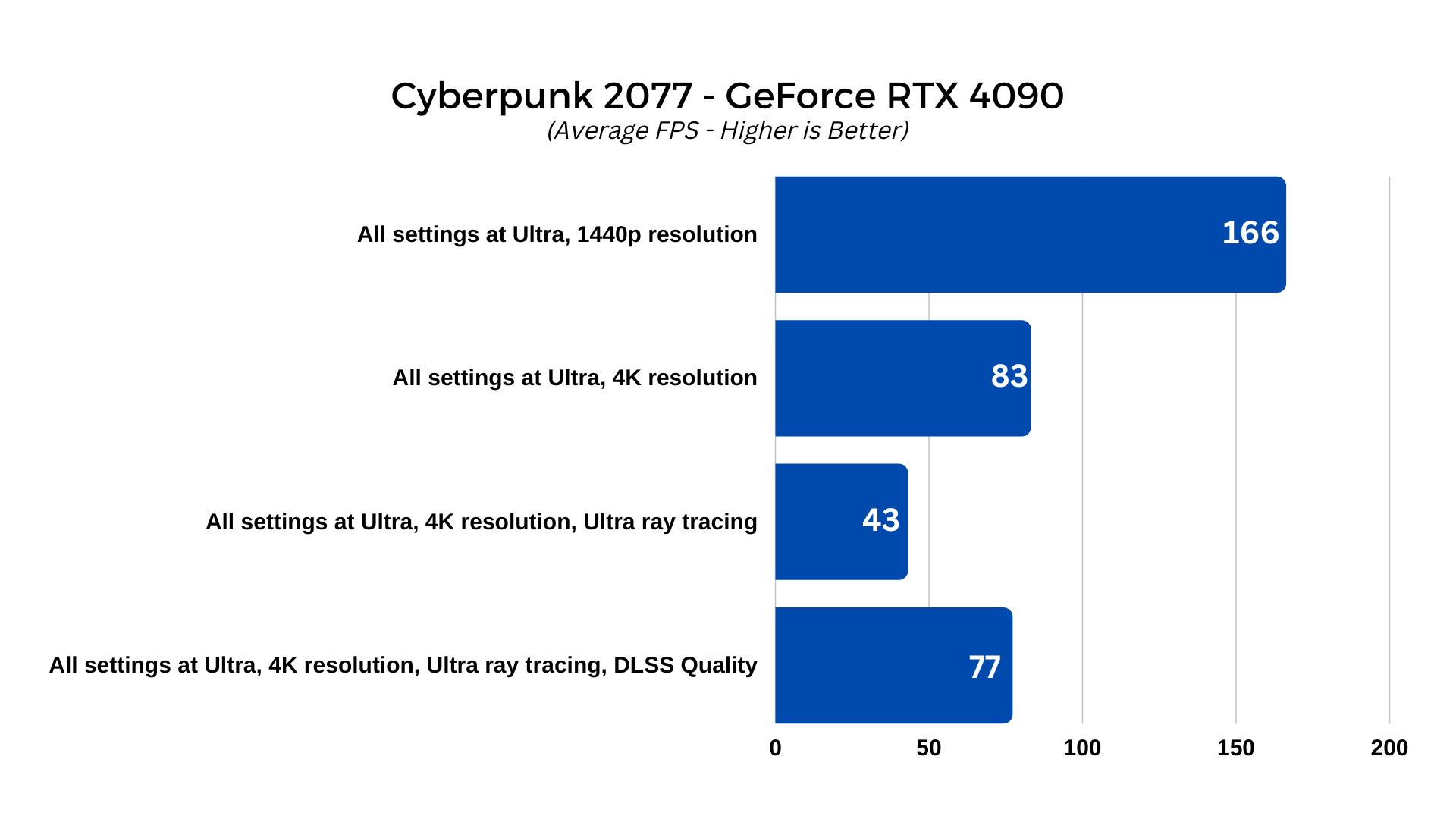

Cyberpunk 2077

Cyberpunk 2077 is a couple of years old at this point, but it’s still giving high-end gaming PCs a run for their money thanks to consistent updates to its lighting. With its combination of extremely detailed environments, heaps of NPCs, and continued advances in ray tracing, it’s the “Can it run Crysis?” of its generation. Even NVIDIA’s own presentation at the RTX 4090 reveal showed the card struggling at top settings without DLSS enabled. It will be interesting to see if those results are replicated with our real-world testing here.

With that in mind, I used the built-in benchmark to test out these three cards, at the following settings:

- All settings at Ultra, 1440p resolution

- All settings at Ultra, 4K resolution

- All settings at Ultra, 4K resolution, Ultra ray tracing

- All settings at Ultra, 4K resolution, Ultra ray tracing, DLSS Quality

We did it! We finally found a game that can make the RTX 4090 sweat (and the RTX 4080 and RTX 4070 Ti even more so). All three cards performed well at 1440p, though, with the 4070 Ti starting out strong with minimum and average frame rates of 82 and 105 FPS, respectively. It even acquitted itself well at 4K – not a resolution this card is really designed for – hitting 41 and 64 FPS. That’s a little more cinematic than some gamers might prefer, but the option is certainly there if you want a more affordable way to play this game at peak resolution.

However, when it comes to ray tracing, you’ll need to consider lowering the settings or playing on a different card, as combining that with the already stringent demands of 4K resolution brings the RTX 4070 Ti crashing down. In our third benchmark run it managed to only claw its way to minimum and average frame rates of just 6 and 23 – completely unplayable. DLSS doesn’t really save it either, unfortunately, managing just 34 and 45 FPS. That’s just about playable, but it’s far from a smooth experience. Of course, the RTX 4070 Ti was never meant as a 4K card, so this is within expectations.

The RTX 4080 fares a little better, but Cyberpunk 2077 still really turns the screws on this high-end GPU. At 1440p resolution without ray tracing, the RTX 4080 manages a minimum and average frame rate of 95 and 149 FPS, respectively. However, that’s the last time that card will see triple digit FPS, as it falls to 53 and 64 FPS at 4K. Enable ray tracing, and it falls even further, failing to hold the basic minimum comfort level of 30 FPS: it hit just 23 FPS minimum, and 30 FPS average.

That’s better than the RTX 4070 Ti managed, but it’s still arguably unplayable due to its lack of fluidity and the extreme stuttering that occurs when the frame rate dips below 30. Fortunately, DLSS rescues the venerable RTX 4080 and brings it back up to 44 and 56 FPS. While the RTX 4080 is very much a 4K graphics card, in this game, I might actually be tempted to play 1440p with ray tracing and DLSS enabled. The visual difference between that and 4K isn’t dramatic (to my eye at least) and you’ll still get a fluid and responsive gaming experience.

You might not need to make such sacrifices with the 4090, but unbelievably, you’ll still need to consider it. At 1440p it managed minimum and average frame rates of 84 and 166 FPS, respectively, while at 4K it falls to 71 and 83 FPS. Enable ray tracing, however, and even this card struggles, falling to just 33 and 43 FPS. That’s just about playable, which is a major achievement considering how much the other high-end cards struggled, but it’s still not quite the perfect experience most gamers reach for. Thankfully, there’s DLSS.

With its mountain of next-generation Tensor cores, though, it’s perhaps no surprise that the results of DLSS are even more pronounced with the RTX 4090. The upscaling algorithm again comes to the rescue bringing it back to 61 and 77 FPS, which is about as good as you can expect from any gaming PC in a maxed-out Cyberpunk 2077.

Note: Cyberpunk 2077 has incredibly in-depth settings, so if this is a game you particularly want to play with any of these new-generation RTX 40-series GPUs, there are settings you’ll be able to tweak to improve performance without sacrificing too much on visuals. That’s especially true of ray tracing, which you don’t need to run at its highest settings. Still, it’s impressive to find any game that can bring the RTX 4090 down a peg, leaving room for overclocks or future-generation cards to show us what Cyberpunk can really play like in the future.

Plague Tale: Requiem

Plague Tale: Requiem might be the best-looking game ever made (so far). A sequel to the already gorgeous and graphics-card-melting Plague Tale: Innocence, Requiem takes it to new levels of brilliance with enhanced lighting, greater character detail, and support for all of the latest graphics technologies. At the time of writing, the ray tracing update for the game is not yet available, so test results for that setting haven’t been included.

This game should put even our RTX 4090 through its paces and we can also use it to test out both DLSS when that high-powered GPU is struggling, and the new DLSS 3 frame generation feature which has made such a big splash with NVIDIA’s latest generation GPUs.

However, this game doesn’t have its own built-in benchmark. While that’s a shame, I got around it by going through a segment of the game in its first chapter where Amicia and Hugo are running through grass and trees while playing. It’s a beautiful opening to the game and has to contend with detailed up-close foliage along with expansive vistas in the distance. I ran the test at several settings and did my best to run the same route each time. It took around 40 seconds to complete, with only a variation of 0.25 seconds for a margin of error between runs.

During testing I recorded the Average, Minimum, Minimum 1%, and Minimum 0.1% frame rates using MSI Afterburner.
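For readers unfamiliar with these metrics, the sketch below shows one common way to derive them from a list of per-frame render times. It is an illustration only: the article does not document how MSI Afterburner calculates its 1% and 0.1% figures, so the definition used here (average the slowest 1 in 100 or 1 in 1,000 frames, then convert to FPS) is an assumption, and the function name and sample data are hypothetical.

```python
def summarize_frame_times(frame_times_ms):
    """Summarize a benchmark run from per-frame render times in milliseconds."""
    if not frame_times_ms:
        raise ValueError("need at least one frame time")

    slowest_first = sorted(frame_times_ms, reverse=True)

    def low_fps(one_in):
        # Average the slowest 1-in-`one_in` frames (at least one frame),
        # then convert that mean frame time to FPS.
        count = max(1, len(slowest_first) // one_in)
        mean_ms = sum(slowest_first[:count]) / count
        return 1000.0 / mean_ms

    return {
        "average": 1000.0 * len(frame_times_ms) / sum(frame_times_ms),
        "minimum": 1000.0 / slowest_first[0],
        "minimum_1_percent": low_fps(100),
        "minimum_0_1_percent": low_fps(1000),
    }


# Hypothetical capture: 990 frames at 10 ms plus 10 slow frames at 20 ms.
sample = [10.0] * 990 + [20.0] * 10
stats = summarize_frame_times(sample)
for name, fps in stats.items():
    print(f"{name}: {fps:.1f} FPS")
```

The average here is computed from total frames over total time rather than by averaging instantaneous FPS values, which would overweight the fast frames.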

The settings used for testing this game were as follows:

- All settings at Ultra, 1440p resolution

- All settings at Ultra, 4K resolution

- All settings at Ultra, 4K resolution, DLSS Quality

- All settings at Ultra, 4K resolution, DLSS Quality, Frame Generation enabled

Note: With DLSS and frame generation as optional settings in Plague Tale: Requiem, both were included in these tests. However, the game also employs its own resolution scaler when DLSS is not enabled and it cannot be disabled, so though DLSS was only utilized in the latter two benchmark runs, the resolution scaler was active in those other two settings.

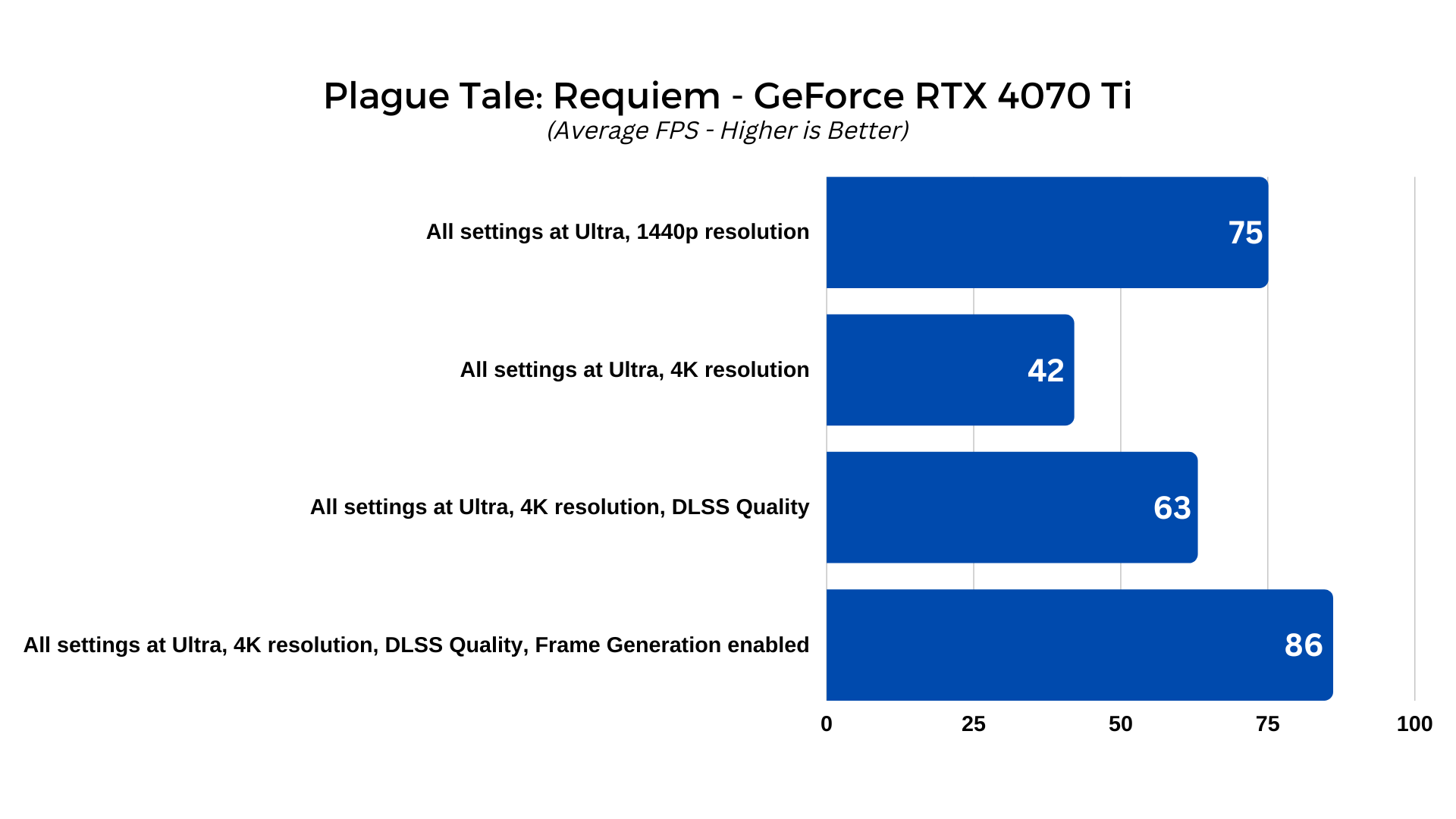

Right from the get go we can see that Plague Tale: Requiem is the most demanding game we’ve pitted these cards against. At 1440p resolution, the RTX 4070 Ti managed minimum 0.1%, minimum 1%, minimum, and average frame rates of 52, 56, 70, and 75 FPS, respectively. The game looks absolutely gorgeous at these settings, and if you’re playing on a 4070 Ti, this is arguably where you’ll find the best mix of performance and beauty. But you do have options if you want to raise the bar further.

At 4K resolution the 4070 Ti stumbles a little, but still manages to maintain frame rates of 36, 38, 39, and 42 FPS. It’s playable and doesn’t have any obvious stuttering, but it isn’t as fluid as the lower resolution. Or indeed as it is when you enable DLSS, because doing so brings the frame rates back up to 54, 56, 58, and 63 FPS, with no obvious visual artifacting as far as I could see – especially when in motion.

This is also one of the first games to support NVIDIA’s new frame generation technology, an exclusive of DLSS 3 on RTX 40-series cards. It ultimately made an even bigger difference than standard DLSS, raising frame rates again to 73, 79, 81, and 86 FPS. There was the occasional artifact that popped into view when this setting was enabled, so I might be tempted to leave it off until it’s more mature, but it certainly had a noticeable effect on how fluid the game feels, so it’s worth considering, even at lower resolutions if you want to play at even higher frame rates.

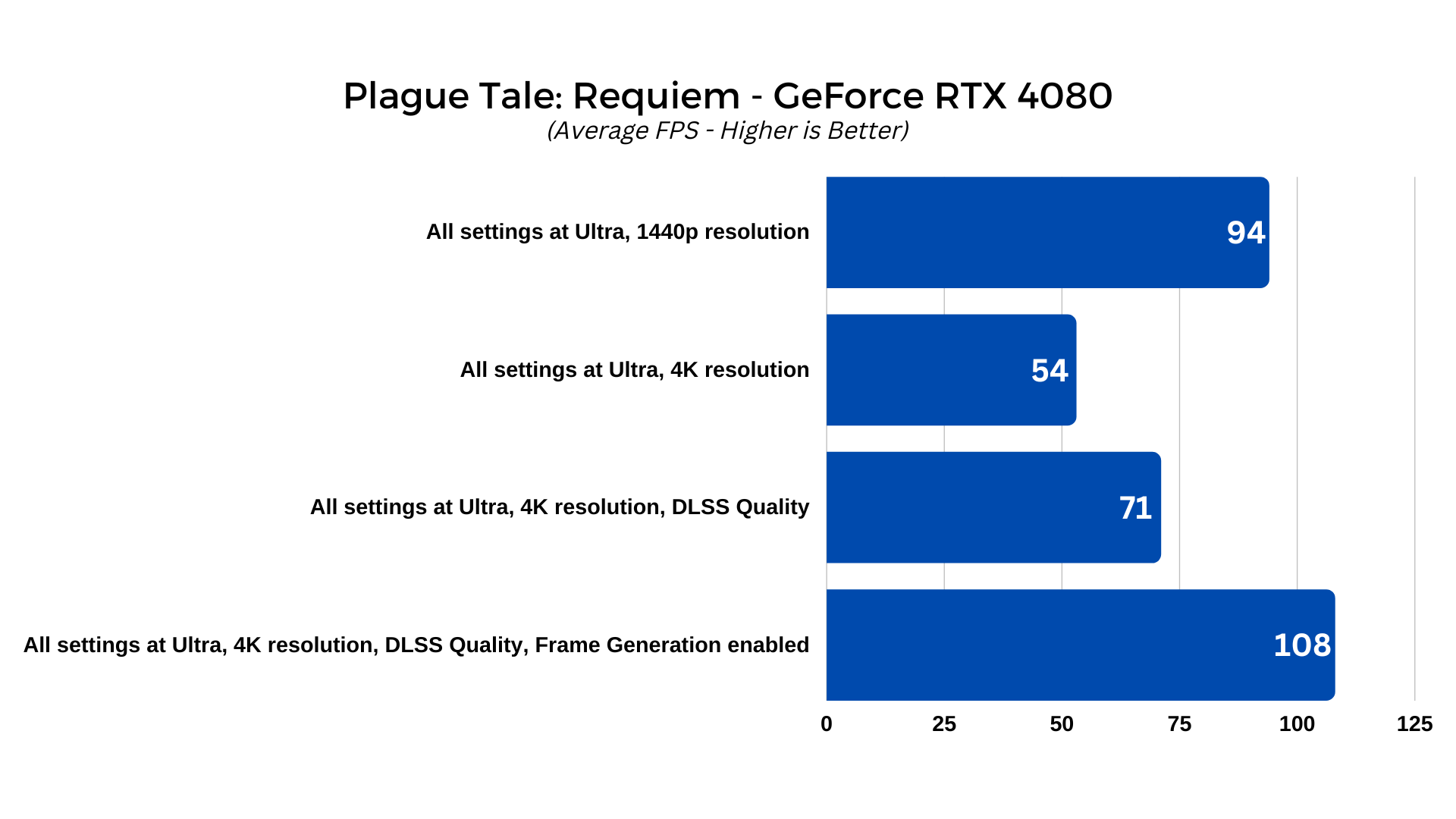

The RTX 4080 delivered better frame rates, as should be expected, but it never even broke into triple digits. At 1440p resolution, it managed minimum 0.1%, minimum 1%, minimum, and average frame rates of 69, 80, 85, and 94 FPS, respectively. At 4K it starts to struggle a bit more, hitting just 45, 47, 48, and 53 FPS.

Fortunately, DLSS can make the RTX 4080 a true 4K GPU with this demanding game. With DLSS enabled, the RTX 4080 was able to post much more respectable frame rates of 62, 68, 71, and 78 FPS. Adding frame generation into the mix meant that the RTX 4080 was able to hit triple digits for the first time, hitting 91, 99, 101, and 108 FPS, with no obvious degradation in visual quality. That’s a serious achievement for a doubling of FPS over native 4K.

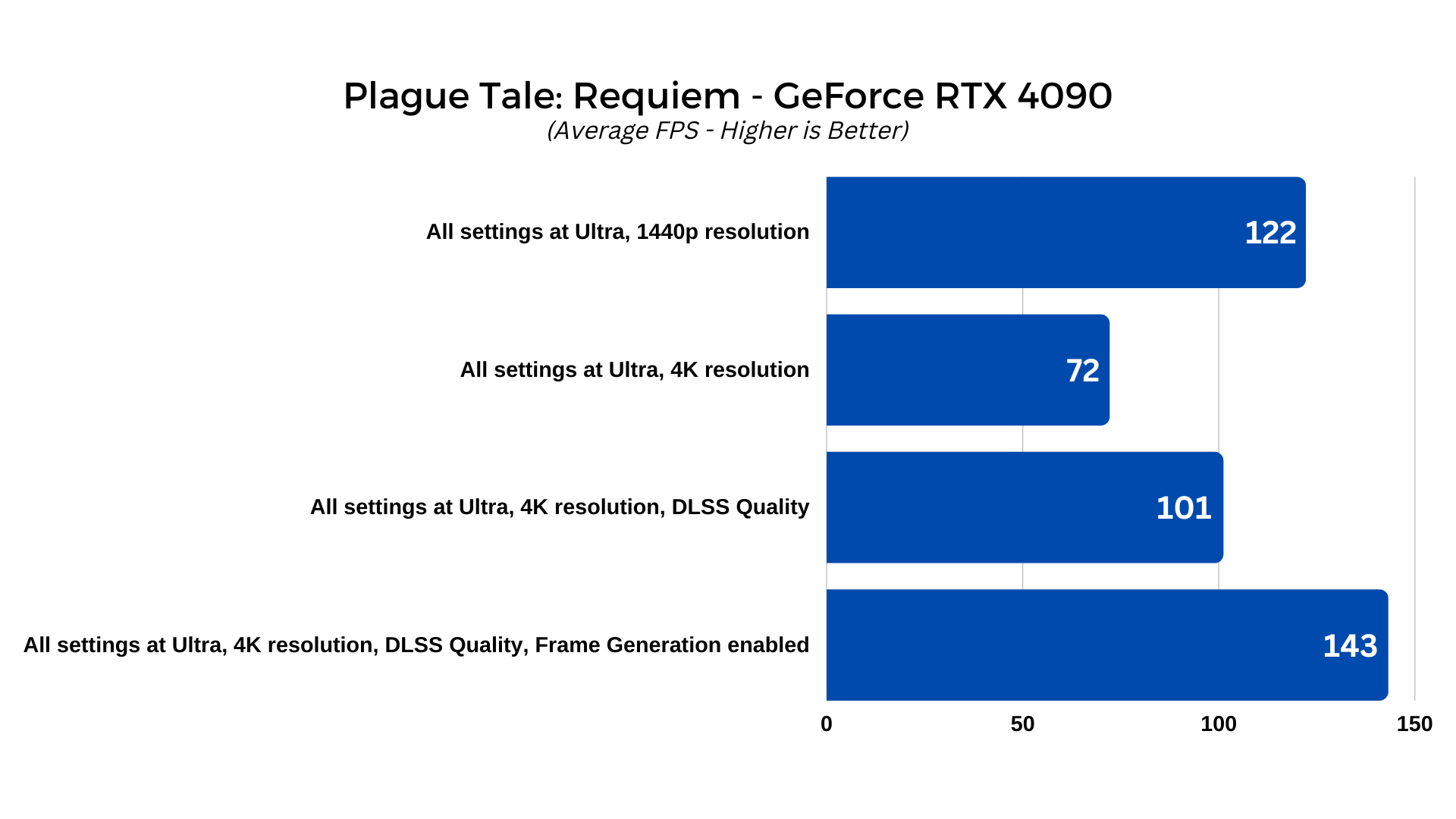

The RTX 4090 fared better, but even its raw power wasn’t enough to deliver the high frame rates we’ve seen in other games. At 1440p it managed minimum 0.1%, minimum 1%, minimum, and average frame rates of 70, 101, 111, and 122 FPS, respectively. At 4K that fell to 59, 64, 66, and 72 FPS.

DLSS nudged it to an average of 100+ FPS, managing 81, 86, 91, and 101 FPS, while enabling frame generation as well saw it boost to an impressive 116, 130, 133, and 143 FPS.
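To put the DLSS and frame generation results side by side, the sketch below divides each card’s average 4K frame rate with those features enabled by its average without DLSS. The figures are the averages reported in this section; the `speedup` helper is just an illustrative name. Keep in mind that the runs without DLSS still had the game’s own resolution scaler active, so they are not strictly native.

```python
# Average FPS at 4K in Plague Tale: Requiem, as reported in this article:
# (without DLSS, DLSS Quality, DLSS Quality + frame generation)
averages_4k = {
    "RTX 4070 Ti": (42, 63, 86),
    "RTX 4080": (53, 78, 108),
    "RTX 4090": (72, 101, 143),
}


def speedup(baseline_fps, new_fps):
    """Return how many times faster new_fps is than baseline_fps."""
    return new_fps / baseline_fps


for card, (baseline, dlss, dlss_fg) in averages_4k.items():
    print(
        f"{card}: DLSS {speedup(baseline, dlss):.2f}x, "
        f"DLSS + frame generation {speedup(baseline, dlss_fg):.2f}x"
    )
```

On these numbers, DLSS Quality alone is worth roughly 1.4x to 1.5x on average frame rate, and adding frame generation takes all three cards to about 2x.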

It’s amazing to see these new cards struggle in any game without ray tracing enabled. That’s coming in a later update, and I can already feel even these titanic GPUs quaking in their PCI Express slots.

Power and thermals

A lot was made of the potential power draw of RTX 40-series graphics cards before their release, and though it didn’t end up being the doomsday scenario that some predicted, there’s no denying that these new cards are power hungry. But they actually run a lot cooler than I would have imagined.

To see how much power they actually draw and what kind of temperatures they hit, I used HWiNFO to keep track of total GPU package power and core, hotspot, and memory temperatures during testing. To really push them, I fired up FurMark and ran a 4K stress test with 8x anti-aliasing and let it run for 30 minutes before noting temperatures and power draw.

The test was conducted at an ambient temperature of 20 degrees Celsius, but it was completed on an open-air test bench where airflow is much higher than in most PC cases. Your temperatures may be different in a daily driver gaming PC.

At peak usage, the RTX 4070 Ti hit its rated TDP of 280W immediately, and it bumped around that limit throughout the test runs. That feels quite modest compared to its companions in the RTX 40-series, but it’s still a lot of power to pull and any PC running this card will want some hefty system cooling to dump all of that extra heat out of your case.

The cooler on the card itself proved pretty capable, keeping the GPU to 75 degrees on the core – although its hotspot did bounce up to 90 degrees after a while which makes me question whether this GPU might start to bump up against thermal limits after prolonged sessions in a closed gaming PC.

The RTX 4080 and RTX 4090 showed themselves to be the flagship cards that they are, with both pulling their rated power at peak usage. The RTX 4080 demanded 320W from the power supply, while the RTX 4090 pulled as much as 448W. That’s a lot of power for both, and a lot of eventual heat energy to dump out into the room, but both cards were incredibly quiet – almost silent during even intense loads – and amazingly, they stayed well below their temperature thresholds, too.

The RTX 4090 had a peak core temperature of just 65 degrees, with the hotspot not even breaching 80 degrees. The RTX 4080 ran even cooler, at just 64 degrees on the core and again, just under 80 degrees on the hotspot.

It would seem that the coolers on these two aftermarket boards are excellent and do a wonderful job of keeping temperatures and noise levels down.

Overclocking

Although these cards come with some factory overclocks applied, there’s no harm in seeing if they can go a little further. Overclocking is a time-consuming process and to really fine tune these GPUs to their absolute limits goes beyond the scope of this article, but I did spend some time pushing each card as far as I could.

For the RTX 4070 Ti, I used MSI Afterburner and raised the thermal limits so that the card had a little more headroom, but unfortunately on this version of the 4070 Ti, there is no option to raise the power limit. Fortunately, the stock power limit seems to be more than enough, as it didn’t appear to constrain my overclocking efforts.

After some tweaking, I was able to raise the core by a further 150MHz, and the memory by a staggering 2,000 MHz. This did result in some slight temperature increases, with the core hitting 77 degrees and the hotspot 92 degrees, but those didn’t appear to be a problem and the cooler’s fan speed only increased a little to compensate.

The eventual score change made the overclock well worth it, though. While live clock speeds didn’t seem to quite reach the overclock I’d pushed the card towards, they were still high enough to see our 3DMark Time Spy Extreme score rise from 11,168 combined, and 11,007 GPU, to 11,816 and 11,755, respectively. That’s a tangible bump in performance effectively for free, since power draw and temperatures were almost unchanged.

While the RTX 4080 and RTX 4090 do see improvement, it’s not nearly as marked as with the RTX 4070 Ti. Both had much more power and thermal room to play with – though that did open up more room for improved performance as well. With both cards I used MSI Afterburner to raise the power and thermal limits to their maximums: 125% in the case of the RTX 4080, and 133% on the RTX 4090. This automatically caused the cards to boost around 100MHz higher on their cores, which was a good start. Temperatures stayed roughly the same, but fan speeds and power draw spiked notably on each.

Taking things further, I began to gradually raise the core clocks of each card while using FurMark to test stability. I was eventually able to raise the RTX 4080’s core by 160MHz to 2,925MHz. The RTX 4090 went further, with a stable 290MHz overclock bringing the card to a solid 3,000MHz.

Memory overclocking was even more impressive. At the same power settings, I was able to bring the memory clock of the RTX 4080 up by an incredible 1,600MHz to 12,800MHz. The RTX 4090 saw a big uplift of 500MHz, too, reaching 11,000MHz stable.

These overclocks saw power draw on both cards jump spectacularly, to 543W for the RTX 4090, and 390W for the RTX 4080. Both cards remained stable, however, despite running on an 850W PSU (the minimum recommended wattage for the RTX 4090 at stock). Temperatures rose to 70 degrees on the RTX 4090 and 68 degrees on the RTX 4080, but they stayed quiet still, with fan speeds only rising by about 10%.

But what about performance? These higher clocks saw the RTX 4080 go from stock Time Spy Extreme combined and GPU scores of 13,493 and 13,904, to 14,599 and 15,141 when overclocked. The RTX 4090 went from 18,085 and 19,269, to 18,473 and 20,462.

That’s a near 9% bump in performance for the RTX 4080, and an improvement of between 2 and 6% for the RTX 4090. Considering how impressive their performance is already, it’s exciting to see such gains being made possible through quick overclocks.
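The percentages can be checked directly from the Time Spy Extreme scores quoted in this section. The sketch below is a simple worked calculation; the `percent_gain` helper and the dictionary layout are illustrative choices, while the scores themselves come straight from the results above.

```python
# 3DMark Time Spy Extreme scores from this article: (stock, overclocked)
scores = {
    "RTX 4070 Ti": {"combined": (11168, 11816), "gpu": (11007, 11755)},
    "RTX 4080": {"combined": (13493, 14599), "gpu": (13904, 15141)},
    "RTX 4090": {"combined": (18085, 18473), "gpu": (19269, 20462)},
}


def percent_gain(stock, overclocked):
    """Return the improvement of overclocked over stock, as a percentage."""
    return (overclocked - stock) / stock * 100.0


for card, results in scores.items():
    combined = percent_gain(*results["combined"])
    gpu = percent_gain(*results["gpu"])
    print(f"{card}: combined +{combined:.1f}%, GPU +{gpu:.1f}%")
```

Run as written, this gives gains of roughly 8.2% (combined) and 8.9% (GPU) for the RTX 4080, and 2.1% and 6.2% for the RTX 4090, with the RTX 4070 Ti landing at about 5.8% and 6.8%.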

There is no voltage adjustment for these cards just yet with commercial tools. For that you’ll need to wait for a custom BIOS or further updates to already available overclocking tools. With such impressive thermal headroom on these cards, though, I’d be interested to see what they can do with much higher power limits and some core voltage adjustment. It feels like they have a lot further they could go.

Conclusion

There’s no denying that the new RTX 40-series graphics cards are amazingly powerful and come equipped with the dedicated RT and Tensor cores to really make ray tracing viable in even the most demanding of games. True, at the very top end in games like Cyberpunk 2077, even the otherwise-overpowered RTX 4090 does struggle, but that merely cements it as the obvious choice for gamers looking to play at 4K with every setting maxed out.

The RTX 4080 and 4070 Ti fit into their own lanes well enough, though: the RTX 4070 Ti is a top-tier 1440p card with the ability to handle 4K when you want it, and the RTX 4080 is a solid alternative to the RTX 4090 at a more budget-friendly price.

That said, if you have room in your budget for the RTX 4090, the performance is staggering. While it has a large power draw and is larger than the average card, it is absolutely in a class of its own. It feels like a next-generation card alongside the RTX 4080, which is itself a powerful next-gen GPU. The RTX 4090 delivers the best 4K performance we’ve ever seen and if you want to play the latest AAA games with ray tracing cranked all the way up, there’s no GPU that can get even close to what it can do.

The other cards aren’t slouches, though, and any of these new 40-series GPUs will see you gaming at high settings and high frame rates for many years to come.